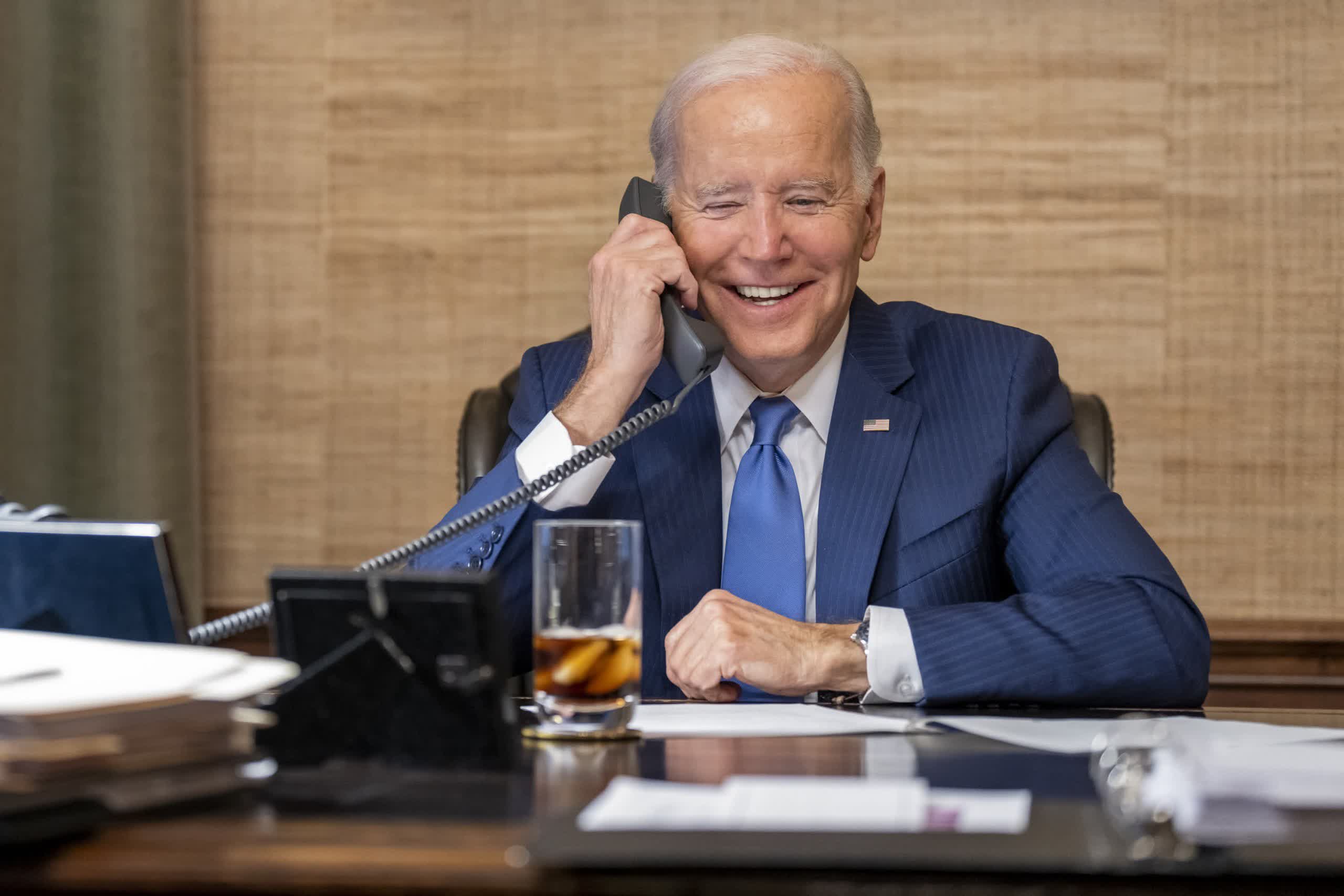

What just happened? The FCC is moving to make the use of AI-generated voices in robocalls illegal after a message that was designed to sound like President Biden told New Hampshire residents not to vote in last month's primary. The agency is concerned about the audio deepfake tech being used to confuse and deceive consumers by imitating the voices of "celebrities, political candidates, and close family members."

FCC Chairwoman Jessica Rosenworcel's proposal would make the use of AI-generated voices in robocalls illegal under the Telephone Consumer Protection Act, or TCPA.

The TCPA, enacted in 1991, restricts telemarketing calls and the use of automatic telephone dialing systems and artificial or prerecorded voice messages. It also requires telemarketers to obtain prior express written consent from consumers before robocalling them. The FCC wants AI-generated voices to be held to these same standards. The five members of the Commission are expected to vote on the proposal sometime in the coming weeks.

"AI-generated voice cloning and images are already sowing confusion by tricking consumers into thinking scams and frauds are legitimate," Rosenworcel said in a statement. "No matter what celebrity or politician you favor, or what your relationship is with your kin when they call for help, it is possible we could all be a target of these faked calls."

Voice-fraud detection company Pindrop analyzed the fake 39-second message that sounded like Biden telling New Hampshire residents not to vote and found that it was created using a text-to-speech engine made by ElevenLabs. The startup uses artificial intelligence software to replicate voices in more than two dozen languages.

Bloomberg reports that ElevenLabs confirmed its software was used in the New Hampshire incident and banned the account that was responsible. The New Hampshire Attorney General's office has announced an investigation into the fake Biden calls.

An FCC spokesperson said the potential change to the law will give State Attorneys General new powers in the fight against robocall scammers who use AI while also protecting consumers.

The FCC has been battling robocalls for years. In December 2022, it proposed a $300 million fine against an illegal transnational robocalling operation that made more than five billion automated calls to over 500 million phone numbers within a three-month period in 2021. In 2020, it also handed out a $225 million fine for misleading robocalls.

Cloning the voices of family members has become a popular tactic among criminals looking to steal money from unsuspecting victims. This crime has been on the rise as generative AI technologies become cheaper, easier to acquire, and more convincing.