In brief: ElevenLabs, the startup that made headlines earlier this year when its AI tech was used to clone President Biden's voice in a series of robocalls, has partnered with an AI detection company to fight deepfakes. It's an important move during an election year in which misinformation is proving a big problem.

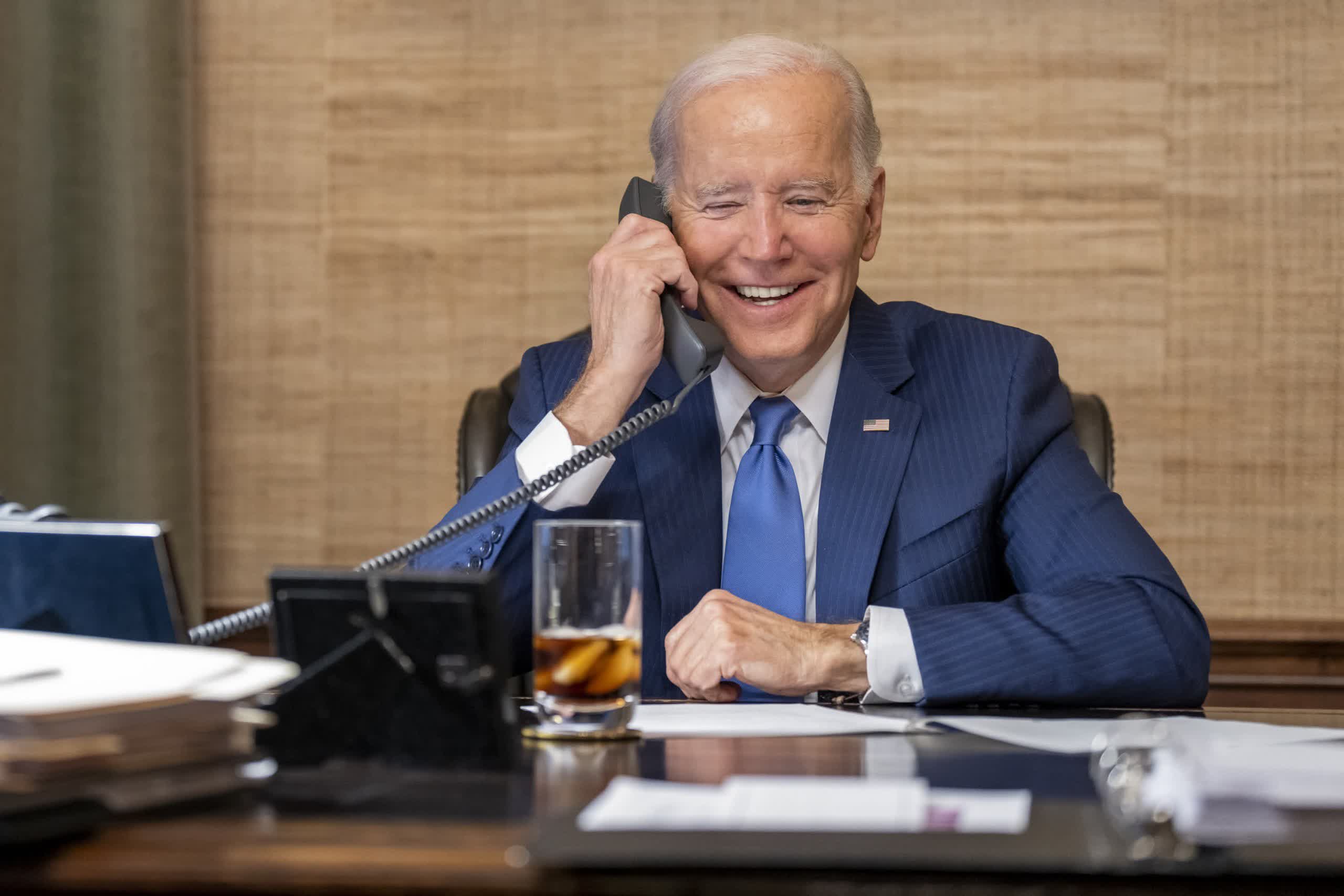

In January, a 39-second robocall went out to voters in New Hampshire telling them not to vote in the Democratic primary election, but to "save their votes" for the November presidential election. The voice handing out this advice sounded almost exactly like Joe Biden. Voice-fraud detection company Pindrop Security later analyzed the call and concluded that it was created using a text-to-speech engine made by ElevenLabs, a finding the company confirmed. The person who created the deepfake was suspended from ElevenLabs' service.

Now, Bloomberg reports that ElevenLabs has partnered with Reality Defender, a US-based firm that offers its deepfake detection services to governments, officials and enterprises.

The partnership appears to be a mutually beneficial one. Reality Defender gets access to ElevenLabs' voice cloning data and models, allowing it to better detect fakes, while the AI firm can use Reality Defender's tools to help prevent its products from being misused. An ElevenLabs spokesperson said there was no financial element to the deal.

ElevenLabs prohibits the cloning of a person's voice without their permission. Its services are advertised as being able to bridge language gaps, restore voices to those who have lost them, and make digital interactions feel more human.

Deepfake technology, which can create incredibly realistic video and audio of real people, is becoming increasingly advanced. Not only is it being used to place victims in porn, but it's also increasingly being abused in the political arena, a big concern during an election year.

The incident with Biden's voice led to the FCC's proposal to make the use of AI-generated voices in robocalls illegal under the Telephone Consumer Protection Act, or TCPA. The agency cited concerns that the technology was being used to confuse and deceive consumers by imitating the voices of "celebrities, political candidates, and close family members."

During his State of the Union address in March, Biden called for a ban on AI voice impersonation, though the extent of the proposed ban was unclear.