Mirrors to the soul: In just a few years, contemporary generative AI systems have come a long way in creating realistic-looking humans. Eyes and hands remain their most significant stumbling blocks. Still, models like Stable Diffusion are getting proficient at generating humans that, if not perfect, are at least easy to edit, which has sparked concerns about misuse.

Researchers at the University of Hull have recently revealed a groundbreaking method to identify AI-generated deepfake images by analyzing reflections in human eyes. Last week, the team unveiled the technique at the Royal Astronomical Society's National Astronomy Meeting. The method employs tools used by astronomers to study galaxies to examine the consistency of light reflections in eyeballs.

Adejumoke Owolabi, an MS student at the University of Hull, led the research team supervised by Astrophysics Professor Dr. Kevin Pimbblet.

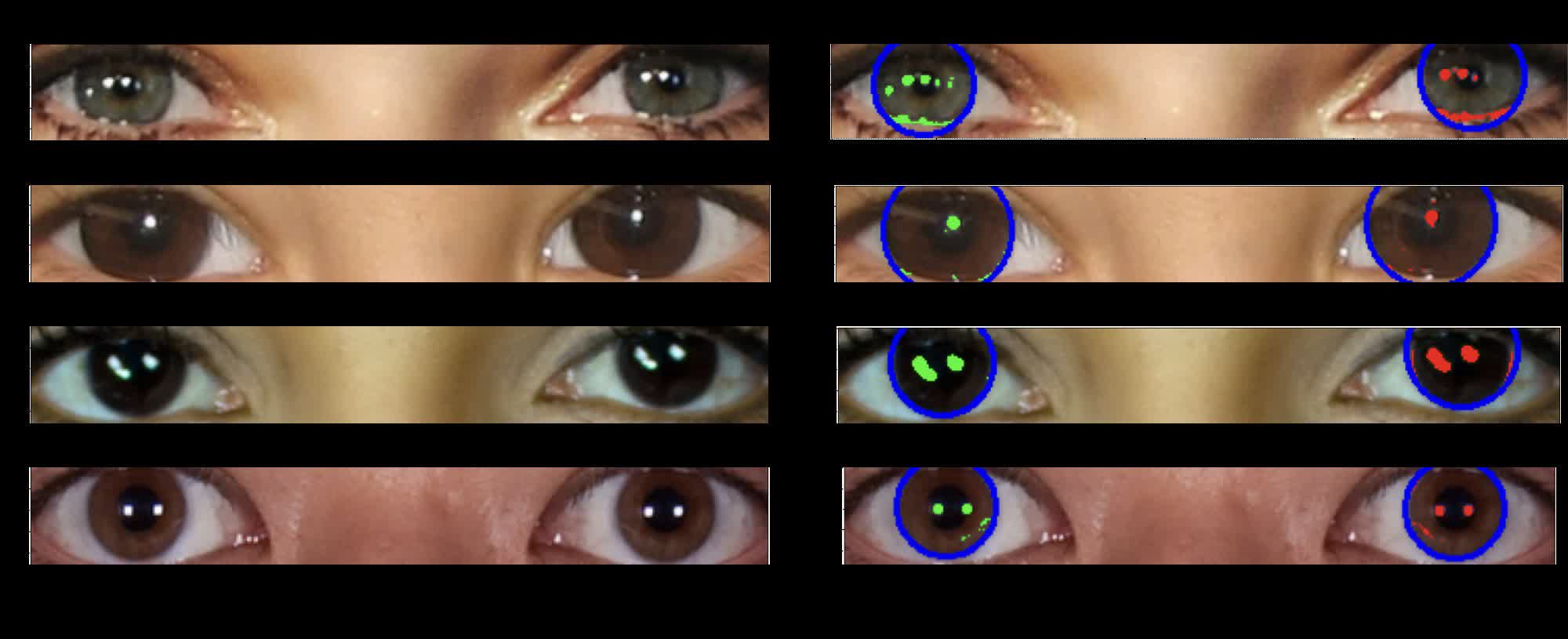

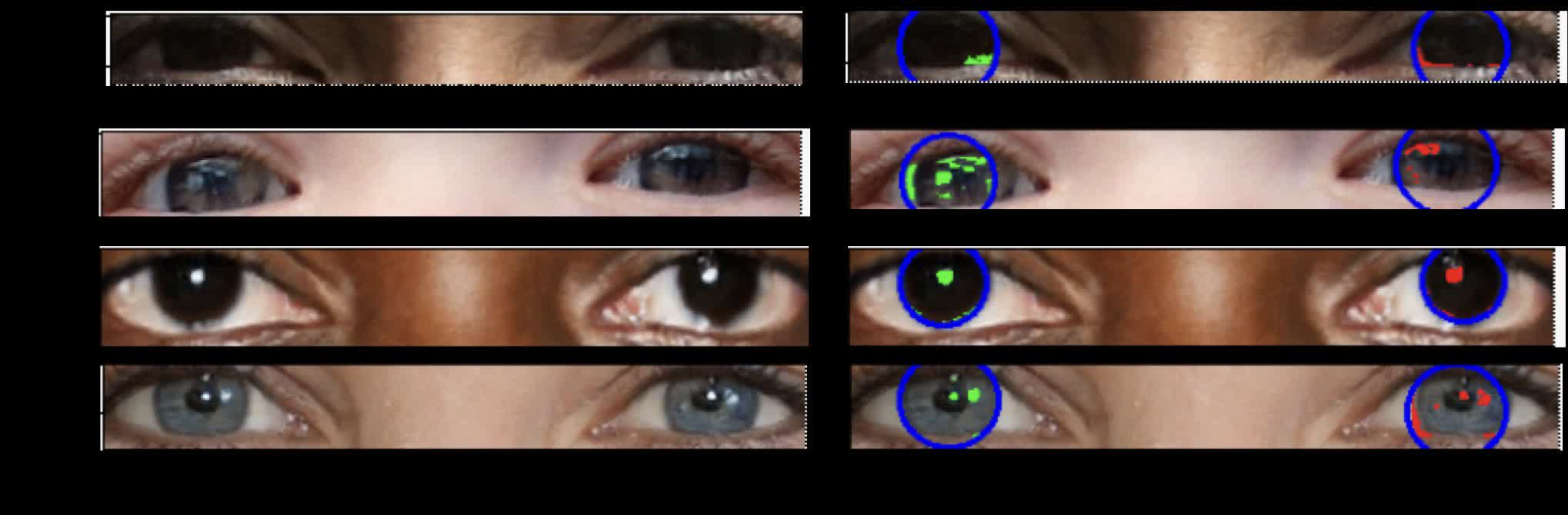

The detection technique works on the principle that a pair of eyeballs will reflect light sources similarly. In genuine images, the placement and shape of light reflections are consistent in both eyes. By contrast, many AI-generated images don't account for this, leading to reflections that are mismatched in position and shape between the two eyes.

The astronomy-based approach to deepfake detection might seem excessive since even a casual photo analysis can reveal inconsistencies in eye reflections. However, using astronomy tools to automate the measurement and quantification of the reflections is a novel advancement that can confirm suspicions, potentially providing reliable legal evidence of fraud.

Pimbblet explained that Owolabi's technique automatically detects eyeball reflections and runs their morphological features through indices to compare the similarity between the left and right eyeballs. Their findings showed that deepfakes often exhibit differences between the pair of eyes.

The researchers pulled concepts from astronomy to quantify and compare eyeball reflections. For example, they can assess the uniformity of reflections across eye pixels using the Gini coefficient, which astronomers use to measure how light is distributed across the pixels of a galaxy image. A Gini value closer to 0 indicates evenly distributed light, while a value nearing 1 suggests light concentrated in a single pixel.
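To make the Gini comparison concrete, here is a minimal Python sketch. It assumes the rank-ordered form of the Gini coefficient commonly used in galaxy morphology; the article does not say which exact formula or software the Hull team used, and the function names and sample pixel values below are hypothetical.

```python
def gini(pixels):
    """Gini coefficient of a flat list of pixel brightness values.

    Assumed form (common in galaxy morphology, not confirmed by the source):
    G = sum_i (2i - n - 1) * |x_i| / (mean * n * (n - 1)), with x sorted ascending.
    """
    values = sorted(abs(float(p)) for p in pixels)
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        return 0.0  # no meaningful distribution to measure
    mean = total / n
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def gini_difference(left_pixels, right_pixels):
    """Absolute gap between the Gini values of the two eyes' reflections."""
    return abs(gini(left_pixels) - gini(right_pixels))


# Hypothetical reflection patches, flattened to lists of brightness values.
uniform_patch = [1, 1, 1, 1]   # light spread evenly
single_pixel = [0, 0, 0, 5]    # all light in one pixel

print(gini(uniform_patch))     # 0.0
print(gini(single_pixel))      # 1.0
print(gini_difference(uniform_patch, single_pixel))
```

A large gap between the two eyes would be a hint of inconsistency rather than proof. The article gives no threshold, so any cutoff would have to be chosen and validated separately.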

"To measure the shapes of galaxies, we analyze whether they're centrally compact, whether they're symmetric, and how smooth they are. We analyze the light distribution," Pimbblet explained.

The team also explored using CAS parameters (concentration, asymmetry, smoothness), another astronomy tool for measuring galactic light distribution. However, this method was less effective in identifying fake eyes.
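Of the three CAS parameters, asymmetry is the simplest to illustrate: rotate the image 180 degrees and measure how much it differs from the original. The Python sketch below assumes one common normalization (summed absolute residual divided by total absolute flux, with no background correction), which may differ from what the researchers used. The function name and sample grids are hypothetical, and concentration and smoothness are omitted.

```python
def asymmetry(image):
    """Rotational asymmetry of a 2D grid (list of rows) of pixel values.

    Assumed definition: sum(|I - I_rotated_180|) / sum(|I|).
    Returns 0.0 for a perfectly symmetric image.
    """
    # Rotating by 180 degrees = reversing the row order and each row.
    rotated = [row[::-1] for row in image[::-1]]
    residual = sum(
        abs(a - b)
        for row, rot_row in zip(image, rotated)
        for a, b in zip(row, rot_row)
    )
    total = sum(abs(a) for row in image for a in row)
    return residual / total if total else 0.0


# Hypothetical reflection patches.
symmetric = [
    [1, 2, 1],
    [2, 5, 2],
    [1, 2, 1],
]
lopsided = [
    [4, 0],
    [0, 0],
]

print(asymmetry(symmetric))  # 0.0
print(asymmetry(lopsided))   # 2.0
```

As with the Gini values, the comparison of interest would be between the left-eye and right-eye scores, not either score on its own.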

While the eye-reflection technique shows promise, it may not be foolproof if AI models evolve to incorporate physically accurate eye reflections. It seems inevitable that GenAI creators will correct these imperfections in time. The method also requires a clear, up-close view of eyeballs to be effective.

"There are false positives and false negatives; it's not going to get everything," Pimbblet cautioned. "But this method provides us with a basis, a plan of attack, in the arms race to detect deepfakes."

Image credit: Adejumoke Owolabi