Nvidia's RTX Remix goes open source, giving classic games an AI-powered remastering renaissance

The floodgates have been opened to modders

OpenAI's internal discussion board about AI development was compromised by hackers

The company chose not to inform any outside authority about last year's incident

Apple AI home device could merge the HomePod and Apple TV

Competing with Amazon and Google

Cloudflare offers one-click solution to block AI bots

Why it matters: There is a growing consensus that generative AI has the potential to make the open web much worse than it was before. Currently, all big tech corporations and AI startups rely on scraping all the original content they can off the web to train their AI models. The problem is that the overwhelming majority of websites aren't cool with that, nor have they given permission for it. But hey, just ask Microsoft's AI CEO, who believes content on the open web is "freeware."

New technology enables GPUs to use PCIe-attached memory for expanded capacity

It could be a game changer for AI and HPC applications

AI and Ukraine drone warfare are bringing us one step closer to killer robots

Weapons are being deployed with minimal human involvement

Even staunch fans are calling out Apple's less-than-transparent AI training data harvesting

It claims most training samples are Apple-owned, but what about the rest?

Almost half of all web traffic is bots, and they are mostly malicious in nature

AI algorithms are making everything worse for companies, content creators, and users

Google's greenhouse gas emissions are growing because of AI, and AI could help with that

Generative algorithms need a lot of energy

Pixel 9 phones could ship with a Microsoft Recall-like feature called "Google AI"

It will be a lot less creepy, though

Microsoft AI CEO: Content on the open web is "freeware" for AI training

The BS claim stirs a hornet's nest of copyright concerns

Is there a sustainable model for AI factories?

Navigating the heavy freight of AI data processing

Editor's take: Investors usually hate airlines – they're capital-intensive, have high operating expenses, limited suppliers, and no way to differentiate with customers. How are AI factories any different?

Bill Gates says we don't have to worry about AI energy use

"Let's not go overboard on this"

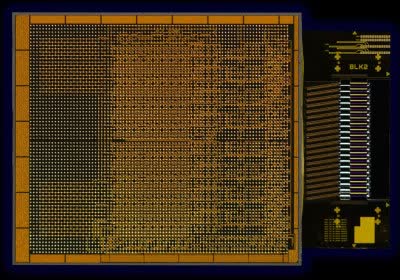

Move over GPUs, with 1,536 cores the Thunderbird RISC-V CPU is ready to eat your lunch

Open source enables small industries to participate in the accelerator boom

Google Gemini is not as good at analysis as its hype says, other AI models also struggle

The big picture: If there's one thing that generative AI is supposed to be good at, it's analyzing the written word. However, two studies suggest that this ability may have been overhyped. One study demonstrates that Gen AI struggles with understanding long-form books, while another shows that these models find answering questions about videos challenging. This is something companies should consider as they augment their workforce with Gen AI.

Audi is adding ChatGPT into existing cars going back to 2021, more going forward

Use ChatGPT to control your car with your voice

Intel says its optical interconnect chiplet technology is a milestone in high-speed data transmission

It can already support 4 terabits per second bidirectional data transfer across 100 meters

Google Translate adds support for 110 new languages using the power of AI

Cantonese and Punjabi are among the major new additions

Google, Snap, Meta and many others are "quietly" changing privacy policies to allow for AI training

It is sneaky and possibly illegal, according to the FTC

AMD approached to make world's fastest AI supercomputer powered by 1.2 million GPUs

Forward-looking: It's no secret that Nvidia has been the dominant GPU supplier to data centers, but there is now a very real possibility that AMD might become a serious contender in this market as demand grows. AMD was recently approached by a client asking it to build an AI training cluster consisting of a staggering 1.2 million GPUs. That would potentially make it 30x more powerful than Frontier, the current fastest supercomputer. AMD supplied less than 2% of data center GPUs in 2023.

The PC market is on the rebound, thanks to Windows 10's looming end-of-support date and AI PCs

Up 5% this year, 8% in 2025

Energy-efficient AI model could be a game changer: 50 times better efficiency with no performance hit

Cutting corners: Researchers from the University of California, Santa Cruz, have devised a way to run a billion-parameter-scale large language model using just 13 watts of power – about as much as a modern LED light bulb. For comparison, a data center-grade GPU used for LLM tasks requires around 700 watts.

Deepfaking it: GenAI is more likely to be misused to influence your vote than to steal your money

Expect to see more deepfake videos this political season

Google could launch AI chatbots based on celebs and influencers later this year

Imitating Meta's unpopular chatbots. But why?