Forward-looking: Nvidia will be showcasing its Blackwell tech stack at Hot Chips 2024, with pre-event demonstrations this weekend followed by presentations at the main event next week. It's an exciting time for Nvidia enthusiasts, who will get an in-depth look at some of Team Green's latest technology. What will likely go unmentioned, however, are the reported delays to Blackwell GPUs, which could push back the timelines of some of these products.

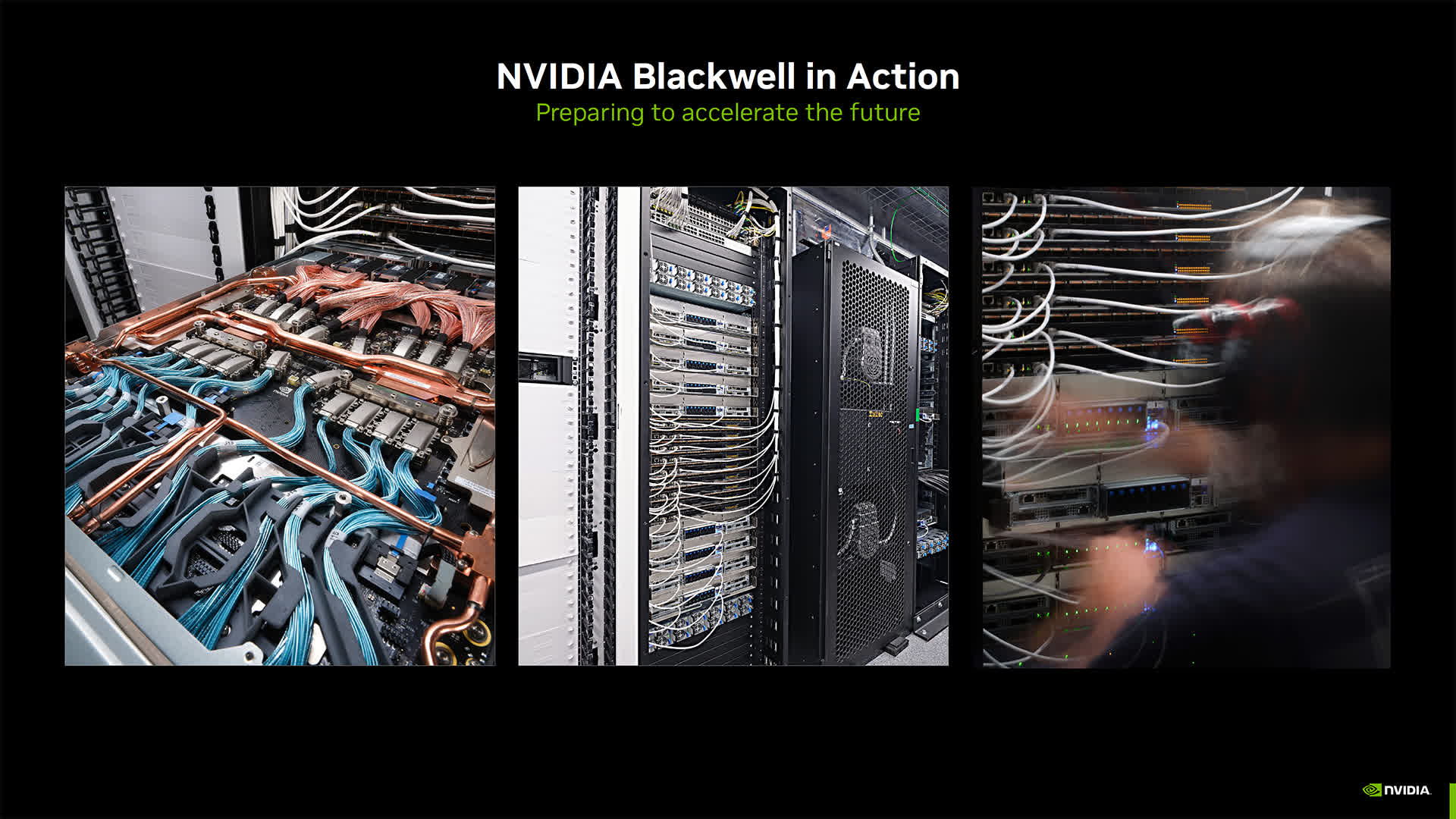

Nvidia is determined to redefine the AI landscape with its Blackwell platform, positioning it as a comprehensive ecosystem that goes beyond traditional GPU capabilities. Nvidia will showcase the setup and configuration of its Blackwell servers, as well as the integration of various advanced components, at the Hot Chips 2024 conference.

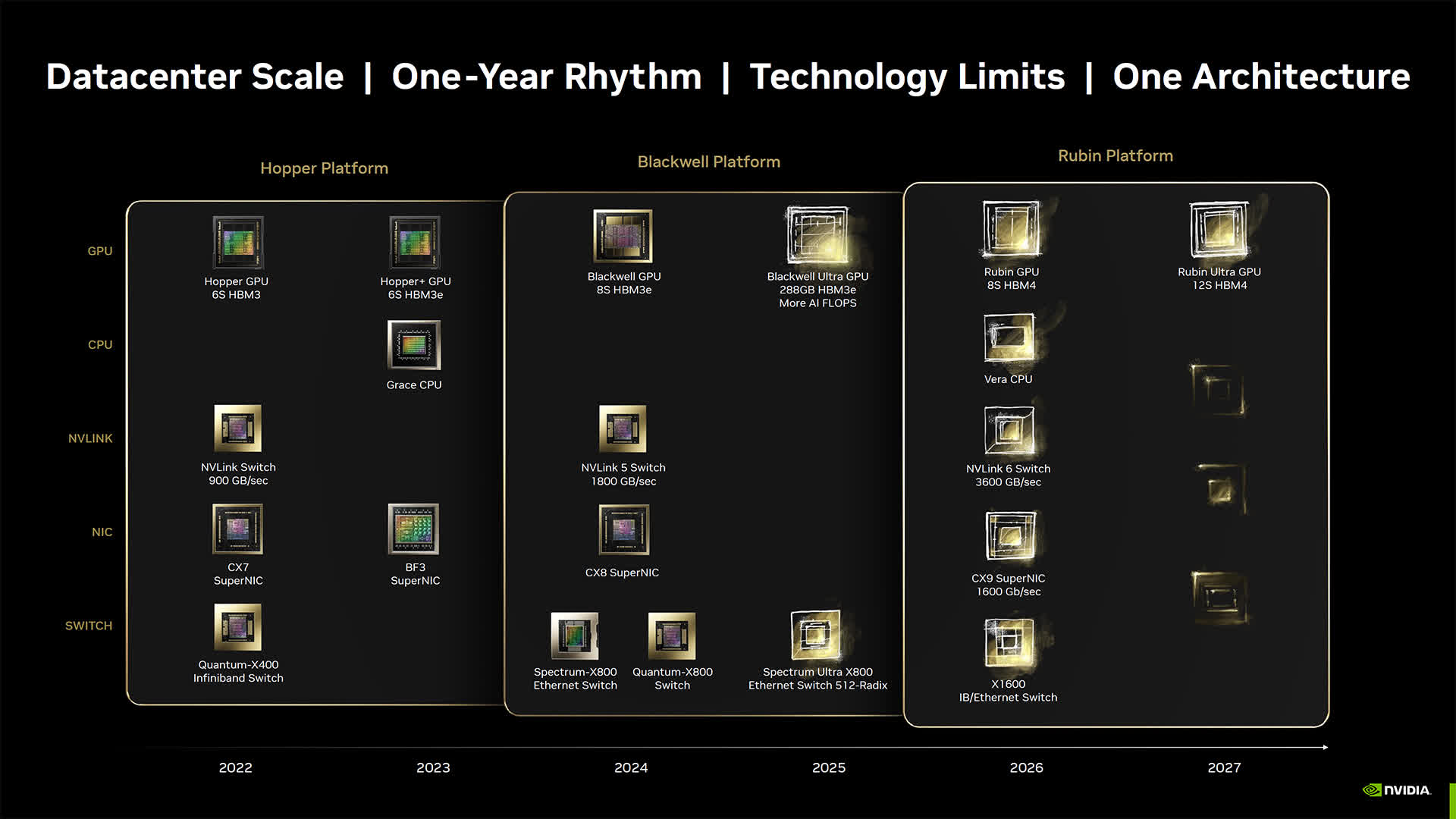

Many of Nvidia's upcoming presentations will cover familiar territory, including the company's data center and AI strategies and the Blackwell roadmap. That roadmap, which Nvidia already shared at Computex in June, calls for Blackwell Ultra next year, followed by Vera CPUs and Rubin GPUs in 2026 and Rubin Ultra in 2027.

For tech enthusiasts eager to dive deep into the Nvidia Blackwell stack and its evolving use cases, Hot Chips 2024 will provide an opportunity to explore Nvidia's latest advancements in AI hardware, liquid cooling innovations, and AI-driven chip design.

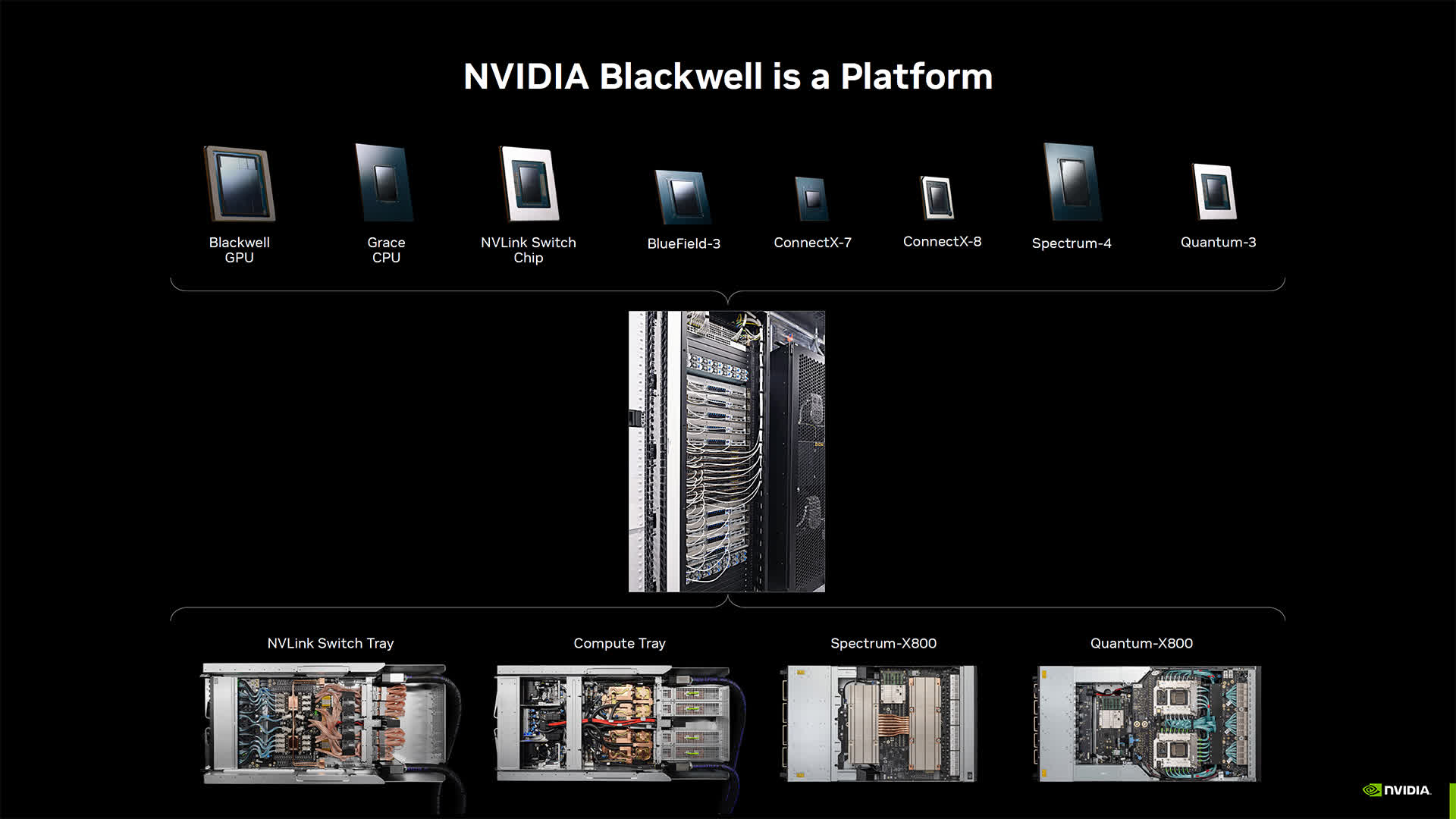

One of the key presentations will offer an in-depth look at the Nvidia Blackwell platform, which consists of multiple Nvidia components, including the Blackwell GPU, Grace CPU, BlueField data processing unit, ConnectX network interface card, NVLink Switch, Spectrum Ethernet switch, and Quantum InfiniBand switch.

Additionally, Nvidia will unveil its Quasar Quantization System, which combines algorithmic advancements, Nvidia software libraries, and Blackwell's second-generation Transformer Engine to run LLMs in FP4. Nvidia says this delivers significant bandwidth savings while maintaining accuracy comparable to FP16, a notable gain in data processing efficiency.
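FP4's bandwidth advantage comes from storing each value in 4 bits instead of FP16's 16. The sketch below is not Nvidia's Quasar system; it is a generic illustration, assuming block-scaled FP4 in the E2M1 layout used by formats such as OCP MXFP4, where a block of values shares one scale and each element is rounded to the nearest of eight representable magnitudes. All function names are hypothetical.

```python
# Magnitudes representable by FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
# Assumed element format for illustration; not confirmed as Quasar's internals.
FP4_E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_MAX = FP4_E2M1_VALUES[-1]


def quantize_block_fp4(block):
    """Quantize a block of floats to FP4 using one shared scale per block.

    Returns (scale, codes), where each code is a signed E2M1 value.
    Simplification: the scale is an arbitrary float here, whereas some
    real formats restrict it to a power of two.
    """
    amax = max(abs(x) for x in block)
    # Map the block's largest magnitude onto FP4's maximum value (6.0).
    scale = amax / FP4_MAX if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = abs(x) / scale
        # Round to the nearest representable FP4 magnitude.
        nearest = min(FP4_E2M1_VALUES, key=lambda v: abs(v - mag))
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def dequantize_block_fp4(scale, codes):
    """Recover approximate values by multiplying each code by the block scale."""
    return [c * scale for c in codes]


def weight_bytes(num_params, bits_per_param):
    """Bytes needed to store num_params values at the given bit width."""
    return num_params * bits_per_param / 8


scale, codes = quantize_block_fp4([0.12, -0.5, 0.9, 3.0])
print(scale, codes)                          # 0.5 [0.0, -1.0, 2.0, 6.0]
print(dequantize_block_fp4(scale, codes))    # [0.0, -0.5, 1.0, 3.0]
# Hypothetical 8-billion-parameter model: FP16 vs FP4 weight storage.
print(weight_bytes(8e9, 16) / weight_bytes(8e9, 4))  # 4.0
```

Note that 0.12 rounds to zero: small values lose precision, which is why finer-grained scaling and careful algorithms matter for keeping FP4 accuracy close to FP16. Scale storage also adds overhead; with one 8-bit scale per 32 elements, for example, the effective cost is 4.25 bits per value, so real savings are slightly under 4x.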

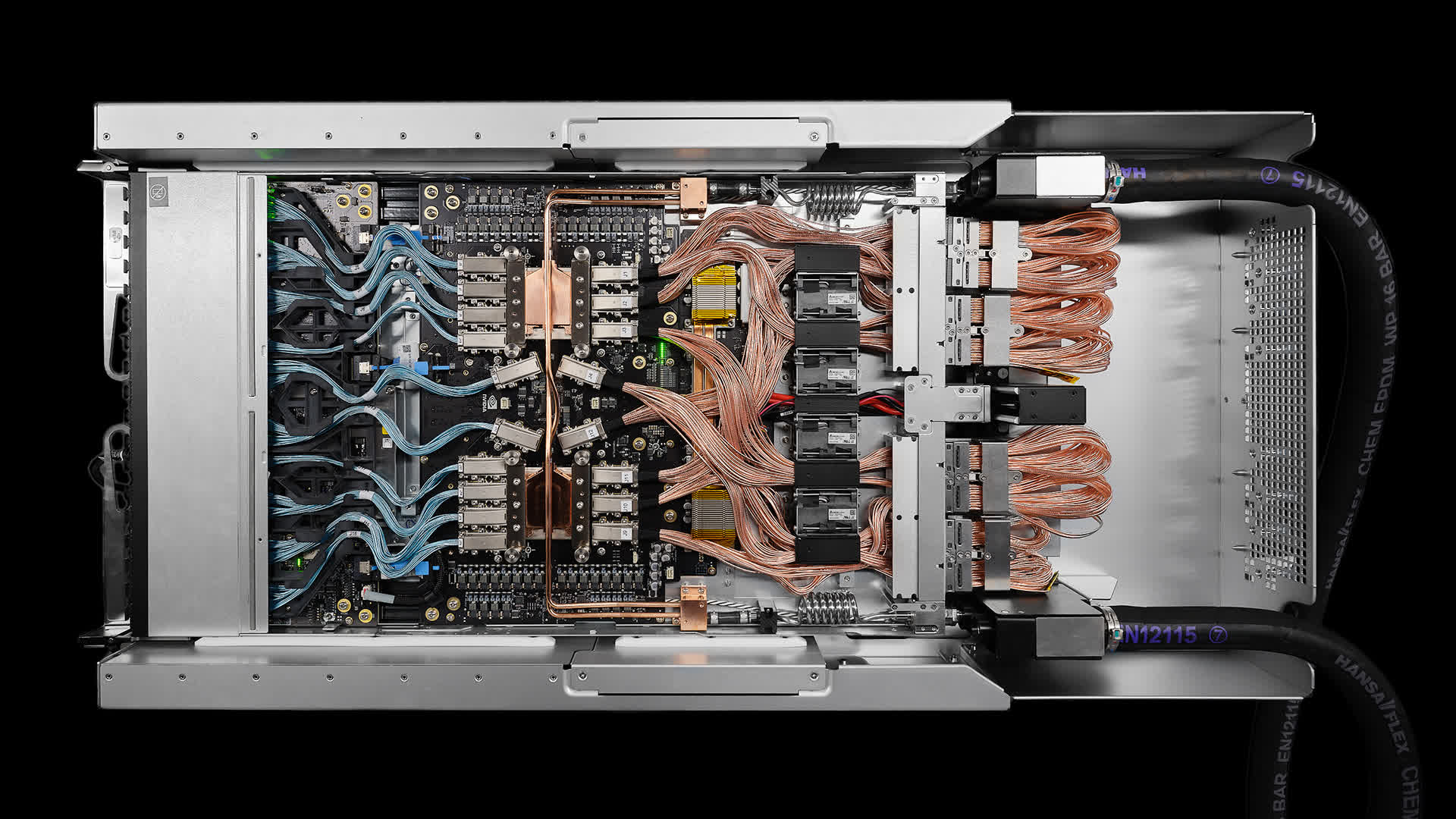

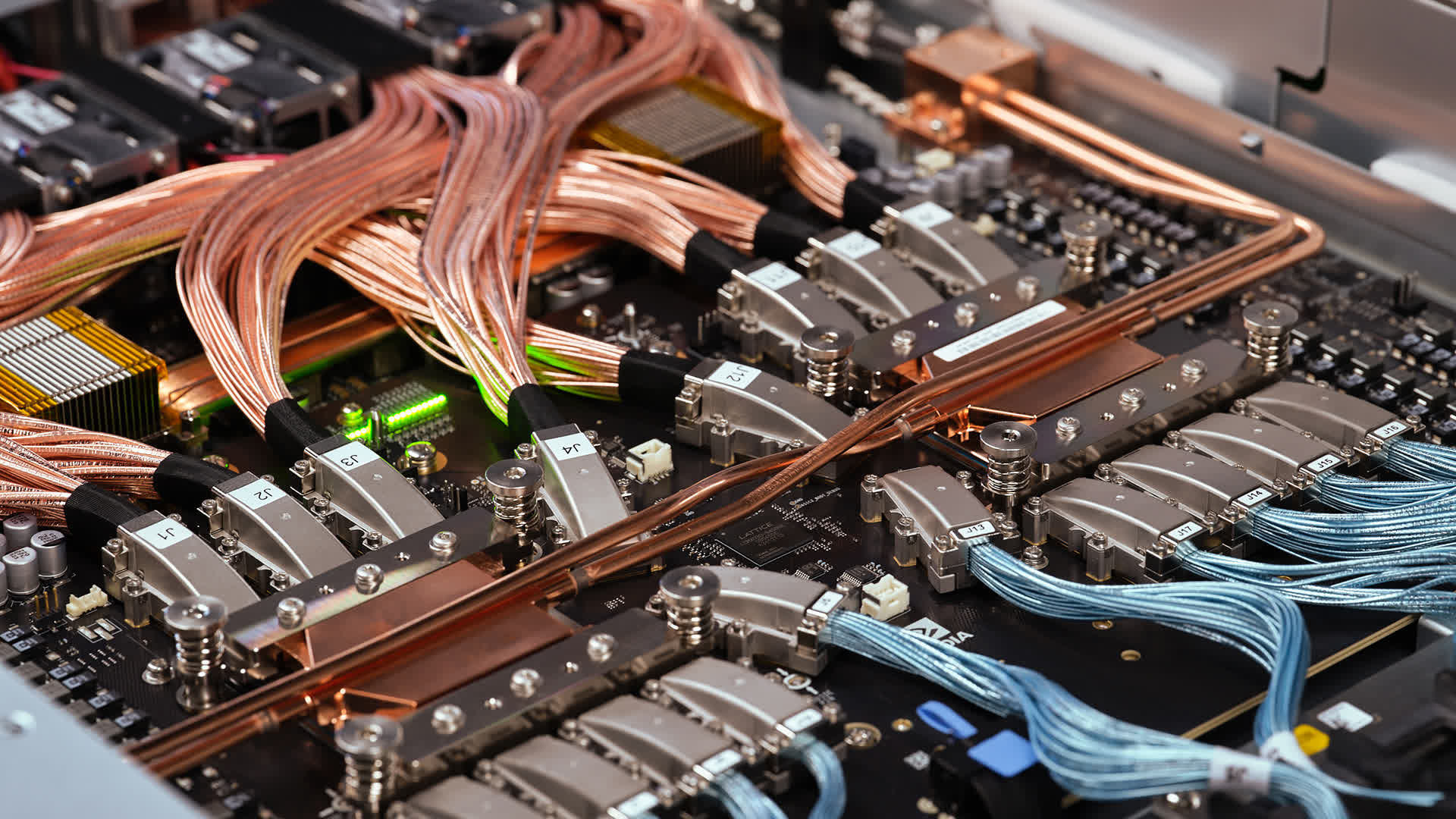

Another focal point will be the Nvidia GB200 NVL72, a multi-node, liquid-cooled, rack-scale system featuring 72 Blackwell GPUs and 36 Grace CPUs. Attendees will also get a closer look at the NVLink interconnect, which enables high-throughput GPU-to-GPU communication and low-latency inference.
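The GB200 NVL72 figures are easier to see as a small model. This sketch assumes, from Nvidia's published GB200 design rather than this article, that the rack is built from 36 GB200 superchips, each pairing one Grace CPU with two Blackwell GPUs, and that fifth-generation NVLink provides 1.8 TB/s per GPU. `RackConfig` is a hypothetical helper, not an Nvidia API.

```python
from dataclasses import dataclass

# Per-GPU bandwidth of fifth-generation NVLink in TB/s.
# Assumption taken from Nvidia's public specifications, not from the article.
NVLINK5_TBPS_PER_GPU = 1.8


@dataclass(frozen=True)
class RackConfig:
    """Hypothetical model of a rack built from GB200-style superchips."""

    superchips: int
    gpus_per_superchip: int = 2  # assumed: two Blackwell GPUs per superchip
    cpus_per_superchip: int = 1  # assumed: one Grace CPU per superchip

    @property
    def gpus(self) -> int:
        return self.superchips * self.gpus_per_superchip

    @property
    def cpus(self) -> int:
        return self.superchips * self.cpus_per_superchip

    def aggregate_nvlink_tbps(self, per_gpu_tbps: float = NVLINK5_TBPS_PER_GPU) -> float:
        """Sum of per-GPU NVLink bandwidth across every GPU in the rack."""
        return self.gpus * per_gpu_tbps


nvl72 = RackConfig(superchips=36)
print(nvl72.gpus, nvl72.cpus)                   # 72 36
print(round(nvl72.aggregate_nvlink_tbps(), 1))  # 129.6
```

The aggregate figure is a rack-wide total obtained by multiplying per-GPU bandwidth by GPU count; it is not the bandwidth any single pair of GPUs sees.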

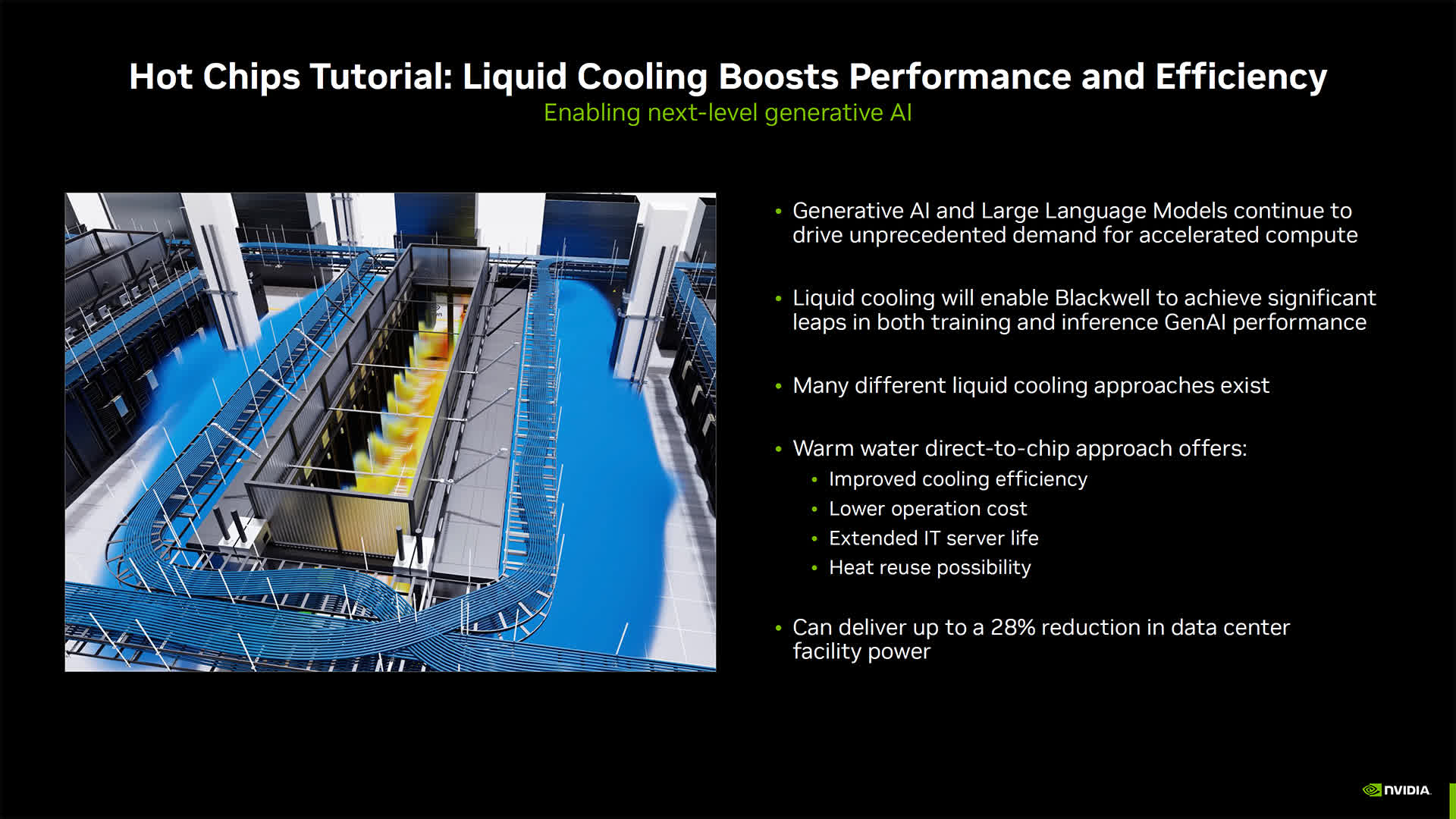

Nvidia's progress in data center cooling will also be a topic of discussion. The company is investigating warm water liquid cooling, an approach that could reduce data center power consumption by up to 28%. Besides cutting energy costs, the technique eliminates the need for below-ambient cooling hardware such as chillers, which Nvidia hopes will position it as a frontrunner in sustainable tech solutions.

In line with these efforts, Nvidia's involvement in the COOLERCHIPS program, a U.S. Department of Energy initiative aimed at advancing cooling technologies, will be highlighted. Through this project, Nvidia is using its Omniverse platform to develop digital twins that simulate energy consumption and cooling efficiency.

In another session, Nvidia will discuss its use of agent-based AI systems capable of autonomously executing tasks for chip design. Examples of AI agents in action will include timing report analysis, cell cluster optimization, and code generation. Notably, the cell cluster optimization work was recently recognized as the best paper at the inaugural IEEE International Workshop on LLM-Aided Design.

Nvidia to showcase Blackwell server installations at Hot Chips 2024