Modern graphics cards and monitors usually offer two port options for connecting them together: DisplayPort and HDMI. One of those two has now been around for nearly 20 years, yet they're both still going strong, getting faster, and offering more features with every update. High refresh rate gaming is relatively new, though, so you might not know which interface is best to use.

You may even think that it doesn't actually matter which one you pick, but that's definitely not the case. In this article we'll point out the differences between these interfaces, how the choice of monitor fits into this, and why graphics card vendors seem to prefer DisplayPort over HDMI.

When change happens slowly in PC tech

It used to be the case that if you wanted to hook up a monitor to your PC, you had to pick between two ways of doing it: the older analogue systems (VGA and S-Video) or the newer digital interface called DVI. Neither method allowed audio to be transmitted in the same cable and the connectors themselves were notoriously fiddly to use. Attaching TVs to media players wasn't much better, with the likes of the wobbly SCART connector or the multiple RGB component cables.

So in 2002, a new tech consortium comprising the likes of Hitachi and Philips got together to create an interface and connector that would carry video and audio in a single cable while remaining compatible with DVI. The system would come to be known as the High-Definition Multimedia Interface, or HDMI, promising a new era of simplicity and functionality.

But while the home entertainment industry quickly adopted HDMI, the PC world was somewhat more reluctant. ATI and Nvidia didn't routinely add the HDMI interface on their graphics cards until late 2009 and by then, there was a new kid on the block: DisplayPort.

DisplayPort was also developed by a group of tech manufacturers, but this was backed by the old VESA (Video Electronics Standards Association) body, which has been around since 1989. DisplayPort was designed with similar goals to HDMI – a single connector for video and audio, with backwards compatibility for DVI.

Both systems were created so that they could be enhanced with new features and better capabilities, without needing a new connector. At face value, they seemed to be similar: HDMI uses a total of 19 pins to send video, audio, clock rate, and other control mechanisms, whereas a DisplayPort connector packs 20 pins.

As it so happens, underneath it all, they work in very different ways.

Goodbye clock signals, hello packets

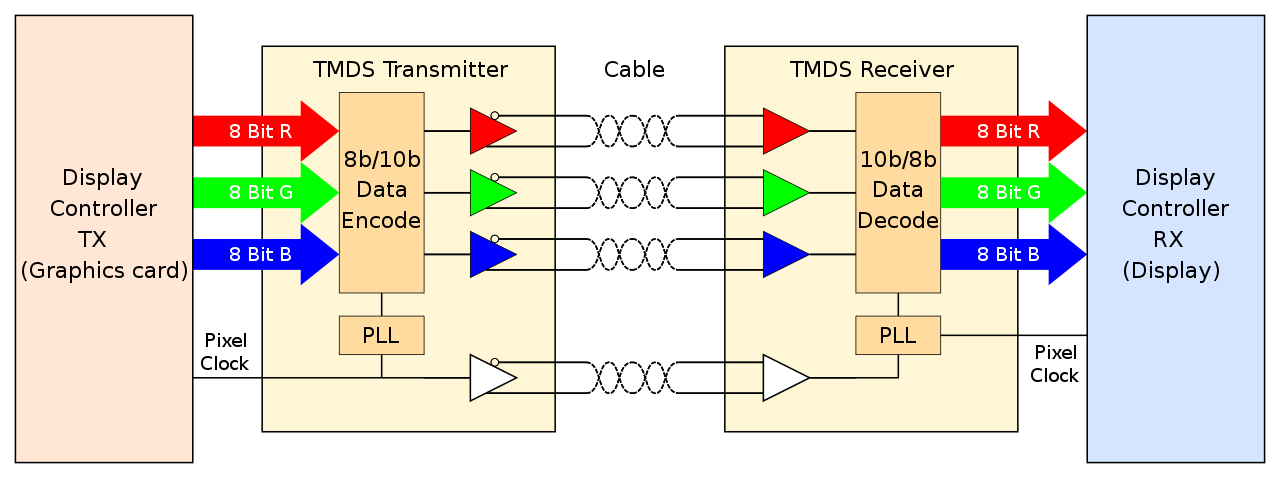

The original HDMI interface used the same signaling system as DVI – a set of four links, operating with transmission-minimized differential signaling (TMDS), sending the three color channels and the pixel clock, running at 165 MHz. That was good enough to drive a monitor at 1920 x 1200 resolution with a refresh rate of 60 Hz.
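To put some numbers on that: a pixel clock has to cover the blanking periods as well as the visible image, and each of the three TMDS data channels sends one 10-bit symbol (carrying 8 bits of pixel data) per clock. The sketch below assumes reduced-blanking timing totals of 2080 x 1235 for 1920 x 1200 at 60 Hz (our assumption, not a figure from this article). It shows why 165 MHz was enough, and why that link is usually quoted at just under 4 Gbps.

```python
# A minimal model of single-link TMDS (DVI / early HDMI): three data
# channels, one per colour, each sending one 10-bit symbol per pixel clock.
# Each 10-bit symbol carries 8 bits of pixel data.
DATA_CHANNELS = 3
SYMBOL_BITS = 10
PAYLOAD_BITS = 8


def tmds_rates_gbps(clock_hz: float) -> tuple[float, float]:
    """Return (raw, usable) data rates in Gbps for a given TMDS clock."""
    raw = clock_hz * SYMBOL_BITS * DATA_CHANNELS / 1e9
    usable = clock_hz * PAYLOAD_BITS * DATA_CHANNELS / 1e9
    return raw, usable


def pixel_clock_hz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixels per second for a timing, counting blanking as well as the image."""
    return h_total * v_total * refresh_hz


# Assumed reduced-blanking totals for 1920 x 1200 at 60 Hz: 2080 x 1235.
needed = pixel_clock_hz(2080, 1235, 60)
raw, usable = tmds_rates_gbps(165e6)
print(f"1920x1200 @ 60 Hz needs a {needed / 1e6:.1f} MHz pixel clock (limit: 165 MHz)")
print(f"165 MHz TMDS: {raw:.2f} Gbps raw, {usable:.2f} Gbps of pixel data")
```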

Audio was transmitted during the video blanking intervals, the period of time when older displays (such as cathode ray tubes, CRTs) would not display an image.

Modern TVs and monitors don't need blanking intervals, but because the transmission standards were set in stone years ago, blanking remained.

Where DVI could support higher resolutions or better refresh rates by using a second set of links, HDMI was simply updated to have faster and faster pixel clocks. Version 1.3 appeared in 2006, sporting a 340 MHz clock, and by the time version 2.0 rocked up in 2013, the pixel clock was running at up to 600 MHz.

DisplayPort (DP) doesn't use TMDS, nor does it have dedicated pins for the clock signal. You could say DP is the Ethernet of the display world – packets of data are transmitted along the wires, just like on a network, and the pixel clock rate (along with a whole host of other information) is stored in those packets. It works in a similar manner to the way PCI Express does.
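Here's a simplified illustration of how a pixel clock can travel as data rather than on a wire. In DisplayPort the source sends two numbers, Mvid and Nvid, whose ratio equals the pixel clock divided by the link symbol clock, and the monitor rebuilds the pixel clock from the link clock it already has. The Nvid value of 32768 and the 154 MHz pixel clock are illustrative assumptions, and real hardware involves more detail than this sketch.

```python
# Simplified model of DisplayPort clock recovery. There is no clock wire:
# the source sends two numbers, Mvid and Nvid, in stream attribute data,
# chosen so that Mvid / Nvid = pixel clock / link symbol clock.


def link_symbol_clock_hz(lane_gbps: float) -> float:
    # With 8b/10b coding each 10-bit symbol carries one byte, so the symbol
    # clock is the per-lane bit rate divided by 10 (2.7 Gbps -> 270 MHz).
    return lane_gbps * 1e9 / 10


def encode_m_n(pixel_clock_hz: float, ls_clock_hz: float,
               n_vid: int = 32768) -> tuple[int, int]:
    # n_vid = 32768 is an illustrative choice for this sketch.
    m_vid = round(pixel_clock_hz / ls_clock_hz * n_vid)
    return m_vid, n_vid


def recover_pixel_clock_hz(m_vid: int, n_vid: int, ls_clock_hz: float) -> float:
    # The sink rebuilds the pixel clock from the link clock it already has.
    return m_vid / n_vid * ls_clock_hz


ls_clk = link_symbol_clock_hz(2.7)   # HBR link rate, 2.7 Gbps per lane
m, n = encode_m_n(154e6, ls_clk)     # a 154 MHz pixel clock to carry
recovered = recover_pixel_clock_hz(m, n, ls_clk)
print(f"Mvid={m}, Nvid={n}, recovered clock {recovered / 1e6:.3f} MHz")
```

The rounding of Mvid is why the recovered clock lands within a kilohertz or so of the original rather than exactly on it.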

This means that DisplayPort can transmit video and audio at the same time, separately, or send something completely different. The downside to this is that DVI backwards compatibility is somewhat complex, requiring powered adapters in some cases.

The first version of DisplayPort offered notably better support for high resolutions at high refresh rates, thanks to having a maximum data transmission rate of 8.6 Gbps (compared to HDMI, which sported just under 4 Gbps at that time).

Both systems have been regularly improved, and the latest version of DisplayPort (2.0, released in 2019) has a maximum data rate up to 77 Gbps. There are some notable caveats with that, though, which we'll come to in a moment.

HDMI version 2.0, which appeared in 2013, was still using TMDS for video transmission, but raising the pixel clock beyond 600 MHz wasn't an option – something entirely different was required to improve upon the 14 Gbps data rate. Version 2.1 was launched in 2017 and took a leaf from the DisplayPort book, using the four links to send data packets rather than relying on TMDS.

This upped the maximum data rate to 42 Gbps – impressive, but still well short of what could be achieved using DisplayPort.
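The gap between a link's raw speed and the data rates quoted here comes from line coding: some of the transmitted bits are spent on encoding rather than on pixels. The raw rates and coding schemes below are the commonly published ones for each mode. The 128b/132b coding alone puts UHBR20 at about 77.6 Gbps; the 77 Gbps figure quoted in this article is a touch lower, presumably allowing for further link overheads that this sketch ignores.

```python
# (raw Gbps across all lanes, payload bits, coded bits) for each link mode.
LINKS = {
    "DP 1.0 (HBR, 4 lanes)": (10.8, 8, 10),
    "DP 1.4 (HBR3, 4 lanes)": (32.4, 8, 10),
    "DP 2.0 (UHBR20, 4 lanes)": (80.0, 128, 132),
    "HDMI 2.0 (TMDS, 600 MHz)": (18.0, 8, 10),
    "HDMI 2.1 (FRL, 4 x 12 Gbps)": (48.0, 16, 18),
}


def effective_gbps(raw_gbps: float, payload_bits: int, coded_bits: int) -> float:
    """Bandwidth left for video and audio data once line coding is paid for."""
    return raw_gbps * payload_bits / coded_bits


for name, (raw, payload, coded) in LINKS.items():
    usable = effective_gbps(raw, payload, coded)
    print(f"{name:28} {raw:5.1f} Gbps raw -> {usable:5.2f} Gbps usable")
```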

Both systems have multiple transmission modes and they don't always run at the fastest rate. A graphics card with a HDMI 2.0 output (e.g. AMD Radeon Vega series) connected to a monitor with HDMI 2.1 will only have 14 Gbps of bandwidth available (assuming the cable is up to scratch, too). The same is true if the monitor were 2.0 and the GPU 2.1: the best common transmission mode is the one that gets used.

So, to hit 77 Gbps with DisplayPort, the graphics card, monitor, and connecting cable all need to support UHBR20 (Ultra High Bit Rate); if the monitor only offers DP 1.4a, for example, then the best you can have is 26 Gbps. And given that there are no DP 2.0 displays on the market right now, 1.4 is all you've got.
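The "best common mode" rule can be sketched in a few lines. The device capability sets below are hypothetical examples (a DP 2.0 card, a DP 1.4a monitor, and a cable we've assumed is only good for UHBR10), and the per-lane rates are the standard DisplayPort link rates.

```python
# Per-lane bit rates (Gbps) for DisplayPort link modes.
LANE_GBPS = {"HBR": 2.7, "HBR2": 5.4, "HBR3": 8.1,
             "UHBR10": 10.0, "UHBR13.5": 13.5, "UHBR20": 20.0}


def usable_gbps(mode: str, lanes: int = 4) -> float:
    # HBR modes use 8b/10b coding; UHBR modes use 128b/132b.
    efficiency = 128 / 132 if mode.startswith("UHBR") else 8 / 10
    return LANE_GBPS[mode] * lanes * efficiency


def negotiate(*supported: set[str]) -> str:
    """Pick the fastest mode that every part of the chain supports."""
    common = set.intersection(*supported)
    if not common:
        raise ValueError("no common link mode")
    return max(common, key=LANE_GBPS.__getitem__)


gpu = {"HBR", "HBR2", "HBR3", "UHBR10", "UHBR13.5", "UHBR20"}  # DP 2.0 card
monitor = {"HBR", "HBR2", "HBR3"}                            # DP 1.4a monitor
cable = {"HBR", "HBR2", "HBR3", "UHBR10"}                    # assumed cable rating
mode = negotiate(gpu, monitor, cable)
print(mode, f"{usable_gbps(mode):.2f} Gbps")  # HBR3 25.92 Gbps
```

Swap in a monitor that also lists UHBR20 and the cable becomes the bottleneck instead, which is why all three parts matter.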

Choice: DisplayPort, for sheer bandwidth, but HDMI 2.1 is very good.

As we'll soon see, cables turn out to be rather important.

Numbers don't lie, right?

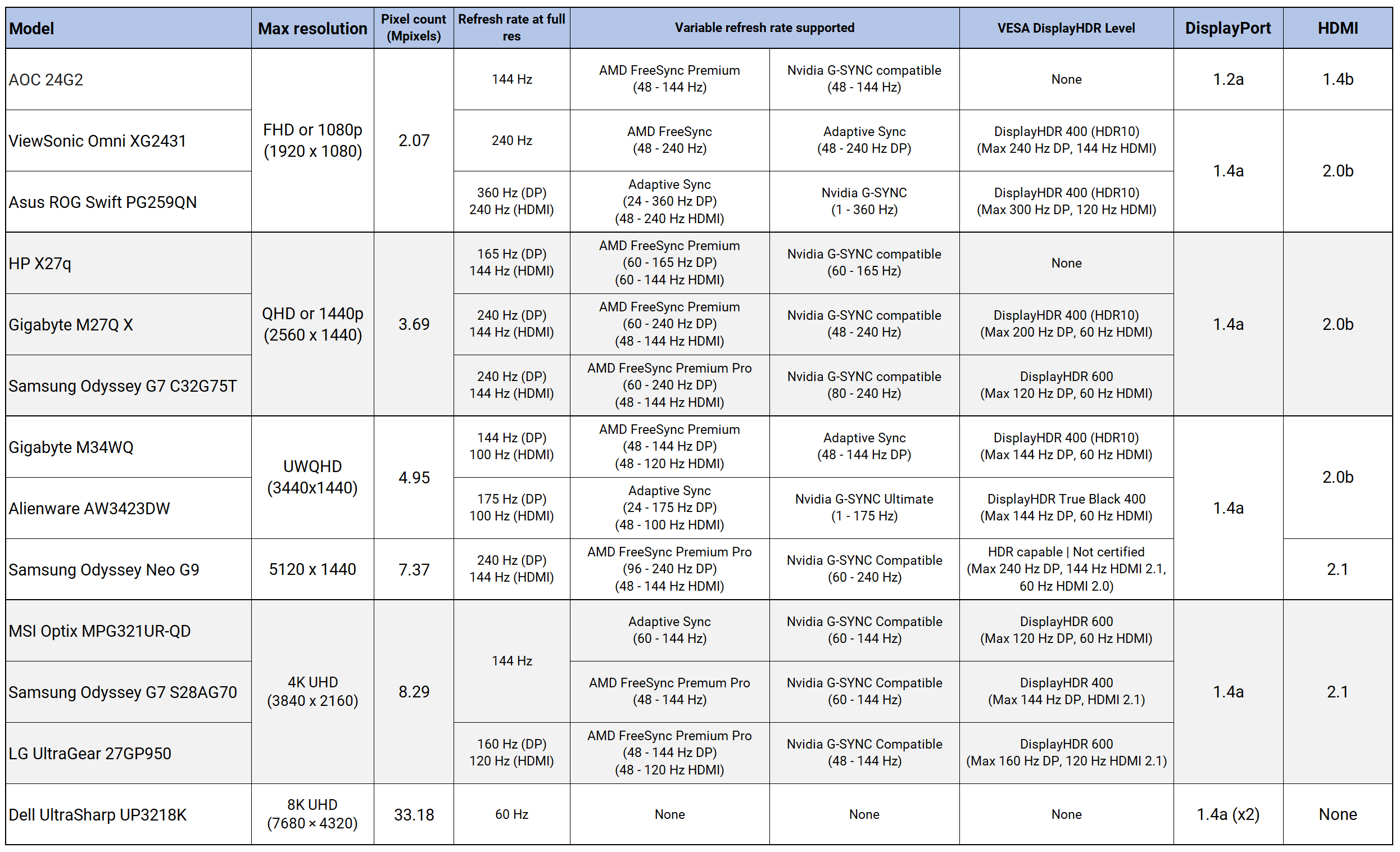

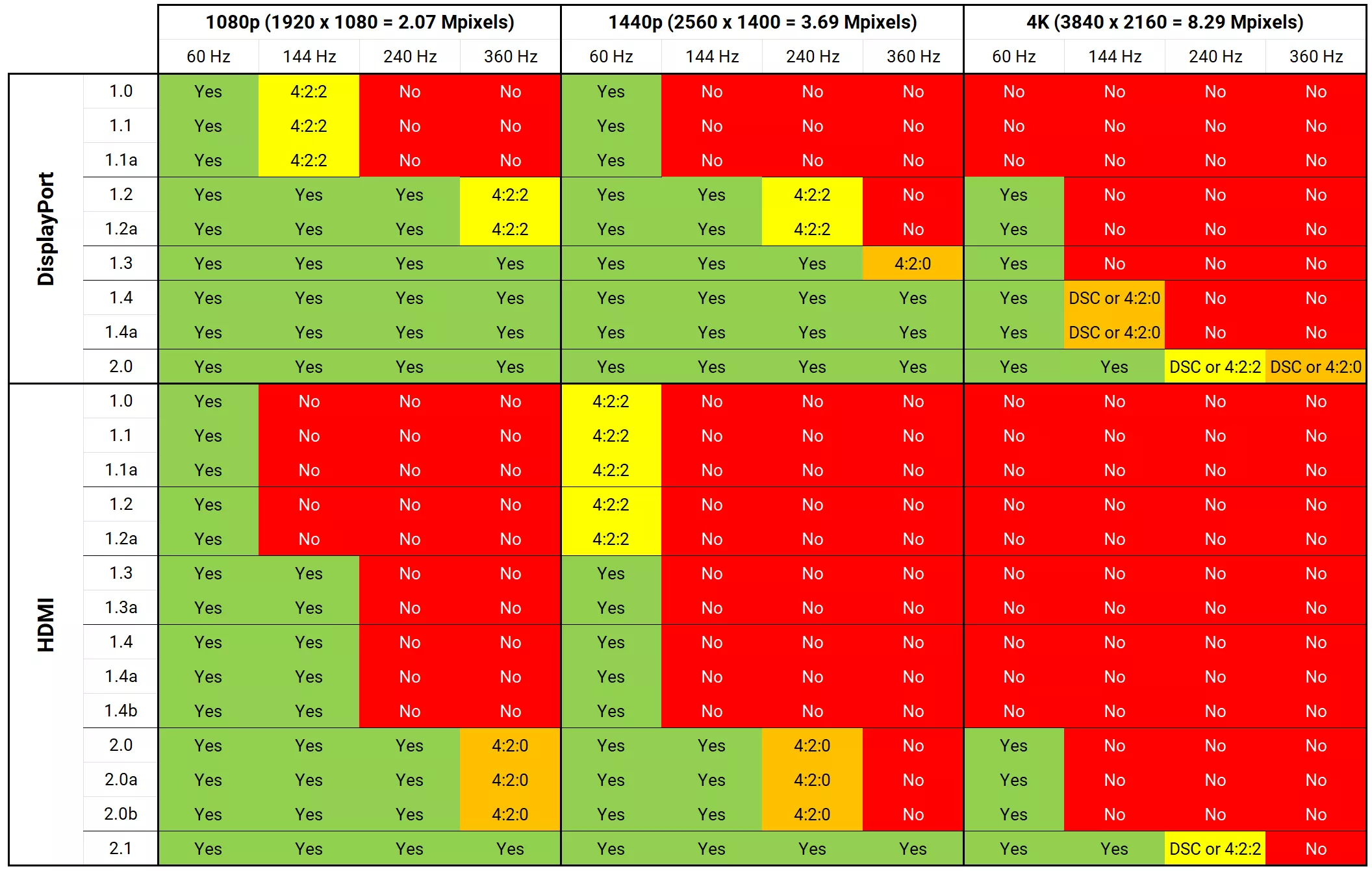

To highlight the differences between what can be achieved via the two systems, let's take a look at some common gaming resolutions and a handful of refresh rates.

There are plenty of monitors on the market now that support many of these configurations (none, though, that are 4K 240+ Hz!), but if you have a resolution or refresh rate that isn't listed below, you can use our forums to ask for guidance.

An entry of 'Yes' in the table means that the display system supports that resolution and refresh rate, without resorting to the use of image compression. It's worth noting that a 'No' entry doesn't necessarily mean that it won't work – it's just that there's insufficient transmission bandwidth for that resolution and refresh rate, using industry standard display timings.
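Each 'Yes' or 'No' boils down to comparing a mode's bandwidth needs, blanking included, against a link's usable rate. Here's a rough sketch. Its blanking model is our own simplified take on VESA's reduced-blanking timings (80 extra pixels per line, at least 460 µs of vertical blank per frame), so treat its numbers as estimates rather than the exact figures behind the table.

```python
import math

# Usable link bandwidth in Gbps (after line coding), for comparison.
LINKS_GBPS = {"HDMI 2.0": 14.4, "DP 1.4": 25.92, "HDMI 2.1": 42.67}


def required_gbps(width: int, height: int, refresh_hz: float,
                  bits_per_pixel: int = 24) -> float:
    """Estimate the bandwidth an uncompressed video mode needs.

    Simplified reduced-blanking assumption: 80 extra pixels per line, plus
    enough extra lines to give at least 460 us of vertical blanking.
    """
    h_total = width + 80
    active_fraction = 1 - 460e-6 * refresh_hz   # share of each frame that is visible
    v_total = math.ceil(height / active_fraction)
    pixel_clock = h_total * v_total * refresh_hz
    return pixel_clock * bits_per_pixel / 1e9


def fits(width: int, height: int, refresh_hz: float,
         bits_per_pixel: int, link_gbps: float) -> bool:
    return required_gbps(width, height, refresh_hz, bits_per_pixel) <= link_gbps


for w, h, hz in [(1920, 1080, 240), (2560, 1440, 144), (3840, 2160, 144)]:
    need = required_gbps(w, h, hz)
    verdicts = ", ".join(f"{name}: {'Yes' if need <= cap else 'No'}"
                         for name, cap in LINKS_GBPS.items())
    print(f"{w}x{h} @ {hz} Hz needs ~{need:.1f} Gbps -> {verdicts}")
```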

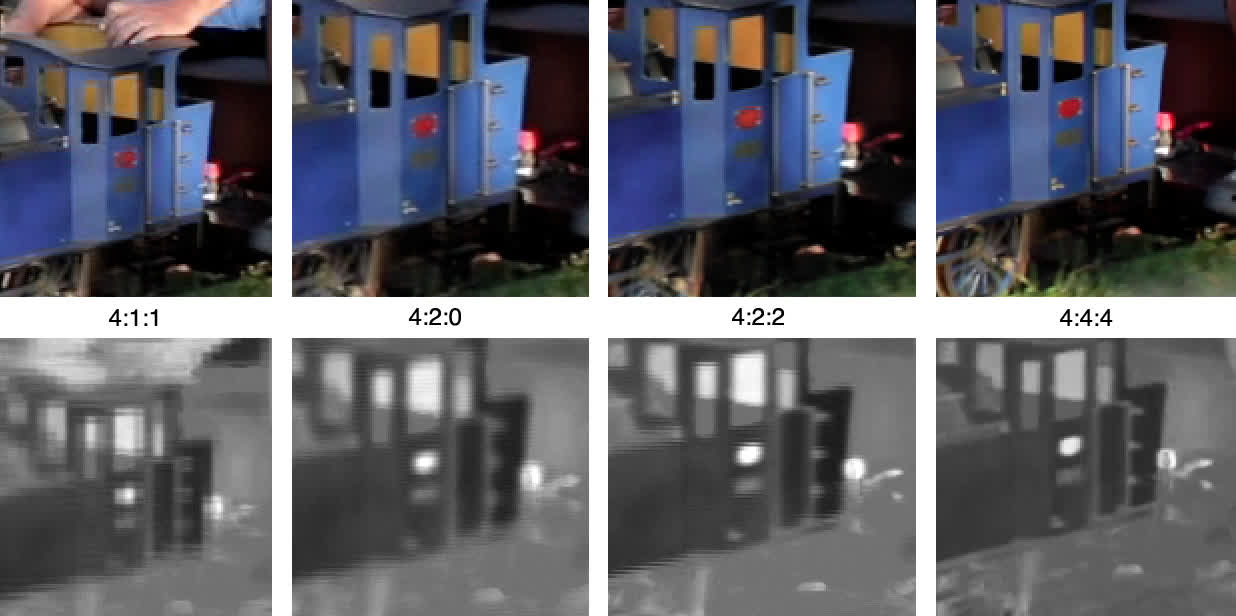

DisplayPort and HDMI support the use of YCbCr chroma subsampling, which reduces the bandwidth requirements for the video signal, at the cost of image quality.

4:2:2 drops the bandwidth requirement by a third, whereas 4:2:0 almost halves it. For the very best pictures on your monitor, you don't want to be using compression, but depending on what setup you've got, you might not have any option.
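In terms of raw pixel data, the savings work out as follows; the real-world reduction can differ slightly once blanking and link overheads are counted. This is a minimal sketch assuming 8 bits per channel unless stated otherwise.

```python
def bits_per_pixel(scheme: str, bits_per_channel: int = 8) -> float:
    """Average bits per pixel for a YCbCr sampling scheme.

    Across a 2 x 2 block of four pixels there are always four luma (Y)
    samples; 4:4:4 keeps four each of Cb and Cr, 4:2:2 keeps two of each,
    and 4:2:0 keeps one of each.
    """
    chroma_each = {"4:4:4": 4, "4:2:2": 2, "4:2:0": 1}[scheme]
    samples = 4 + 2 * chroma_each
    return samples * bits_per_channel / 4


full = bits_per_pixel("4:4:4")
for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    bpp = bits_per_pixel(scheme)
    print(f"{scheme}: {bpp:.0f} bits per pixel ({bpp / full:.0%} of 4:4:4)")
```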

DisplayPort 1.4 onward and HDMI 2.1 also support Display Stream Compression (DSC), a VESA-developed algorithm that can cut the bandwidth requirement at least as much as 4:2:0 does (by up to a factor of three), but without a noticeable loss in image quality.

DP vs HDMI resolution/refresh/spec comparison table

At face value, DisplayPort is clearly the superior system, as versions 1.4 and 2.0 appear to be significantly better than any version of HDMI. However, there's more to it than just the numbers.

For example, as of writing this, there are no graphics cards with a DisplayPort 2.0 output – only AMD's Radeon 600M series laptop chips have one. But don't forget that there are no DP 2.0 monitors on the market, and there aren't likely to be any for a while yet. Monitors are all still using version 1.4, although that's still more than capable, as shown in the table.

If you've got an older graphics card with DP 1.4 sockets (e.g. GeForce GTX 10 or Radeon 500 series), it won't offer DSC, so you're stuck with using 4:2:0 subsampling at the highest resolutions. This is a common issue with products being DisplayPort or HDMI compliant – there's no requirement for them to offer every feature that a particular revision has.

HDMI 2.1 does have better support on the GPU front: all of AMD's Radeon RX 6000 cards have it, as does Nvidia's RTX 30 lineup. But once again, there are relatively few monitors that use it – typically just 4K models do.

Cable Length

There's another key aspect to consider, which puts HDMI in a better light: it was developed primarily for home entertainment systems, not PCs, and supports far longer cables than DisplayPort does, especially at high resolutions and refresh rates.

For example, at 4K 60 Hz, it's generally recommended that with DP 1.4 you keep the cable length under 6 feet, even if it's a high quality one. Apply the same display configuration to a HDMI setup and you're looking at up to 20 feet.

If you're using the DP cable that came bundled with your monitor and you've noticed that the setup is somewhat unstable, you might find that switching to HDMI solves all your problems. You can buy active DisplayPort cables, which have powered circuitry to boost the signal if you need to go really long, but they are expensive.

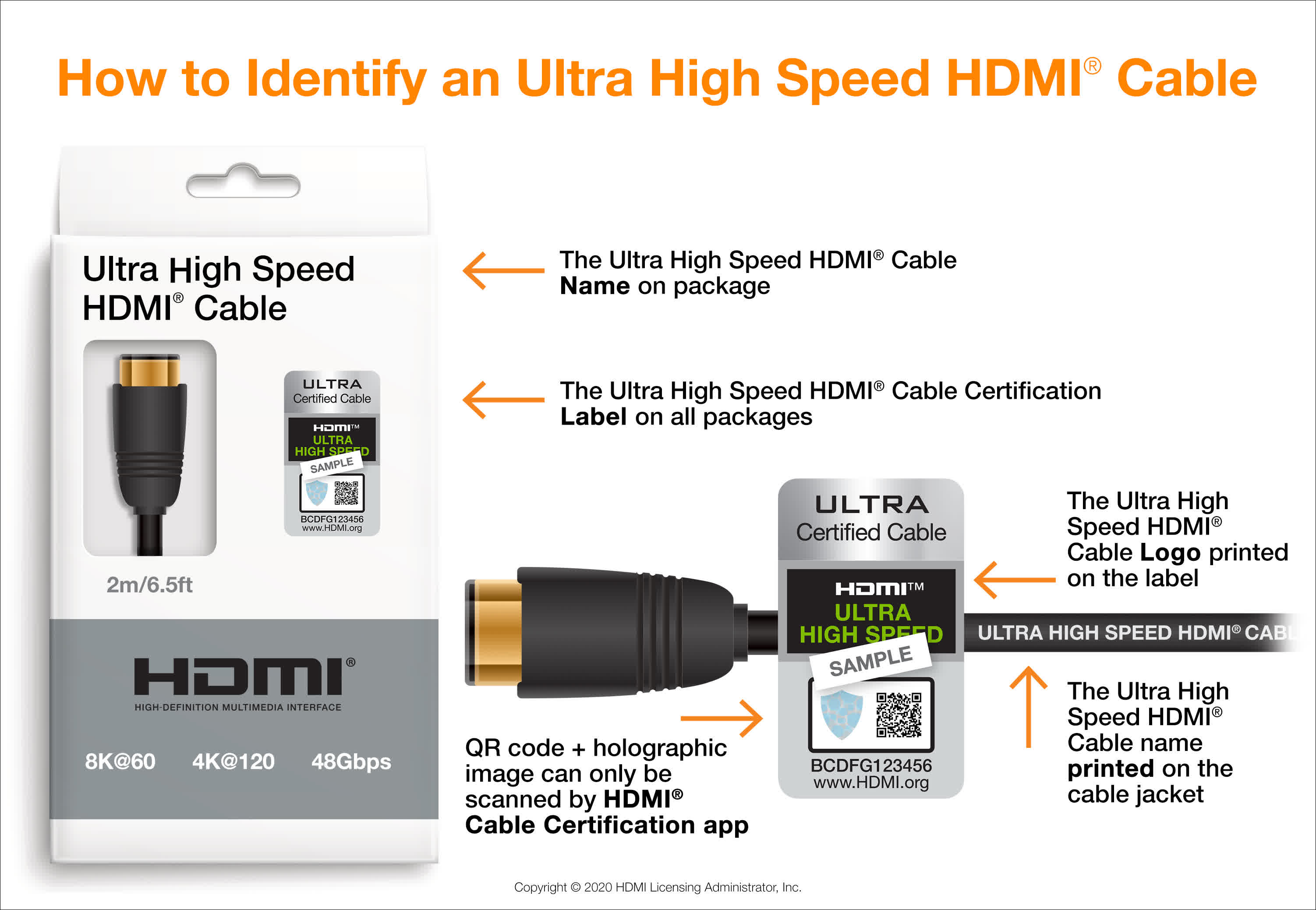

Although VESA and the HDMI Group have a certified labeling system, supposedly to indicate clearly what resolution and refresh rate a cable supports, it isn't policed in any way, nor do we see much in the way of legal action being taken against falsely labelled products.

... which does make choosing the right cable something of a lottery.

It's about striking a balance: ignore the cheap, never-heard-of-before brands that fill the likes of Amazon's shelves, and stick to known brands (e.g. Lindy, Belkin, Snowkids, True HQ). Conversely, don't be suckered in by claims of better performance with the very expensive ones – $20 for a 7-foot DisplayPort cable, rated for 8K at 60 Hz, is more than enough.

Choice: DisplayPort, with a short, high quality cable.

More features means more problems

Monitors with HDR enabled (as long as it's supported by the graphics card, too) use 10 bits per color channel, rather than the usual 8, to widen the range of light and color levels shown on the screen. Those extra bits eat into the available transmission bandwidth, which in turn cuts down on the maximum resolution and refresh rate combination possible.
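Going from 24 to 30 bits per pixel means 25% more data for every frame, so on a fixed link the highest usable refresh rate drops. The sketch below estimates that ceiling for a 1440p panel on DP 1.4, using our own simplified reduced-blanking assumption (80 extra pixels per line, at least 460 µs of vertical blank). It won't reproduce any particular monitor's limits, which also depend on the manufacturer's firmware and timings.

```python
import math


def max_refresh_hz(width: int, height: int, bits_per_pixel: int, link_gbps: float) -> int:
    """Highest whole refresh rate (1 to 1000 Hz) whose estimated bandwidth fits.

    Blanking is a simplified reduced-blanking estimate (assumption): 80 extra
    pixels per line and enough extra lines for at least 460 us of vertical blank.
    """
    best = 0
    for hz in range(1, 1001):
        v_total = math.ceil(height / (1 - 460e-6 * hz))
        needed_gbps = (width + 80) * v_total * hz * bits_per_pixel / 1e9
        if needed_gbps <= link_gbps:
            best = hz
    return best


DP_1_4_GBPS = 25.92  # usable bandwidth after 8b/10b coding
for label, bpp in (("8-bit (SDR)", 24), ("10-bit (HDR)", 30)):
    ceiling = max_refresh_hz(2560, 1440, bpp, DP_1_4_GBPS)
    print(f"2560x1440 over DP 1.4, {label}: up to ~{ceiling} Hz")
```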

For example, the Samsung Odyssey G7 27" has a HDR maximum refresh rate of 120 Hz when using DisplayPort, but just a miserly 60 Hz with HDMI – and don't forget that one of its key selling features is its 240 Hz max rate.

It's a similar situation with Variable Refresh Rate (VRR), a technology that lets the monitor's refresh rate change dynamically, over a specific range, so that each screen refresh coincides with the GPU swapping its frame buffers (the chunks of memory that it renders everything into). This gives you the tear-free benefit of vertical synchronization (aka vsync), without the performance problems that vsync normally causes on a fixed refresh rate display.

The result is a seamless display on the monitor, with no tearing running across the image; couple that with a high refresh rate and it's a delight on the eyes.
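A rough way to see the difference: with vsync on a fixed-refresh screen, a finished frame has to wait for the next refresh, so its time on screen rounds up to a whole number of refresh intervals. With VRR, the screen refreshes as soon as the frame is ready. This sketch assumes frame times stay above the panel's minimum VRR rate (what happens below it is a separate matter) and ignores scanout and driver details.

```python
import math


def shown_with_fixed_vsync(render_ms: float, refresh_hz: float) -> float:
    """Fixed refresh + vsync: a frame waits for the next refresh tick."""
    interval = 1000 / refresh_hz
    return math.ceil(render_ms / interval) * interval


def shown_with_vrr(render_ms: float, max_hz: float) -> float:
    """VRR: refresh as soon as the frame is ready, but no faster than the panel allows."""
    return max(render_ms, 1000 / max_hz)


# A GPU taking 8 ms per frame (125 fps) on a 144 Hz monitor:
fixed = shown_with_fixed_vsync(8, 144)
vrr = shown_with_vrr(8, 144)
print(f"fixed 144 Hz + vsync: {fixed:.2f} ms per frame ({1000 / fixed:.0f} fps)")
print(f"VRR: {vrr:.2f} ms per frame ({1000 / vrr:.0f} fps)")
```

In this example vsync on a fixed 144 Hz display drags the 125 fps the GPU can manage down to an effective 72 fps, while VRR keeps all of it.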

AMD and Nvidia have their own versions of VRR and it's something we've looked at before, but whichever one you go with, the range of rates available is almost always narrower with HDMI compared to DisplayPort.

Sticking with the Odyssey G7 again (HDR disabled), the VRR range is 60 to 240 Hz with DisplayPort, but 48 to 144 Hz with HDMI.

We've taken a smattering of monitors currently on the market, so you can see how the two display connection systems handle the use of HDR or VRR.

Take a look at the three 4K monitors shown above and it's clear that using HDMI 2.1 alone doesn't always guarantee that you'll be able to max out all of the features, resolution, and refresh rate. It's fair to say that some manufacturers are somewhat coy when it comes to being upfront about what their products are actually capable of.

DisplayHDR 400 and 600 aren't really worth the cost in refresh rate, or even worth using for that matter, but VRR definitely is. It nearly always works at rates lower than the minimum specified, and even if your PC can't render graphics fast enough to match the highest refresh rate, FreeSync or G-Sync will ensure that the monitor displays consistently smooth images.
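One common way VRR keeps working below a monitor's minimum rate is frame multiplication, which AMD calls Low Framerate Compensation (LFC): each frame is shown two or more times so the panel's refresh rate lands back inside its VRR range. The sketch below is a simplification of our own; the exact rules AMD and Nvidia use aren't modelled here. The ranges are the Odyssey G7 figures quoted above.

```python
def lfc_refresh(fps: float, vrr_min: float, vrr_max: float) -> tuple[int, float]:
    """Return (times each frame is shown, resulting panel refresh rate).

    Simplified frame multiplication: below the VRR minimum, show each frame
    k times, picking the smallest k that lifts fps * k back into range.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= vrr_min:
        return 1, min(fps, vrr_max)   # inside (or above) the range: no repeats
    k = 2
    while fps * k < vrr_min:
        k += 1
    if fps * k > vrr_max:
        raise ValueError("VRR range too narrow to multiply frames into it")
    return k, fps * k


print(lfc_refresh(25, 60, 240))   # DisplayPort range: (3, 75)
print(lfc_refresh(30, 48, 144))   # HDMI range: (2, 60)
```

This is also why a wide VRR range helps: the wider it is, the more likely a multiple of a low frame rate fits inside it.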

Choice: DisplayPort, easily.

But I don't have DisplayPort or HDMI...

You may be reading this on a computer that doesn't have any obvious DisplayPort or HDMI ports. For example, Apple's latest MacBook Air sports just two Thunderbolt ports, which use the USB-C connector.

Thunderbolt actually combines a PCI Express and DisplayPort interface in one, with the link as a whole offering up to 40 Gbps of transmission bandwidth. That's on a par with HDMI 2.1 and it's actually good enough for a maximum resolution of 6016 x 3384 at 60 Hz.
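A quick sanity check on that 6K figure, counting only the visible pixels (blanking and protocol overheads are ignored, so this is a lower bound on what the link must carry). We're not claiming anything here about how Apple actually drives such a display.

```python
def active_pixel_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    # Visible pixels only: blanking and link overheads are ignored.
    return width * height * refresh_hz * bits_per_pixel / 1e9


for bpp in (24, 30):
    rate = active_pixel_gbps(6016, 3384, 60, bpp)
    print(f"6016x3384 @ 60 Hz, {bpp} bits per pixel: {rate:.1f} Gbps")  # 29.3, then 36.6
```

Even before overheads, 10-bit color at that resolution uses over 90% of a 40 Gbps link.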

If you want to take advantage of VRR, you'll need to check the device's specifications carefully. MacBooks from the past 3 years support it (though it goes by the VESA name of Adaptive Sync), but only with DisplayPort – it's not going to work with HDMI or DP-to-HDMI adapters.

Phones, tablets, and lightweight laptops may come with nothing more than a USB-C port. Fortunately, if you want to connect to an external display, there's a handy feature in that technology (although it's not always implemented by the manufacturer) simply titled DisplayPort/HDMI Over USB-C.

This system takes advantage of USB's Alternate Mode, allowing for a DP/HDMI transmission to be sent through a USB 3.1 interface – even while using it as a normal USB connection. There's a little less bandwidth available, compared to a regular DP/HDMI connection, so you won't be able to hook a phone up to a 4K 144 Hz monitor and run it at maximum settings. But it will be fine for 4K 60 Hz or 1440p 120 Hz.
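The bandwidth squeeze comes from sharing the connector's four high-speed lanes. The sketch below assumes that DisplayPort either takes all four lanes or takes two while the other two keep carrying USB 3.x data, that each lane runs at the HBR3 rate of 8.1 Gbps (real devices may run slower), and it uses approximate reduced-blanking timing totals that are our own assumptions.

```python
# Sketch of DisplayPort Alt Mode over USB-C. Assumption: the connector's four
# high-speed lanes are either all given to DisplayPort, or split two for
# DisplayPort and two for ordinary USB 3.x data.
LANE_GBPS = 8.1  # assumed HBR3 per-lane rate; real devices may run slower


def alt_mode_usable_gbps(dp_lanes: int, lane_gbps: float = LANE_GBPS) -> float:
    if dp_lanes not in (2, 4):
        raise ValueError("this sketch only models 2 or 4 DisplayPort lanes")
    return dp_lanes * lane_gbps * 8 / 10  # 8b/10b line coding


def mode_gbps(h_total: int, v_total: int, refresh_hz: float, bpp: int = 24) -> float:
    # Bandwidth for a timing, using total (visible + blanking) pixel counts.
    return h_total * v_total * refresh_hz * bpp / 1e9


# Assumed, approximate reduced-blanking totals for each mode.
modes = {
    "3840x2160 @ 60 Hz": (4000, 2222, 60),
    "2560x1440 @ 120 Hz": (2720, 1525, 120),
}
shared = alt_mode_usable_gbps(2)  # DisplayPort alongside USB 3.x data
for name, timing in modes.items():
    need = mode_gbps(*timing)
    print(f"{name}: needs {need:.2f} Gbps, 2-lane budget {shared:.2f} Gbps, fits={need <= shared}")
```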

Choice: Tie, although it should be DisplayPort if you're using Apple products.

Don't forget the graphics card, too!

When it comes to high refresh rate gaming, the choice between DisplayPort and HDMI also depends on the graphics card – if the GPU isn't up to churning out your favorite games at 144 fps or more, then which socket you connect your monitor to isn't all that important.

Take our recent look at the PC version of Spider-Man Remastered, for example. At 4K, set to Medium Quality, there wasn't a single graphics card whose 1% Low was 144 fps or more. Nvidia's RTX 3090 came close, though, and that card and AMD's Radeon 6950 XT are the only sensible choices for gaming at 4K 120 Hz or more. They may well struggle to hit those rates in every game, but VRR will keep images tear-free and you'll still get a decent frame rate.

Coming further down the price range (i.e. into the realms of normality), the Radeon 6700 XT and GeForce RTX 3070 are both excellent cards, and are good enough for 1440p at 120 Hz or 1080p at 240 Hz. This is, of course, highly dependent on the game and what graphics settings are being used – high refresh gaming nearly always involves making some compromises somewhere along the line.

The Radeon 6600 XT and GeForce RTX 3060 are best suited to 1080p gaming, but if you're after refresh rates (with VRR enabled) greater than 100 Hz, you'll have to drop a few graphics settings in many games.

This is especially true if you're aiming for something like 1080p at 360 Hz. Such a configuration is very much the preserve of competitive esports, and even with the detail levels cranked right down, you're almost certainly going to need a top end PC, replete with a $1,000+ graphics card, to stand any chance of running at that rate.
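The reason is the frame-time budget: to keep up with the display, the GPU has to finish every frame within one refresh interval, and that window shrinks quickly as the refresh rate climbs.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time the GPU has to finish each frame to keep pace with the display."""
    return 1000 / refresh_hz


for hz in (60, 120, 144, 240, 360):
    print(f"{hz:>3} Hz: {frame_budget_ms(hz):5.2f} ms per frame")
```

At 360 Hz that's under 3 ms per frame, a sixth of the time available at 60 Hz.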

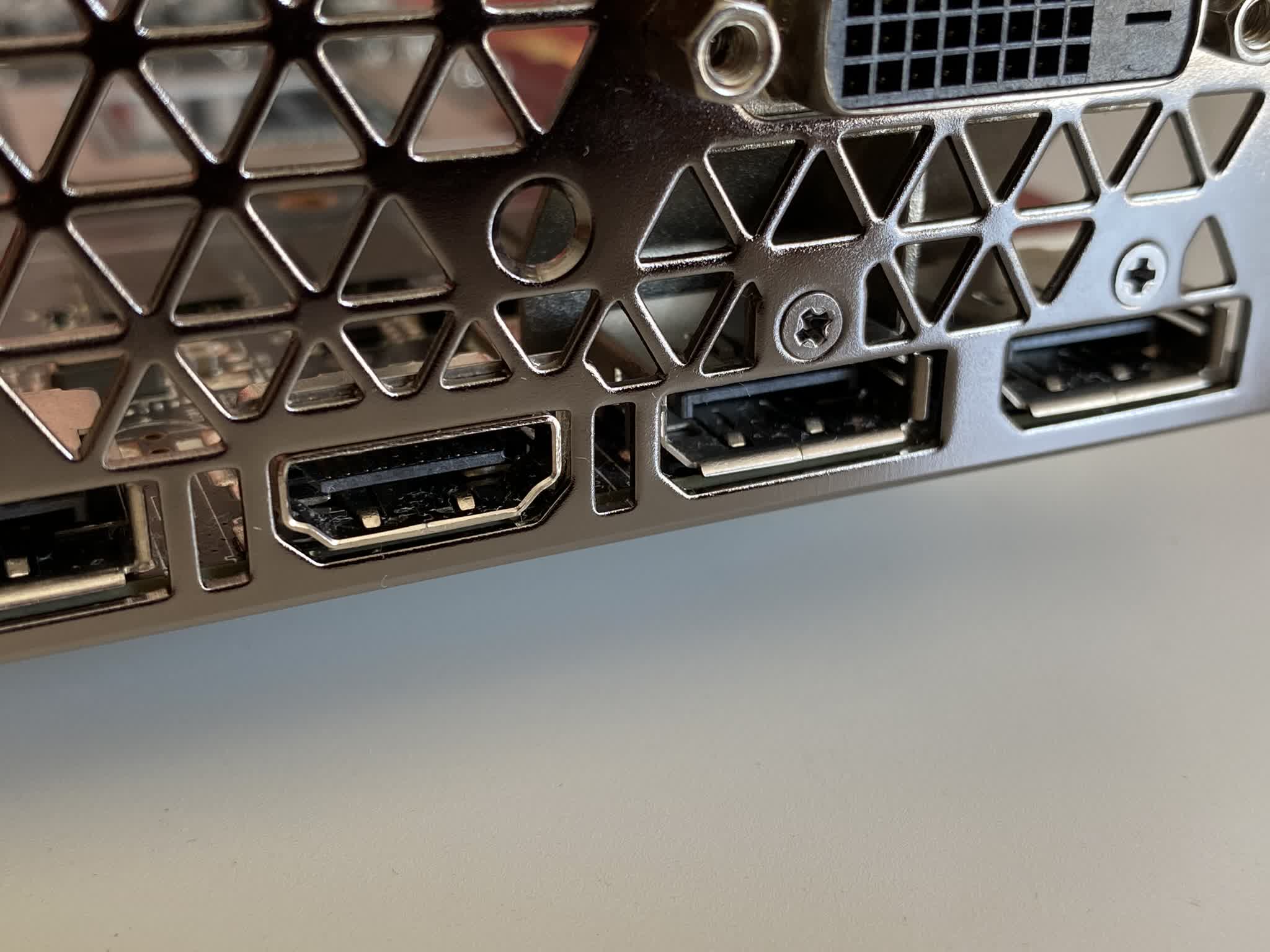

But whatever graphics card you have, you'll notice one thing in common with them all: the number of DisplayPort ports compared to HDMI ones. GPUs released today typically sport three DP sockets and just the one HDMI. But why?

Remember that HDMI was slow to be adopted in the world of PCs, and that by the time the likes of VGA and DVI were being replaced, DisplayPort was available and far superior to HDMI? Today, HDMI is still seen as the preserve of televisions and consoles, hence why GPU vendors still prefer to load up their models with multiple DP ports.

Which is perfect if you want to set up a multi-monitor, high refresh rate gaming system (for flight or motorsport sims, for example). But do note that the display engine of the GPU can only output so many pixels per second – an Nvidia GeForce RTX 3090 supports a maximum resolution of 7680 x 4320 (33 Mpixels) HDR at 60 Hz.

All those pixels can be sent to a single monitor or distributed across multiple ones – so you could easily have three 1440p monitors running at 120 Hz, with the aforementioned graphics card (assuming the game can cope with that resolution).
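Treating the display engine's limit as a simple pixels-per-second budget (a simplification of ours, not how the hardware is specified), the triple 1440p example checks out comfortably.

```python
def pixels_per_second(width: int, height: int, refresh_hz: int, count: int = 1) -> int:
    return width * height * refresh_hz * count


budget = pixels_per_second(7680, 4320, 60)                 # one 8K 60 Hz output
three_1440p = pixels_per_second(2560, 1440, 120, count=3)  # triple-screen setup
print(f"{three_1440p / budget:.0%} of the 8K 60 Hz pixel budget")  # 67%
```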

The monitors themselves could be attached to individual DP sockets or, using DisplayPort's daisy chaining feature, hooked up to just one port on the card, with each monitor passing the signal on to the next one in the chain. Nvidia's cards only support a maximum of four monitors, though, unlike AMD's, which support up to six.
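The catch with daisy chaining is that every monitor in the chain shares the bandwidth of that one port. This sketch adds up each stream's needs and compares the total against a DP 1.4 link. The timing totals are approximate reduced-blanking figures we've assumed, and any extra overhead from carrying multiple streams is ignored.

```python
# DP 1.4 (HBR3, 4 lanes) usable bandwidth after 8b/10b coding.
LINK_GBPS = 32.4 * 8 / 10


def stream_gbps(h_total: int, v_total: int, refresh_hz: float, bpp: int = 24) -> float:
    # One monitor's bandwidth, from total (visible + blanking) pixel counts.
    return h_total * v_total * refresh_hz * bpp / 1e9


def chain_fits(streams: list[tuple[int, int, float]],
               link_gbps: float = LINK_GBPS) -> tuple[bool, float]:
    """Do all the daisy-chained streams fit in one port's bandwidth?"""
    total = sum(stream_gbps(*s) for s in streams)
    return total <= link_gbps, total


# Assumed totals: 2720 x 1481 for 1440p at 60 Hz, 2640 x 1543 for 1440p at 144 Hz.
for label, chain in (("two 1440p 60 Hz", [(2720, 1481, 60)] * 2),
                     ("two 1440p 144 Hz", [(2640, 1543, 144)] * 2)):
    ok, total = chain_fits(chain)
    print(f"{label}: {total:.1f} of {LINK_GBPS:.2f} Gbps -> {'fits' if ok else 'too much'}")
```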

Unfortunately, HDMI doesn't have a daisy chain feature and with most cards only having one or two HDMI sockets at best, DisplayPort is the clear choice for multi-monitor gaming.

Choice: For sheer number of outputs, DisplayPort.

Closing words

It shouldn't have come as a surprise that DisplayPort was the best choice for high refresh rate gaming, or for that matter, PCs in general. The latest revision of HDMI is very good, but it's something of a jack-of-all-trades, providing support for TVs, consoles, media players, as well as computers.

But that breadth of usability curtails its outright performance, in comparison to DisplayPort, and the latter provides the best support for high resolutions, high refresh rates, and other gaming features.

We'll close this overview of DisplayPort vs HDMI for high refresh rate gaming with a simple three-word chant: cables, cables, cables. Don't go cheap and use whatever's rattling around in the box, as you may find your cherished gaming machine isn't quite as stable as it could be. Use one from a known brand and follow the labels – pick the right one for your exact needs. You can skip the adamantium-plated, 0% nitrogen snake-oil products, though!
