The CPU is often called the brains of a computer, and just like the human brain, it consists of several parts that work together to process information. There are parts that take in information, parts that store information, parts that process information, parts that help output information, and more. In today's explainer, we'll go over the key elements that make up a CPU and how they all work together to power your computer.

You should know, this article is part of our Anatomy series that dissects all the tech behind PC components. We also have a series dedicated to CPU Design that goes deeper into the CPU design process and how things work internally. It's a highly recommended technical read. This anatomy article will revisit some of the fundamentals from the CPU series, but at a higher level and with additional content.

Compared to previous articles in our Anatomy series, this one will inevitably be more abstract. When you're looking inside something like a power supply, you can clearly see the capacitors, transformers, and other components. That's simply not possible with a modern CPU since everything is so tiny and because Intel and AMD don't publicly disclose their designs. Most CPU designs are proprietary, so the topics covered in this article represent the general features that all CPUs have.

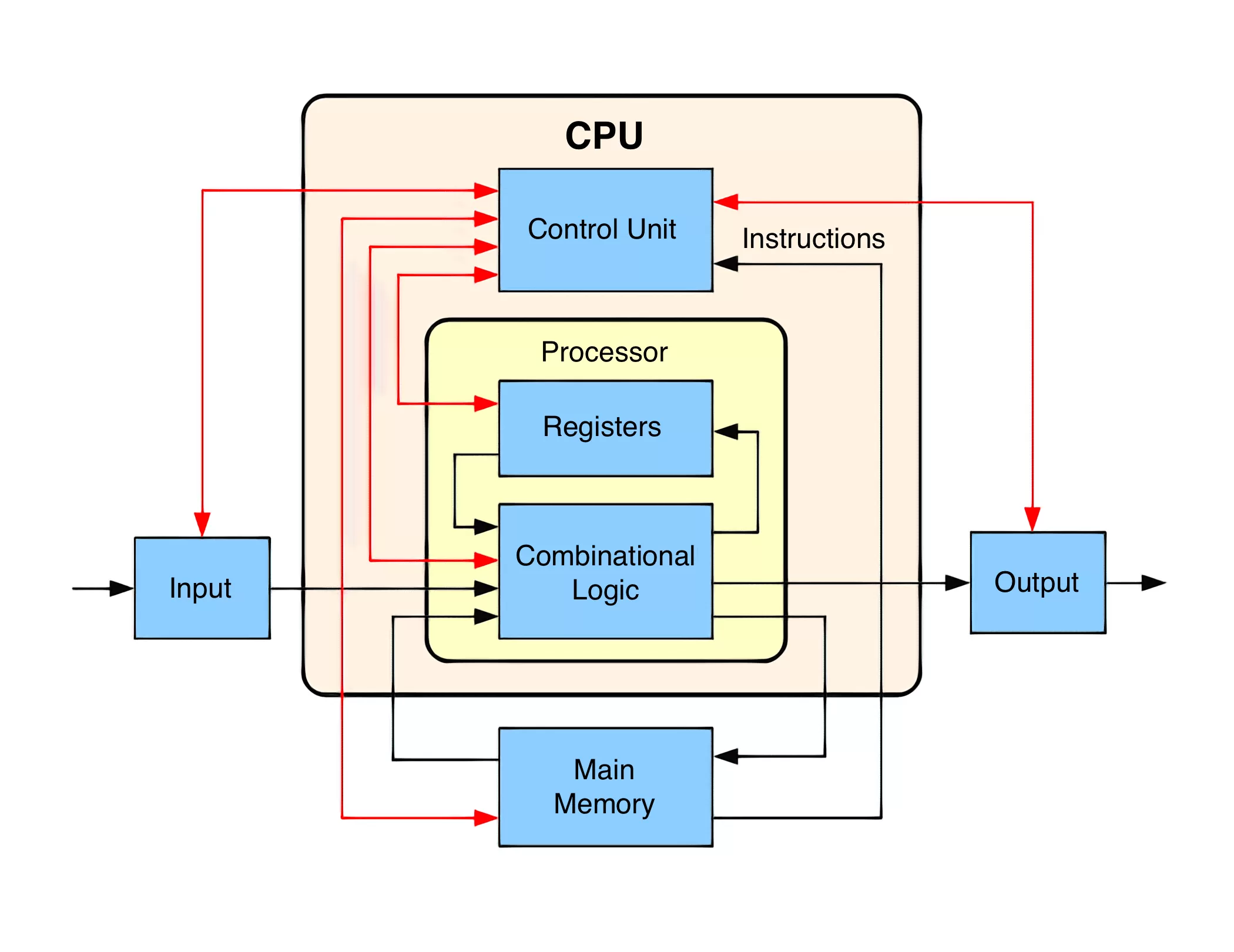

So let's dive in. Every digital system needs some form of a Central Processing Unit. Fundamentally, a programmer writes code to do whatever their task is, and then a CPU executes that code to produce the intended result. The CPU is also connected to other parts of a system like memory and I/O to help keep it fed with the relevant data, but we won't cover those systems today.

The CPU Blueprint: An ISA

When analyzing any CPU, the first thing you'll come across is the Instruction Set Architecture (ISA). This is the figurative blueprint for how the CPU operates and how all the internal systems interact with each other. Just like there are many breeds of dogs within the same species, there are many different types of ISAs a CPU can be built on. The two most common types are x86 (found in desktops and laptops) and ARM (found in embedded and mobile devices).

There are some others like MIPS, RISC-V, and PowerPC that have more niche applications. An ISA will specify what instructions the CPU can process, how it interacts with memory and caches, how work is divided in the many stages of processing, and more.

To cover the main portions of a CPU, we'll follow the path an instruction takes as it is executed. Different types of instructions may follow different paths and use different parts of a CPU, but we'll generalize here to cover the biggest parts. We'll start with the most basic design of a single-core processor and gradually add complexity as we get towards a more modern design.

Control Unit and Datapath

The parts of a CPU can be divided into two: the control unit and the datapath. Imagine a train car. The engine is what moves the train, but the conductor is pulling the levers behind the scenes and controlling the different aspects of the engine. A CPU is the same way.

The datapath is like the engine and, as the name suggests, is the path where the data flows as it is processed. The datapath receives the inputs, processes them, and sends them out to the right place when they are done. The control unit tells the datapath how to operate, like the conductor of the train. Depending on the instruction, the control unit will route signals to different components, turn different parts of the datapath on and off, and monitor the state of the CPU.

The Instruction Cycle - Fetch

The first thing our CPU must do is figure out what instructions to execute next and transfer them from memory into the CPU. Instructions are produced by a compiler and are specific to the CPU's ISA. ISAs will share most common types of instructions like load, store, add, subtract, etc., but there may be additional special types of instructions unique to each particular ISA. The control unit will know what signals need to be routed where for each type of instruction.

When you run a .exe on Windows for example, the code for that program is moved into memory and the CPU is told what address the first instruction starts at. The CPU always maintains an internal register that holds the memory location of the next instruction to be executed. This is called the Program Counter (PC).

Once it knows where to start, the first step of the instruction cycle is to get that instruction. This moves the instruction from memory into the CPU's instruction register and is known as the Fetch stage. Realistically, the instruction is likely to be in the CPU's cache already, but we'll cover those details in a bit.
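As a rough sketch of the fetch step, the toy model below keeps a program counter and copies the instruction it points at into an instruction register. The class name `ToyFetchUnit`, the memory contents, and the one-instruction-per-address layout are assumptions made for illustration; a real CPU fetches encoded bytes, usually from its cache.

```python
class ToyFetchUnit:
    """Toy model of the fetch stage: a program counter plus an instruction register."""

    def __init__(self, memory, start_address):
        self.memory = memory              # address -> instruction (assumed layout)
        self.pc = start_address           # Program Counter: address of the next instruction
        self.instruction_register = None

    def fetch(self):
        # Copy the instruction at the PC into the instruction register,
        # then advance the PC to the following instruction.
        self.instruction_register = self.memory[self.pc]
        self.pc += 1
        return self.instruction_register


memory = {100: "LOAD A, 1234", 101: "LOAD B, 5678", 102: "ADD C, A, B"}
unit = ToyFetchUnit(memory, start_address=100)
first = unit.fetch()
second = unit.fetch()
```

After two fetches the program counter points at address 102, ready for the third instruction.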

The Instruction Cycle - Decode

When the CPU has an instruction, it needs to figure out specifically what type of instruction it is. This is called the Decode stage. Each instruction will have a certain set of bits called the Opcode that tells the CPU how to interpret it. This is similar to how different file extensions are used to tell a computer how to interpret a file. For example, .jpg and .png are both image files, but they organize data in a different way so the computer needs to know the type in order to properly interpret them.
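To make the opcode idea concrete, here is a minimal sketch of a decoder for a made-up 16-bit instruction format. The field layout and the opcode numbers are hypothetical and do not match x86, ARM, or any other real ISA; the point is only that a fixed group of bits selects how the rest of the instruction is interpreted.

```python
# Hypothetical 16-bit encoding: [opcode:4][dest:4][src1:4][src2:4]
OPCODES = {0x1: "ADD", 0x2: "SUB", 0x3: "LOAD", 0x4: "STORE", 0x5: "BRANCH"}


def decode(instruction):
    """Split an instruction word into its opcode and register fields."""
    opcode = (instruction >> 12) & 0xF    # top four bits say what kind of instruction this is
    dest = (instruction >> 8) & 0xF
    src1 = (instruction >> 4) & 0xF
    src2 = instruction & 0xF
    return {"op": OPCODES[opcode], "dest": dest, "src1": src1, "src2": src2}


decoded = decode(0x1312)   # opcode 0x1, dest register 3, source registers 1 and 2
```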

Depending on how complex the ISA is, the instruction decode portion of the CPU may get complex. An ISA like RISC-V may only have a few dozen instructions while x86 has thousands. On a typical Intel x86 CPU, the decode process is one of the most challenging and takes up a lot of space. The most common types of instructions that a CPU would decode are memory, arithmetic, or branch instructions.

3 Main Instruction Types

A memory instruction may be something like "read the value from memory address 1234 into value A" or "write value B to memory address 5678". An arithmetic instruction might be something like "add value A to value B and store the result into value C". A branch instruction might be something like "execute this code if value C is positive or execute that code if value C is negative". A typical program may chain these together to come up with something like "add the value at memory address 1234 to the value at memory address 5678 and store it in memory address 4321 if the result is positive or at address 8765 if the result is negative".

Before we start executing the instruction we just decoded, we need to pause for a moment to talk about registers.

A CPU has a few very small but very fast pieces of memory called registers. On a 64-bit CPU these would hold 64 bits each and there may be just a few dozen for the core. These are used to store values that are currently being used and can be considered something like an L0 cache. In the instruction examples above, values A, B, and C would all be stored in registers.
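The chained example above can be sketched in a few lines, with a dictionary standing in for memory and another for the registers A, B, and C. This is an illustration of how the three instruction types combine, not how any real CPU represents its state, and treating a zero result as positive is an assumption since the example leaves that case open.

```python
def run_example(memory):
    """Run the chained example using three registers A, B, and C."""
    registers = {"A": 0, "B": 0, "C": 0}

    # Memory instructions: read two values into registers.
    registers["A"] = memory[1234]
    registers["B"] = memory[5678]

    # Arithmetic instruction: C = A + B.
    registers["C"] = registers["A"] + registers["B"]

    # Branch instruction: choose which store to execute based on the sign of C.
    # (Zero is treated as positive here; that choice is an assumption.)
    if registers["C"] >= 0:
        memory[4321] = registers["C"]
    else:
        memory[8765] = registers["C"]
    return registers


mem_pos = {1234: 7, 5678: 5}
run_example(mem_pos)      # result is positive, so it lands at address 4321
mem_neg = {1234: 7, 5678: -10}
run_example(mem_neg)      # result is negative, so it lands at address 8765
```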

The ALU

Back to the execution stage now. This will be different for the 3 types of instructions we talked about above, so we'll cover each one separately.

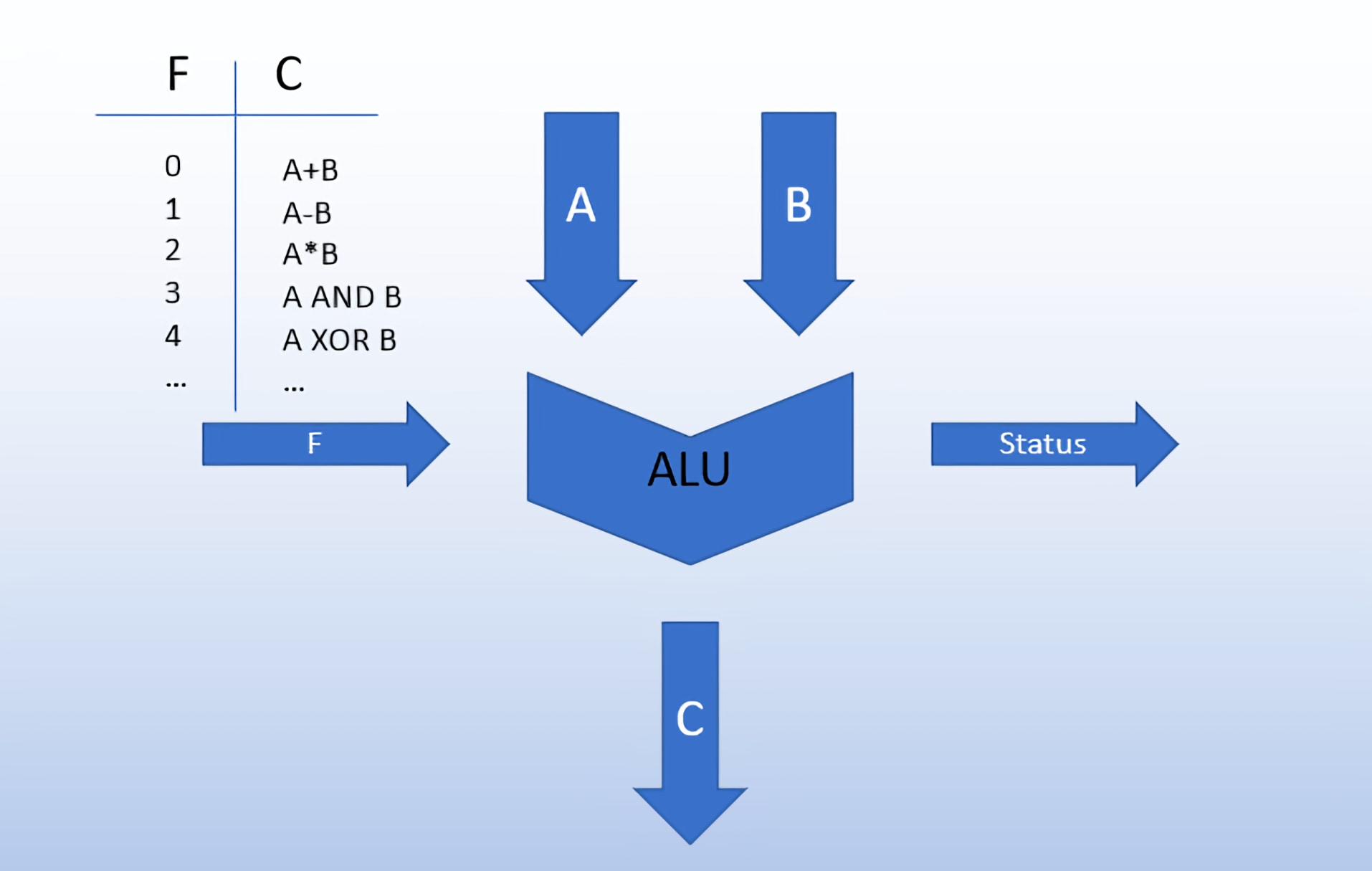

We'll start with arithmetic instructions since they are the easiest to understand. These types of instructions are fed into an Arithmetic Logic Unit (ALU) for processing. An ALU is a circuit that typically takes two inputs with a control signal and outputs a result.

Imagine a basic calculator you used in middle school. To perform an operation, you type in the two input numbers as well as what type of operation you want to perform. The calculator does the computation and outputs the result. In the case of our CPU's ALU, the type of operation is determined by the instruction's opcode and the control unit would send that to the ALU. In addition to basic arithmetic, ALUs can also perform bitwise operations like AND, OR, NOT, and XOR. The ALU will also output some status info for the control unit about the calculation it has just completed. This could include things like whether the result was positive, negative, zero, or had an overflow.
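The calculator analogy maps fairly directly onto code. The sketch below models an 8-bit ALU that takes two inputs plus an operation name and returns a result along with status flags. The operation names, the 8-bit default width, and the flag names are assumptions for illustration; a hardware ALU receives its operation as control signals rather than strings.

```python
def alu(op, a, b=0, width=8):
    """Toy ALU: returns (result, flags) for a `width`-bit two's-complement operation."""
    mask = (1 << width) - 1
    sign_bit = 1 << (width - 1)
    a &= mask
    b &= mask
    b_eff = b                              # second operand of the underlying adder

    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        b_eff = (~b) & mask                # subtract by adding the two's complement
        raw = a + b_eff + 1
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    elif op == "XOR":
        raw = a ^ b
    elif op == "NOT":
        raw = ~a
    else:
        raise ValueError(f"unknown ALU operation: {op}")

    result = raw & mask
    flags = {
        "zero": result == 0,
        "negative": bool(result & sign_bit),
        "overflow": False,
    }
    if op in ("ADD", "SUB"):
        # Signed overflow: both adder inputs share a sign that the result lacks.
        flags["overflow"] = bool((a ^ result) & (b_eff ^ result) & sign_bit)
    return result, flags
```

For instance, `alu("ADD", 100, 100)` cannot represent 200 as a signed 8-bit value, so the overflow flag is set, which is the kind of status information the control unit would receive.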

An ALU is most associated with arithmetic operations, but it may also be used for memory or branch instructions. For example, the CPU may need to calculate a memory address given as the result of a previous arithmetic operation. It may also need to calculate the offset to add to the program counter that a branch instruction requires. Something like "if the previous result was negative, jump ahead 20 instructions."

Memory Instructions and Hierarchy

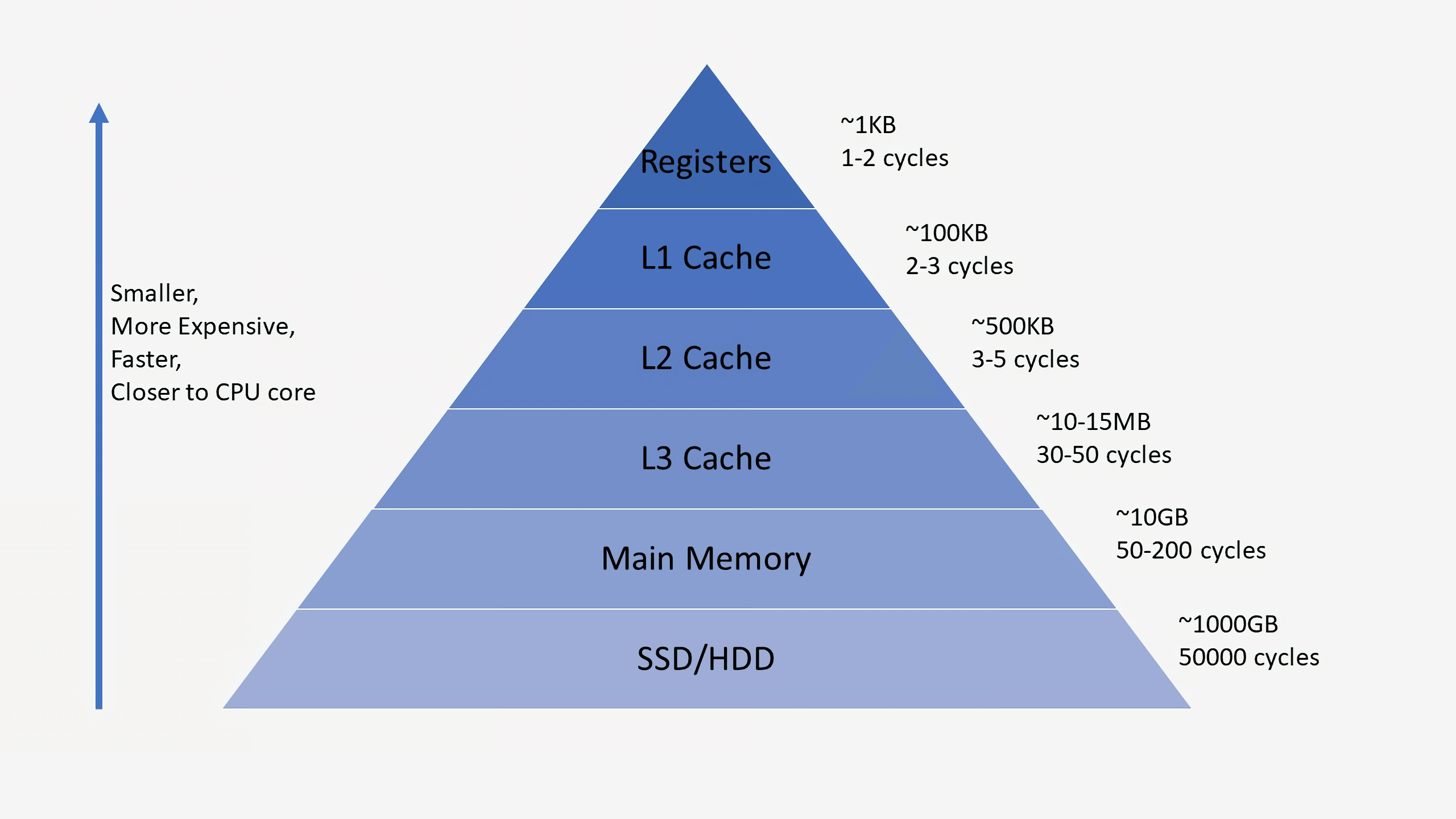

For memory instructions, we'll need to understand a concept called the Memory Hierarchy. This represents the relationship between caches, RAM, and main storage. When a CPU receives a memory instruction for a piece of data that it doesn't yet have locally in its registers, it will go down the memory hierarchy until it finds it. Most modern CPUs contain three levels of cache: L1, L2, and L3. The first place the CPU will check is the L1 cache. This is the smallest and fastest of the three levels of cache. The L1 cache is typically split into a portion for data and a portion for instructions. Remember, instructions need to be fetched from memory just like data.

A typical L1 cache may be a few tens of KB per core. If the CPU can't find what it's looking for in the L1 cache, it will check the L2 cache. This may be on the order of a few MB. The next step is the L3 cache which may be a few tens of MB. If the CPU can't find the data it needs in the L3 cache, it will go to RAM and finally main storage. As we go down each step, the available space increases by roughly an order of magnitude, but so does the latency.

Once the CPU finds the data, it will bring it up the hierarchy so that the CPU has fast access to it if needed in the future. There are a lot of steps here, but it ensures that the CPU has fast access to the data it needs. For example, the CPU can read from its internal registers in just a cycle or two, L1 in a handful of cycles, L2 in ten or so cycles, and the L3 in a few dozen. Going to RAM takes on the order of hundreds of cycles, and going to main storage could take tens of thousands or even millions of cycles. Depending on the system, each core will likely have its own private L1 cache, share an L2 with one other core, and share an L3 among groups of four or more cores. We'll talk more about multi-core CPUs later in this article.
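Here is a minimal sketch of walking down the hierarchy on a load and filling the upper levels on the way back. The latency numbers are illustrative assumptions, the caches are modeled as unbounded sets with no eviction, and RAM is assumed to hold every address, so this shows only the lookup order and not realistic cache behavior.

```python
# Illustrative latencies in CPU cycles; real values vary widely by design.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40), ("RAM", 300)]


def load(address, caches):
    """Walk down the hierarchy until the address is found, then fill the levels above it.

    `caches` maps a cache level name to the set of addresses it currently holds.
    Returns (level_that_hit, total_cycles_spent).
    """
    cycles = 0
    for index, (name, latency) in enumerate(LEVELS):
        cycles += latency
        if name == "RAM" or address in caches[name]:
            # Bring the data up the hierarchy so the next access is fast.
            for upper_name, _ in LEVELS[:index]:
                caches[upper_name].add(address)
            return name, cycles
    raise AssertionError("unreachable: RAM always hits in this sketch")


caches = {"L1": set(), "L2": set(), "L3": {0x5678}}
first = load(0x5678, caches)    # misses L1 and L2, hits in L3
second = load(0x5678, caches)   # now hits in L1
miss = load(0x9999, caches)     # goes all the way to RAM
```

The second access to the same address is much cheaper than the first, which is the whole reason for copying data up the hierarchy.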

Branch and Jump Instructions

The last of the three major instruction types is the branch instruction. Modern programs jump around all the time and a CPU will rarely ever execute more than a dozen contiguous instructions without a branch. Branch instructions come from programming elements like if-statements, for-loops, and return-statements. These are all used to interrupt the program execution and switch to a different part of the code. There are also jump instructions which are branch instructions that are always taken.

Conditional branches are especially tricky for a CPU since it may be executing multiple instructions at once and may not determine the outcome of a branch until after it has started on subsequent instructions.

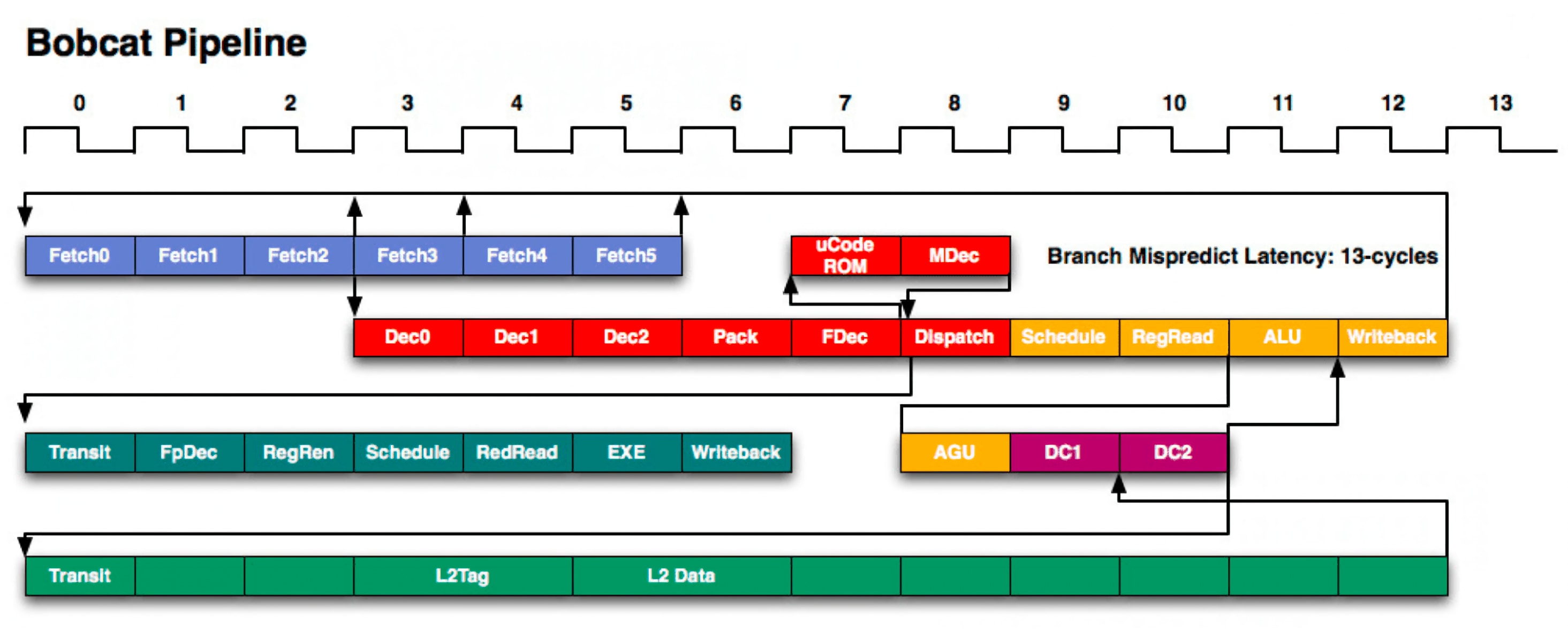

In order to fully understand why this is an issue, we'll need to take another diversion and talk about pipelining. Each step in the instruction cycle may take a few cycles to complete. That means that while an instruction is being fetched, the ALU would otherwise be sitting idle. To maximize a CPU's efficiency, we split the work into stages and overlap the stages of different instructions in a process called pipelining.

The classic way to understand this is through an analogy to doing laundry. You have two loads to do and washing and drying each take an hour. You could put the first load in the washer and then the dryer when it's done, and then start the second load. This would take four hours. However, if you divided the work and started the second load washing while the first load was drying, you could get both loads done in three hours. The time saved grows with the number of loads you have and the number of washers and dryers. It still takes two hours to do an individual load, but the overlap increases the total throughput from 0.5 loads/hr to about 0.67 loads/hr.
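The laundry arithmetic generalizes to a simple formula, sketched below under the assumption that every stage takes the same amount of time and there is one machine per stage. Without overlap, each load passes through every stage alone; with overlap, the first load takes the full trip and each later load finishes one stage-time after the previous one. The function and parameter names are made up for this example.

```python
def total_hours(loads, stages=2, hours_per_stage=1, pipelined=True):
    """Total time to finish `loads` items that each pass through `stages` equal stages."""
    if loads == 0:
        return 0
    if pipelined:
        # First load fills the pipeline; every later load adds one stage-time.
        return (loads + stages - 1) * hours_per_stage
    return loads * stages * hours_per_stage


sequential = total_hours(2, pipelined=False)   # wash, dry, wash, dry
overlapped = total_hours(2)                    # second wash overlaps the first dry
throughput_sequential = 2 / sequential
throughput_overlapped = 2 / overlapped
many_loads = total_hours(100)                  # throughput approaches one load per hour
```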

CPUs use this same method to improve instruction throughput. A modern ARM or x86 CPU may have 20+ pipeline stages which means at any given point, that core is processing 20+ different instructions at once. Each design is unique, but one sample division may be 4 cycles for fetch, 6 cycles for decode, 3 cycles for execute, and 7 cycles for updating the results back to memory.

Back to branches, hopefully you can start to see the issue. If we don't know that an instruction is a branch until cycle 10, we will have already started executing 9 new instructions that may be invalid if the branch is taken. To get around this issue, CPUs have very complex structures called branch predictors. They use concepts similar to those in machine learning to try and guess if a branch will be taken or not. The intricacies of branch predictors are well beyond the scope of this article, but on a basic level, they track the outcomes of previous branches to learn whether an upcoming branch is likely to be taken. Modern branch predictors can have 95% accuracy or higher.
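To give a feel for how tracking previous outcomes can work, here is a sketch of a 2-bit saturating counter, a classic textbook scheme. It is far simpler than the predictors in modern CPUs and is not taken from any specific design; the class name, the starting counter value, and the loop pattern used to exercise it are all assumptions for illustration.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating counter kept per branch address."""

    def __init__(self):
        # branch address -> counter in 0..3; unseen branches start at 1 (weakly not taken)
        self.counters = {}

    def predict(self, address):
        return self.counters.get(address, 1) >= 2   # True means "predict taken"

    def update(self, address, taken):
        counter = self.counters.get(address, 1)
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
        self.counters[address] = counter


def accuracy(predictor, address, outcomes):
    """Fraction of outcomes the predictor guessed correctly, learning as it goes."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(address) == taken:
            correct += 1
        predictor.update(address, taken)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then not taken once at loop exit, repeated 10 times.
loop_outcomes = ([True] * 9 + [False]) * 10
loop_accuracy = accuracy(TwoBitPredictor(), 0x400, loop_outcomes)
```

Because the counter needs two wrong guesses in a row to flip its prediction, a single loop exit does not make it forget that the branch is usually taken.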

Once the result of the branch is known for sure (it has finished that stage of the pipeline), the program counter will be updated and the CPU will go on to execute the next instruction. If the branch was mispredicted, the CPU will throw out all the instructions after the branch that it mistakenly started to execute and start up again from the correct place.
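A back-of-the-envelope estimate shows why prediction accuracy matters so much. The sketch below uses a common simplified model in which every instruction would take one cycle on average, except that each mispredicted branch wastes a fixed number of cycles refilling the pipeline. The function name, the sample inputs, and the base of one cycle per instruction are assumptions, not measurements from any real chip.

```python
def average_cycles_per_instruction(branch_fraction, mispredict_rate,
                                   flush_penalty_cycles, base_cpi=1.0):
    """Simplified model: base cost plus the average cost of branch mispredictions."""
    return base_cpi + branch_fraction * mispredict_rate * flush_penalty_cycles


# Assumed workload: 1 in 10 instructions is a branch and a flush costs 20 cycles.
with_good_predictor = average_cycles_per_instruction(0.10, 0.05, 20)   # 95% accurate
with_coin_flip = average_cycles_per_instruction(0.10, 0.50, 20)        # 50% accurate
```

Under these assumptions, dropping from 95% to 50% accuracy roughly doubles the average cost of every instruction.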

Out-Of-Order Execution

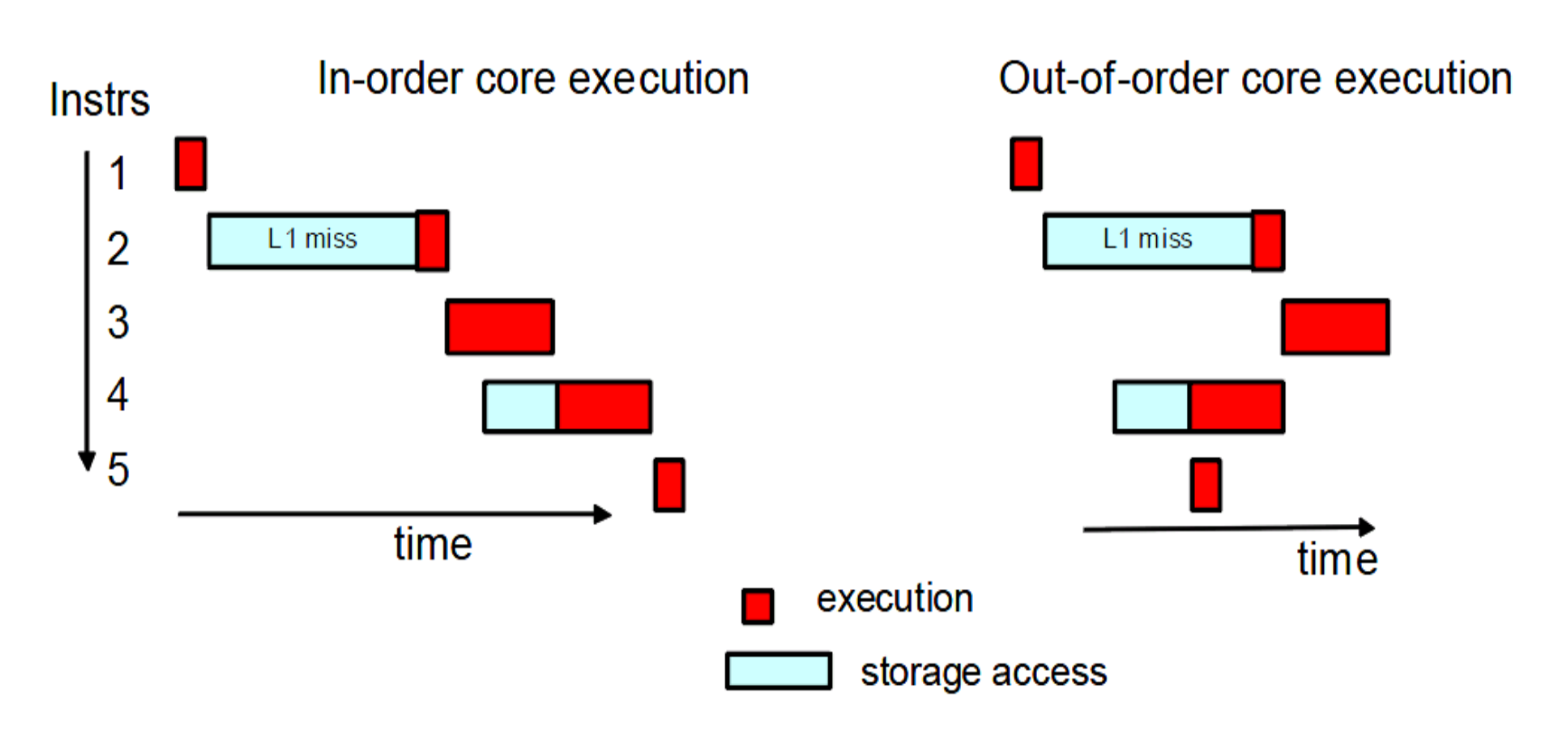

Now that we know how to execute the three most common types of instructions, let's take a look at some of the more advanced features of a CPU. Virtually all modern processors don't actually execute instructions in the order in which they are received. A paradigm called out-of-order execution is used to minimize downtime while waiting for other instructions to finish.

If a CPU knows that an upcoming instruction requires data that won't be ready in time, it can switch the instruction order and bring in an independent instruction from later in the program while it waits. This instruction reordering is an extremely powerful tool, but it is far from the only trick CPUs use.
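The reordering idea can be sketched with a tiny scheduler that issues at most one instruction per cycle. In in-order mode it may only consider the oldest waiting instruction; in out-of-order mode it may pick any waiting instruction whose inputs are ready. The instruction format, register names, and latencies are made up, and the sketch ignores hazards that real designs handle with register renaming, so each register here is written only once.

```python
def schedule(instructions, out_of_order):
    """Return a list of (issue_cycle, name) pairs.

    Each instruction is (name, dest, sources, latency). A result becomes
    usable `latency` cycles after its instruction issues.
    """
    ready_at = {}                      # register -> cycle its value becomes available
    waiting = list(instructions)
    issued = []
    cycle = 0
    while waiting:
        window = len(waiting) if out_of_order else 1
        for index in range(window):
            name, dest, sources, latency = waiting[index]
            # Registers still to be written by older, not-yet-issued instructions.
            pending = {older[1] for older in waiting[:index]}
            if all(src not in pending and ready_at.get(src, 0) <= cycle
                   for src in sources):
                ready_at[dest] = cycle + latency
                issued.append((cycle, name))
                del waiting[index]
                break
        cycle += 1
    return issued


program = [
    ("load", "r1", ["r0"], 4),   # slow memory access
    ("add", "r2", ["r1"], 1),    # depends on the load
    ("mul", "r3", ["r4"], 1),    # independent of the load
    ("sub", "r5", ["r6"], 1),    # independent of the load
]
in_order = schedule(program, out_of_order=False)
reordered = schedule(program, out_of_order=True)
```

In the reordered run, the two independent instructions fill the cycles that the in-order run spends stalled behind the load.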

Another performance improving feature is called prefetching. If you were to time how long it takes for a random instruction to complete from start to finish, you'd find that the memory access takes up most of the time. A prefetcher is a unit in the CPU that tries to look ahead at future instructions and what data they will require. If it sees one coming that requires data that the CPU doesn't have cached, it will reach out to the RAM and fetch that data into the cache. Hence the name pre-fetch.
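As a simple illustration, the sketch below models a next-line prefetcher: whenever a block of memory is accessed, it also pulls in the following block. This is a deliberately basic pattern-based scheme, swapped in here because it fits in a few lines; it is not the look-ahead mechanism of any particular CPU. The 64-byte block size, the unbounded cache, and the assumption that prefetches complete instantly are all simplifications.

```python
def count_misses(addresses, prefetch_next, block_size=64):
    """Count cache misses for a stream of byte addresses using an unbounded toy cache."""
    cached_blocks = set()
    misses = 0
    for address in addresses:
        block = address // block_size
        if block not in cached_blocks:
            misses += 1
            cached_blocks.add(block)
        if prefetch_next:
            # Guess that the program will touch the following block soon.
            cached_blocks.add(block + 1)
    return misses


sequential = range(0, 64 * 16, 8)    # walk through 16 blocks, 8 bytes at a time
misses_without = count_misses(sequential, prefetch_next=False)
misses_with = count_misses(sequential, prefetch_next=True)
```

For a sequential walk like this one, only the very first block misses once prefetching is enabled. Access patterns that jump around unpredictably would benefit far less.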

Accelerators and the Future

Another major feature starting to be included in CPUs is task-specific accelerators. These are circuits whose entire job is to perform one small task as fast as possible. This might include encryption, media encoding, or machine learning.

The CPU can do these things on its own, but it is vastly more efficient to have a unit dedicated to them. A great example of this is onboard graphics compared to a dedicated GPU. Sure, the CPU can perform the computations needed for graphics processing, but having a dedicated unit for them offers orders of magnitude better performance. With the rise of accelerators, the actual core of a CPU may only take up a small fraction of the chip.

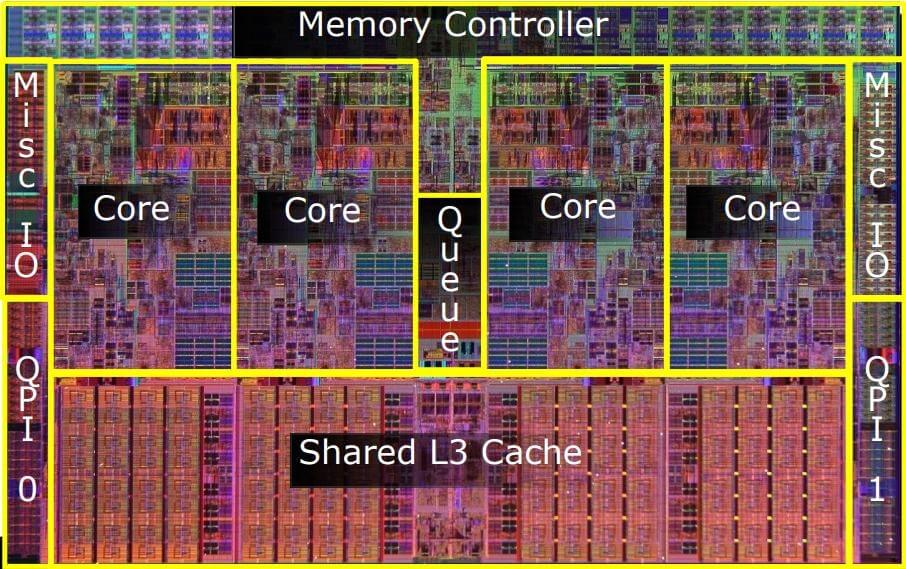

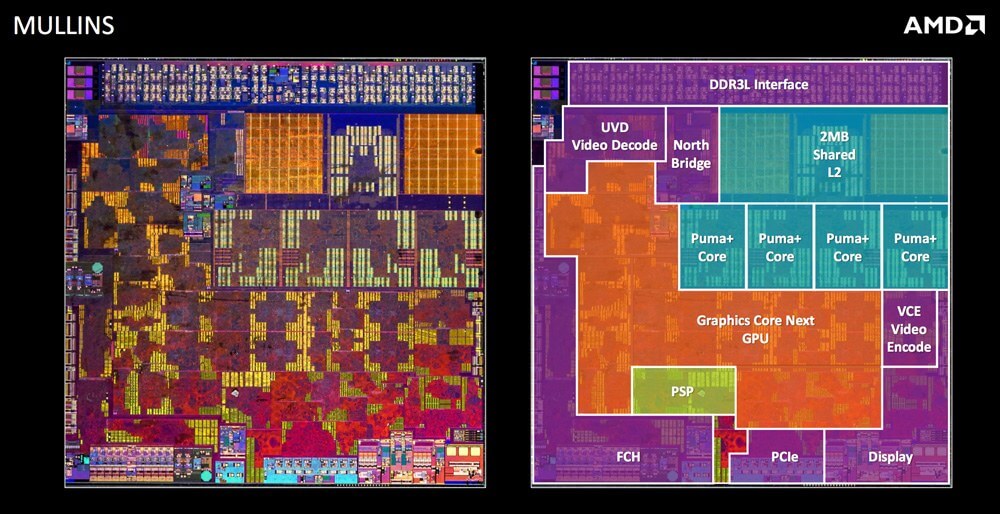

The first picture above shows an Intel CPU from several years back. Most of the space is taken up by cores and cache. The second picture is of a much newer AMD chip. Most of the space there is taken up by components other than the cores.

Going Multicore

The last major feature to cover is how we can connect a bunch of individual CPUs together to form a multicore CPU. It's not as simple as just putting multiple copies of the single core design we talked about earlier. Just like there's no easy way to turn a single-threaded program into a multi-threaded program, the same concept applies to hardware. The issues come from dependence between the cores.

For, say, a 4-core design, the CPU needs to be able to issue instructions 4 times as fast. It also needs four separate interfaces to memory. With multiple entities operating on potentially the same pieces of data, issues like coherence and consistency must be resolved. If two cores were both processing instructions that used the same data, how do they know who has the right value? What if one core modified the data but it didn't reach the other core in time for it to execute? Since they have separate caches that may store overlapping data, complex algorithms and controllers must be used to remove these conflicts.
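One way to picture the stale-data problem and a fix for it is a write-invalidate scheme, sketched below. Each core has a private cache, and whenever one core writes a value, the copies held by other cores are thrown away so they must re-read the new value. This is a heavily simplified illustration with write-through to memory; real protocols such as MESI track a state for each cache line, and the class and method names here are hypothetical.

```python
class CoherentSystem:
    """Toy write-invalidate scheme: a write removes stale copies from other cores' caches."""

    def __init__(self, core_count, memory):
        self.memory = dict(memory)
        self.caches = [dict() for _ in range(core_count)]

    def read(self, core, address):
        cache = self.caches[core]
        if address not in cache:             # miss: fetch the current value from memory
            cache[address] = self.memory[address]
        return cache[address]

    def write(self, core, address, value):
        self.caches[core][address] = value
        self.memory[address] = value         # write-through keeps memory up to date
        for other, cache in enumerate(self.caches):
            if other != core:
                cache.pop(address, None)     # invalidate stale copies elsewhere


system = CoherentSystem(core_count=2, memory={0x10: 1})
before = system.read(1, 0x10)    # core 1 caches the old value
system.write(0, 0x10, 42)        # core 0 updates it and invalidates core 1's copy
after = system.read(1, 0x10)     # core 1 misses and re-reads the new value
```

Without the invalidation loop, core 1 would keep returning its cached copy of the old value after core 0's write.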

Proper branch prediction is also extremely important as the number of cores in a CPU increases. The more cores are executing instructions at once, the higher the likelihood that one of them is processing a branch instruction. This means the instruction flow may change at any time.

Typically, separate cores will process instruction streams from different threads. This helps reduce the dependence between cores. That's why if you check Task Manager, you'll often see one core working hard and the others hardly working. Many programs aren't designed for multithreading. There may also be certain cases where it's more efficient to have one core do the work rather than pay the overhead penalties of trying to divide up the work.

Physical Design

Most of this article has focused on the architectural design of a CPU since that's where most of the complexity is. However, this all needs to be created in the real world and that adds another level of complexity.

In order to synchronize all the components throughout the processor, a clock signal is used. Modern processors typically run between 3.0GHz and 5.0GHz and that hasn't seemed to change in the past decade. At each of these cycles, the billions of transistors inside a chip are switching on and off.

Clocks are critical to ensure that as each stage of the pipeline advances, all the values show up at the right time. The clock, together with how much work the design completes in each cycle, determines how many instructions a CPU can process per second. Increasing its frequency through overclocking will make the chip faster, but will also increase power consumption and heat output.
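The relationship between clock speed, throughput, and power can be roughed out with two small formulas. The first multiplies the clock rate by an assumed average number of instructions completed per cycle. The second uses the common approximation that dynamic power scales with frequency times voltage squared. Both are simplified models with made-up sample inputs, not figures for any real processor.

```python
def instructions_per_second(clock_ghz, instructions_per_cycle):
    """Throughput estimate: cycles per second times average instructions per cycle."""
    return clock_ghz * 1e9 * instructions_per_cycle


def relative_dynamic_power(frequency_ratio, voltage_ratio):
    """Approximate change in dynamic power, assuming power scales with f * V^2."""
    return frequency_ratio * voltage_ratio ** 2


stock = instructions_per_second(4.0, 2)          # assumed 4.0 GHz, 2 instructions per cycle
overclocked = instructions_per_second(4.4, 2)    # a 10% overclock
power_increase = relative_dynamic_power(1.10, 1.05)   # assumed 5% voltage bump to stay stable
```

In this example the 10% overclock buys 10% more throughput, while the power estimate rises by roughly 21%, which is why heat quickly becomes the limiting factor.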

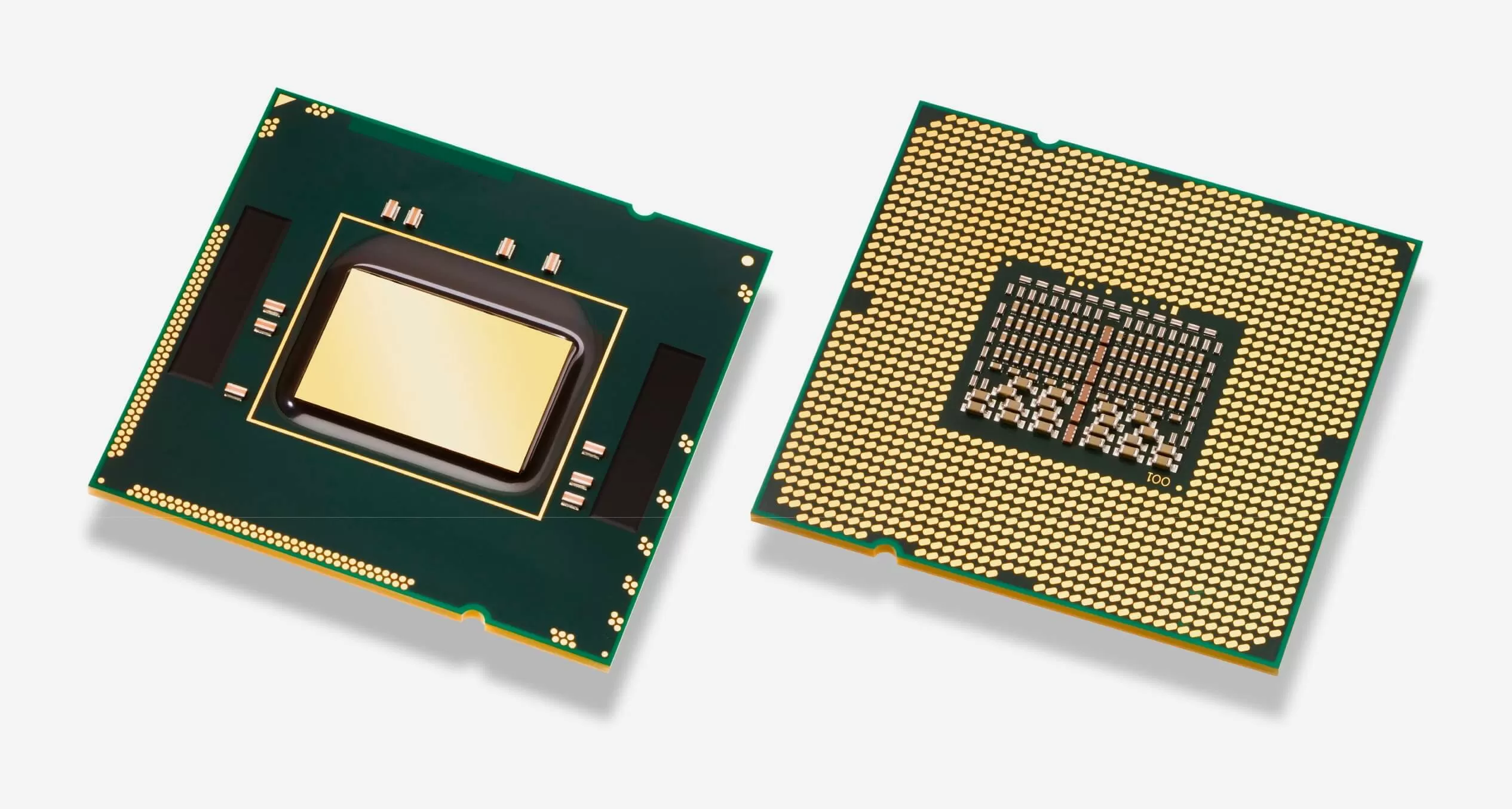

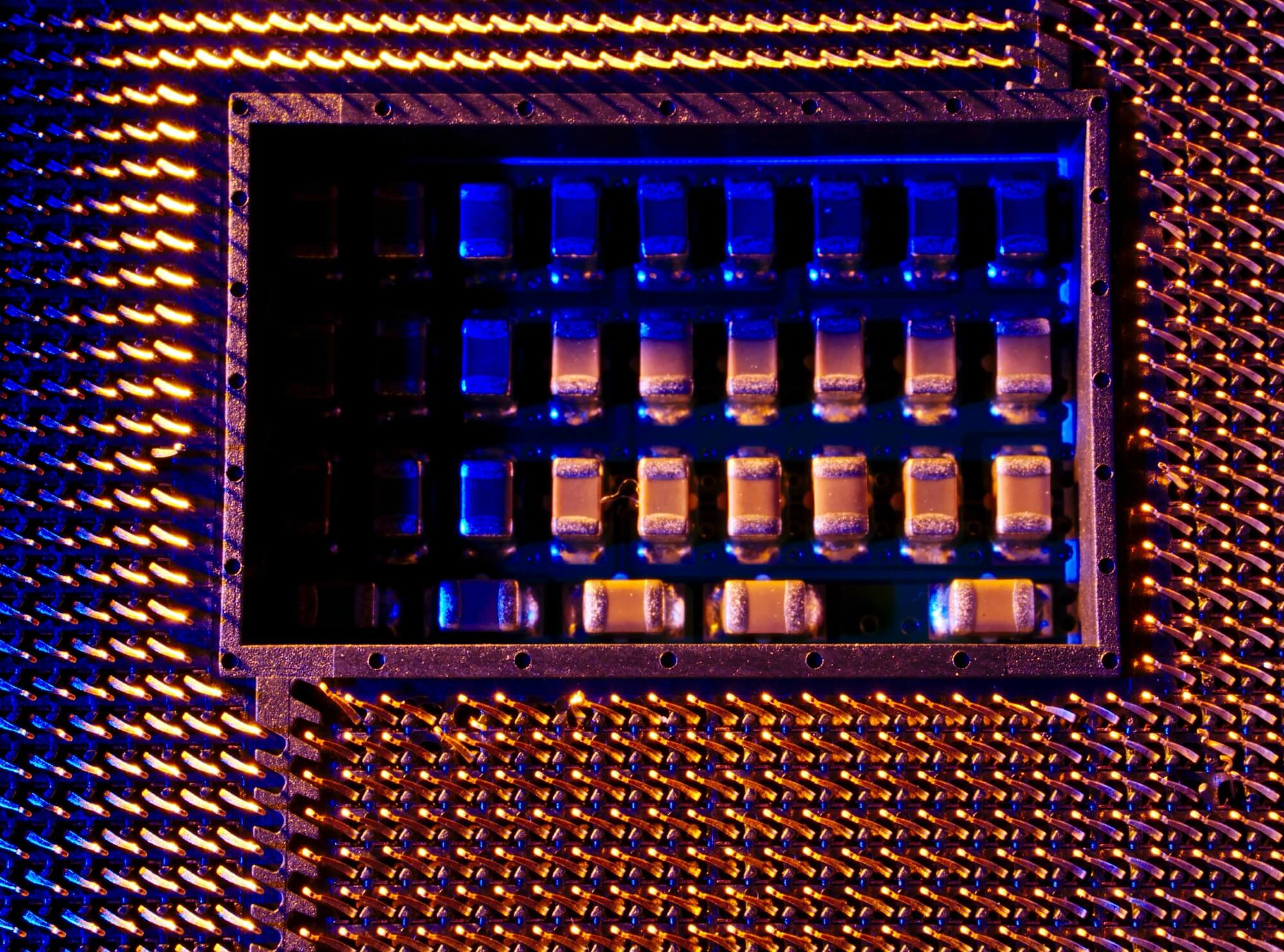

Heat is a CPU's worst enemy. As digital electronics heat up, the microscopic transistors can start to degrade. This can lead to damage in a chip if the heat is not removed. This is why all CPUs come with heat spreaders. The actual silicon die of a CPU may only take up 20% of the surface area of a physical device. Increasing the footprint allows the heat to be spread more evenly to a heatsink. It also allows more pins for interfacing with external components.

Modern CPUs can have a thousand or more input and output pins on the back. A mobile chip may only have a few hundred pins though since most of the computing parts are within the chip. Regardless of the design, around half of them are devoted to power delivery and the rest are used for data communications. This includes communication with the RAM, chipset, storage, PCIe devices, and more. With high performance CPUs drawing a hundred or more amps at full load, they need hundreds of pins to spread out the current draw evenly. The pins are usually gold plated to resist corrosion and keep the electrical contact reliable. Different manufacturers use different arrangements of pins throughout their many product lines.

Putting It All Together with an Example

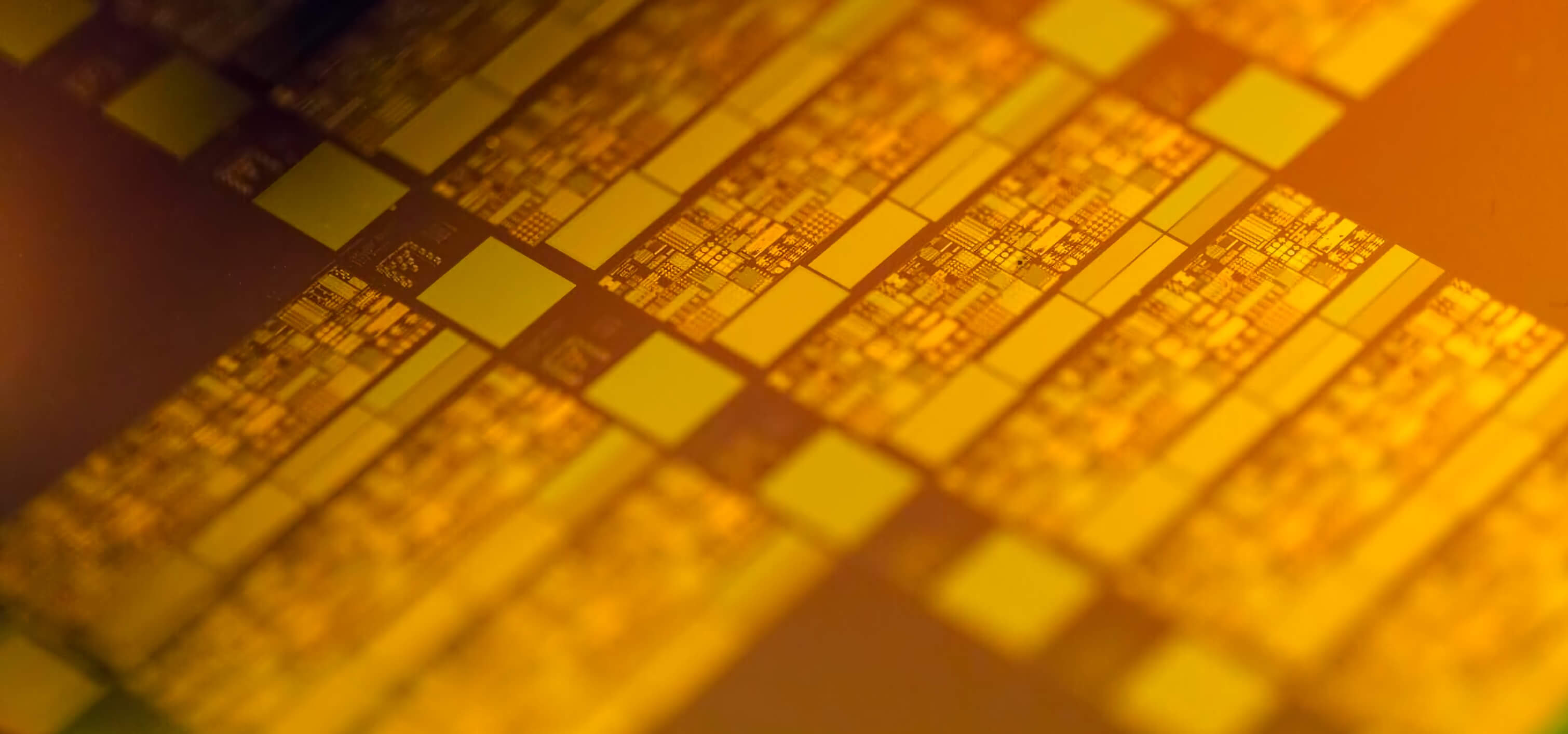

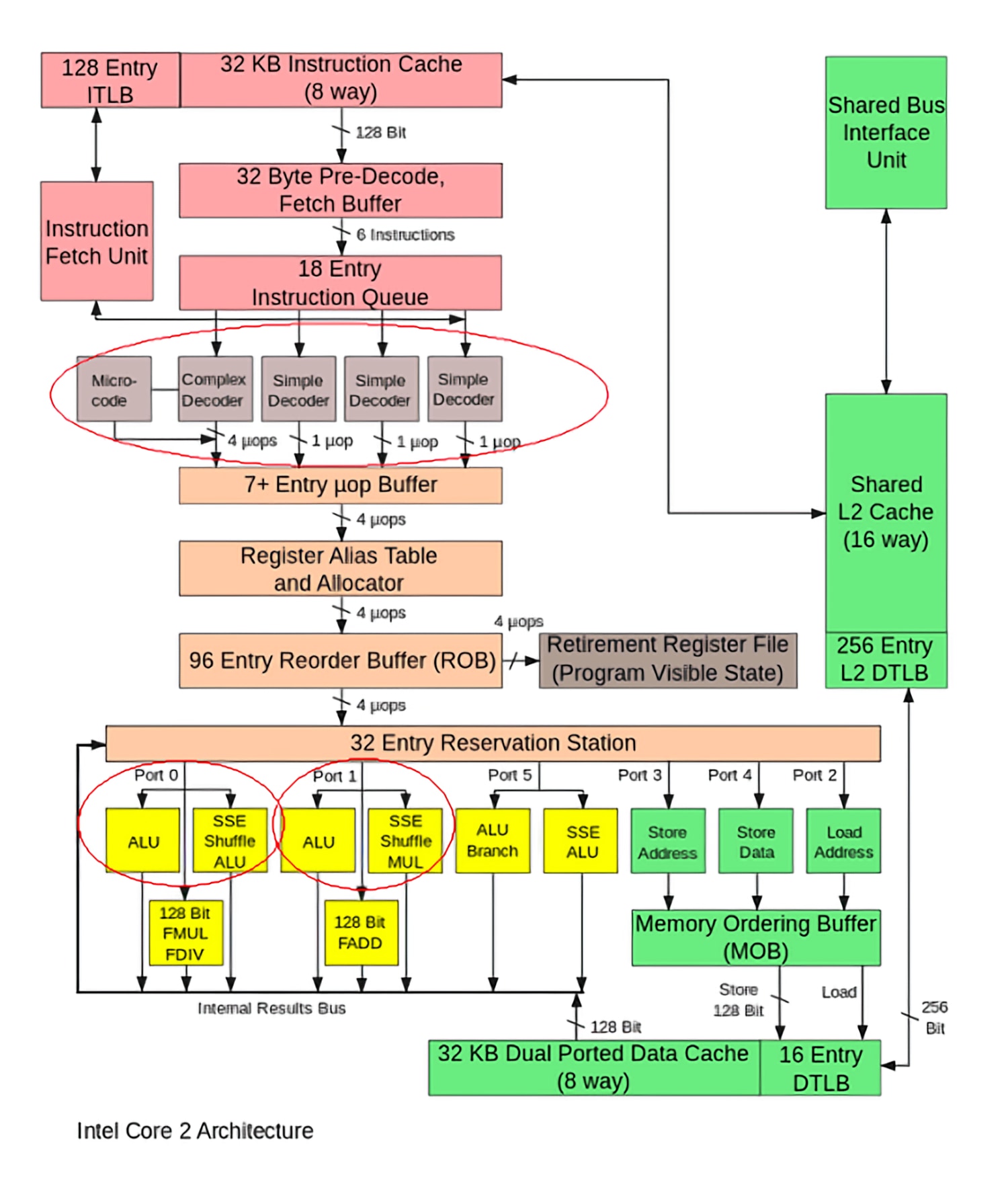

To wrap things up, we'll take a quick look at the design of an Intel Core 2 CPU. This is from way back in 2006, so some parts may be outdated, but details on newer designs are not available.

Starting at the top, we have the instruction cache and ITLB. The Translation Lookaside Buffer (TLB) is used to help the CPU know where in memory to go to find the instruction it needs. Those instructions are stored in an L1 instruction cache and are then sent into a pre-decoder. The x86 architecture is extremely complex and dense so there are many steps to decoding. Meanwhile, the branch predictor and prefetcher are both looking ahead for any potential issues caused by incoming instructions.

From there, the instructions are sent into an instruction queue. Recall how the out-of-order design allows a CPU to look at several instructions and choose the most timely one to execute. This queue holds the current instructions a CPU is considering. Once the CPU knows which instruction would be the best to execute, it is further decoded into micro-operations. While an instruction might contain a complex task for the CPU, micro-ops are granular tasks that are more easily interpreted by the CPU.
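As a loose illustration of what breaking an instruction into micro-ops can look like, the sketch below splits a hypothetical "add a register to a value in memory" instruction into a load, an add, and a store. The tuple format, the `tmp` register, and the micro-op names are invented for this example; actual micro-op encodings are internal to each CPU design.

```python
def to_micro_ops(instruction):
    """Split a hypothetical 'ADD [address], register' instruction into simpler steps."""
    op, target, source = instruction
    if op == "ADD" and target.startswith("["):
        return [
            ("LOAD", "tmp", target),      # read the memory operand into a temporary
            ("ADD", "tmp", source),       # do the arithmetic on registers only
            ("STORE", target, "tmp"),     # write the result back to memory
        ]
    return [instruction]                  # simple instructions map to a single micro-op


complex_ops = to_micro_ops(("ADD", "[1234]", "A"))
simple_ops = to_micro_ops(("ADD", "C", "A"))
```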

These instructions then go into the Register Alias Table, the Reorder Buffer (ROB), and the Reservation Station. The exact function of these three components is a bit complex (think graduate level university course), but they are used in the out-of-order process to help manage dependencies between instructions.

A single "core" will actually have many ALUs and memory ports. Incoming operations are put into the reservation station until an ALU or memory port is available for use. Once the required component is available, the instruction will be processed with the help from the L1 data cache. The output results will be stored and the CPU is now ready to start on the next instruction. That's about it!

While this article was not meant to be a definitive guide to exactly how every CPU works, it should give you a good idea of their inner workings and complexities. Frankly, no one outside of AMD and Intel actually knows how their CPUs work. Each section of this article represents an entire field of research and development so the information presented here just scratches the surface.

Keep Reading

If you are interested in learning more about how the various components covered in this article are designed, check out Part 2 of our CPU design series. If you're more interested in learning how a CPU is physically made down to the transistor and silicon level, check out Part 3.