Almost every desktop PC has one. They have billions of transistors, can use hundreds of watts of power, and can cost over a thousand dollars. They are masterpieces of electronic engineering and generate extremes in product loyalty and disdain... and yet the number of things they normally do can be counted on just one hand. Welcome to the world of graphics cards!

Graphics cards are among the PC components to which we dedicate the most time on TechSpot, so clearly they need a proper dissection. Let's assemble the team and head for the operating table. It's time to delve into the innards that make up a graphics card, splitting up its various parts and seeing what each bit does.

Enter the dragon

Ah, the humble graphics card, or to give it a more fulsome title: the video acceleration add-in expansion card. Designed and manufactured by global, multi-billion dollar businesses, they're often the largest and singularly most expensive component inside a desktop PC.

Right now on Amazon, the top 10 best selling ones range from just $53 to an eye-watering $1,200, with the latter coming in at 3.3 pounds (1.5 kg) in weight and over 12 inches (31 cm) in length. And all of this is to do what, exactly? Use silicon chips on a circuit board to, for most users, create 2D & 3D graphics, and encode/decode video signals.

That sounds like a lot of metal, plastic, and money to do just a few things, so we better get stuck into one and see why they're like this. For this anatomy piece, we're using a graphics card made by XFX, a Radeon HD 6870 like the one we tested way back in October 2010.

First glances don't reveal much: it's quite long at nearly 9 inches (22 cm) but most of it seems to be plastic. We can see a metal bracket, to hold it firmly in place in the computer, a big red fan, and a circuit board connector.

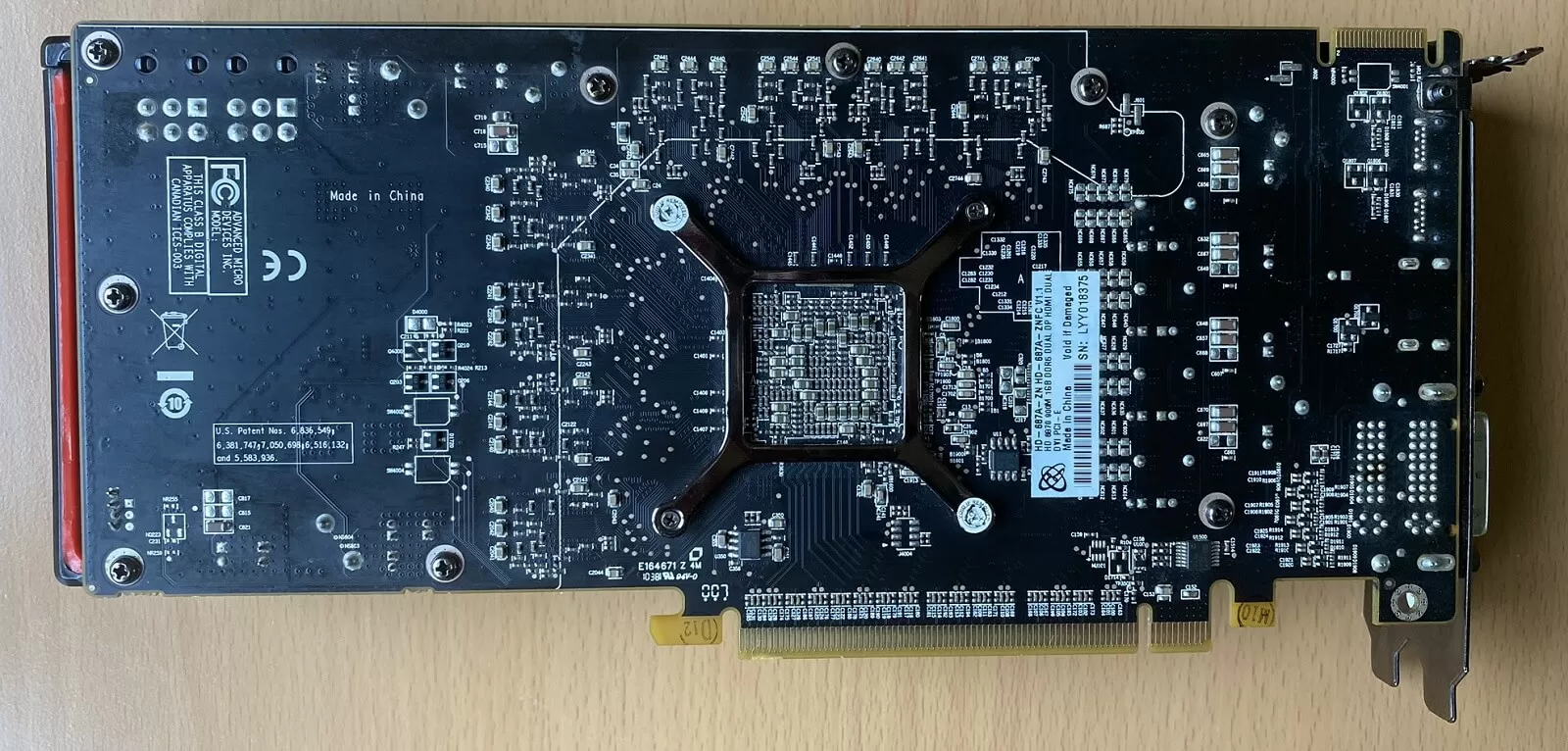

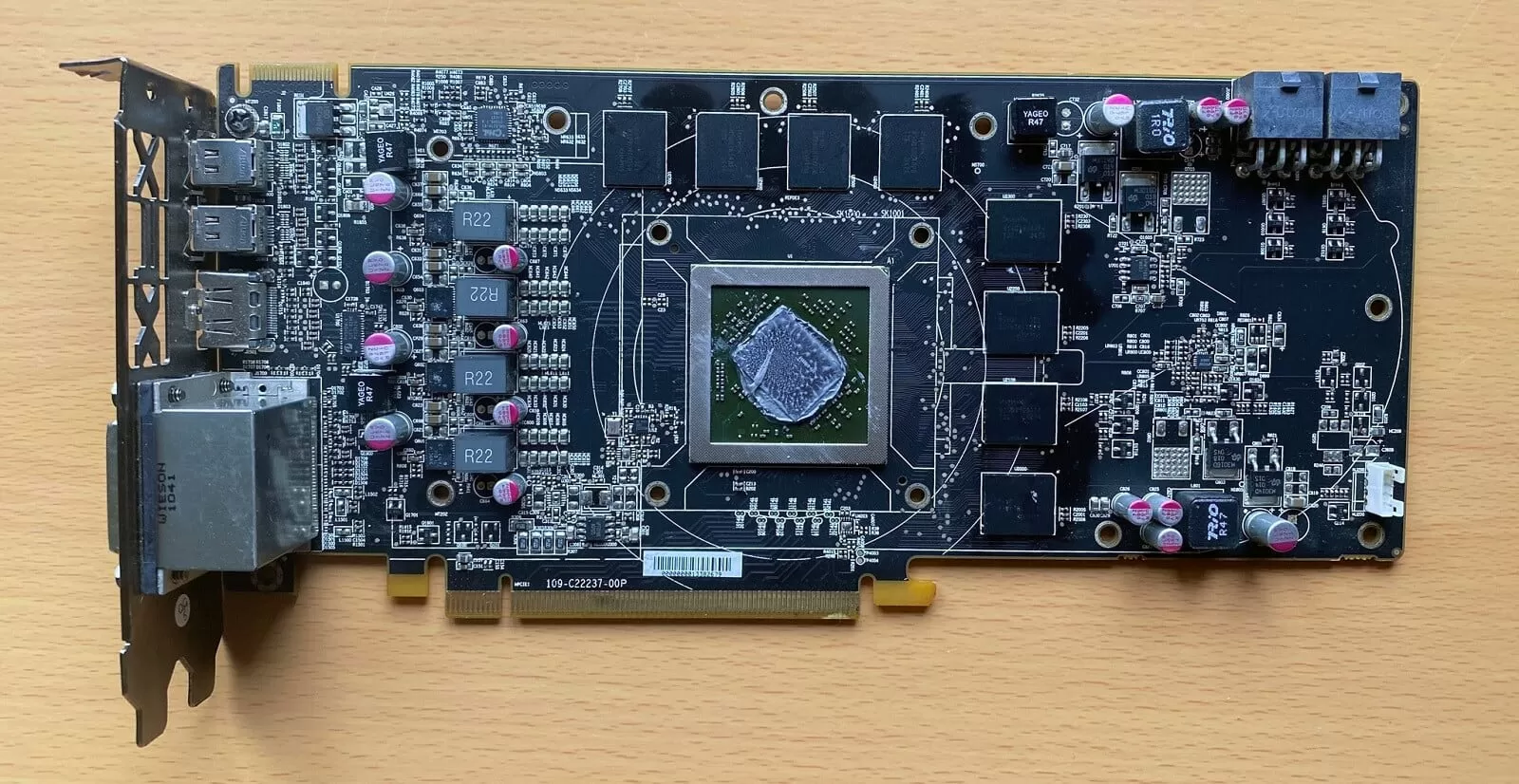

Let's flip it over and see if there's more to be seen on the other side:

The first thing you notice is another metal bracket, framing a whole host of electronic components. The rest of the circuit board is reasonably empty, but we can see lots of connecting wires coming from the middle section, so there's obviously something important there and it must be pretty complex...

It's hot as heck in here!

All chips are integrated circuits (ICs, for short) and they generate heat when they're working. Graphics cards have thousands of them packed into a small volume, which means they'll get pretty toasty if the heat isn't managed in some way.

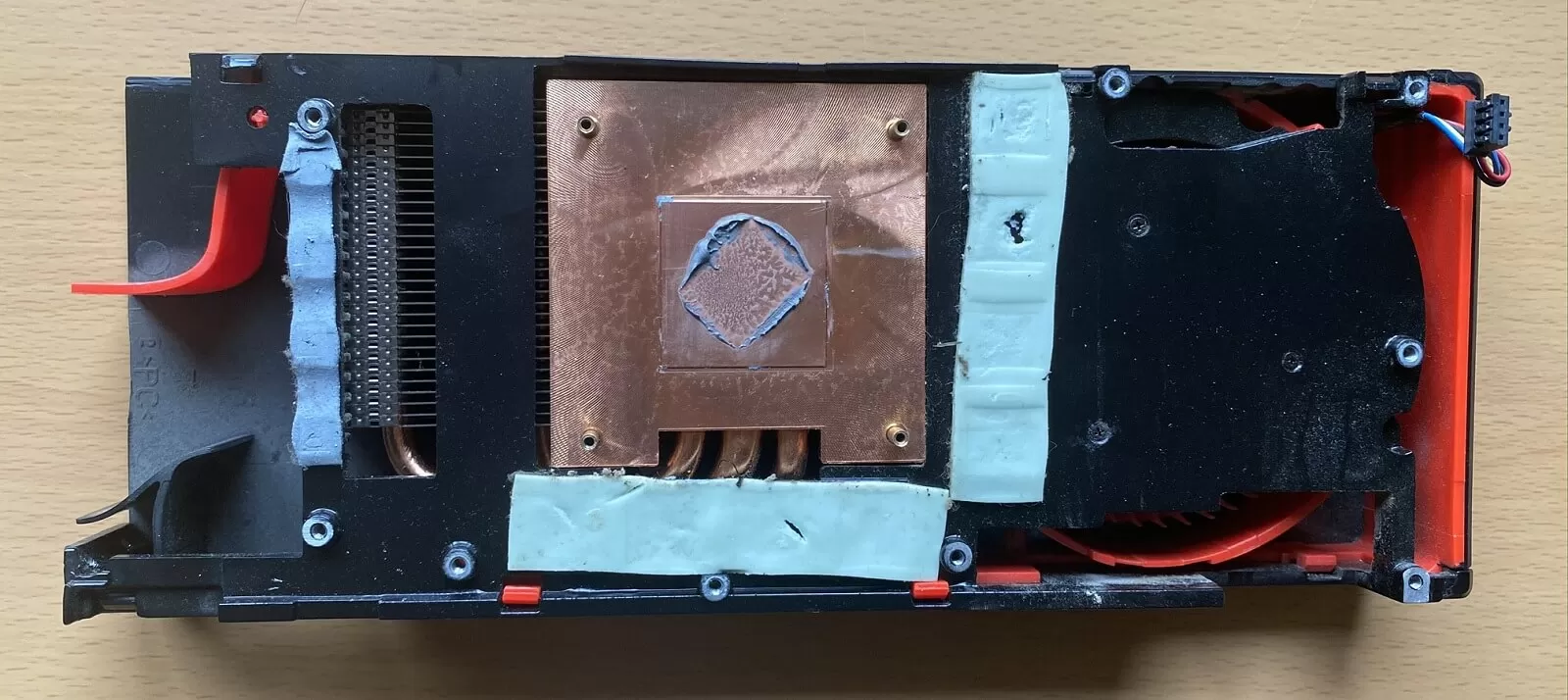

As a result, most graphics cards are hidden underneath some kind of cooling system and ours is no exception. Out with the screwdrivers and in with a blatant disregard for warranty warning stickers... this is a pretty old unit anyhow:

This cooling system is generally known as a 'blower' type: it sucks air from inside the computer case, and drives it across a large chunk of metal, before blasting it out of the case.

In the image above, we can see the metal block, and sticking to it are the remains of a runny substance generally known as thermal paste. Its job is to fill in the microscopic gaps between the graphics processor (a.k.a. the GPU) and the metal block, so that heat gets transferred more efficiently.

We can also see 3 blue strips called thermal pads. Their soft, squishy material provides better contact between some parts on the circuit board and the base of the cooler (which is also metal). Without these strips, those parts would barely, if at all, touch the metal plate and wouldn't be cooled.

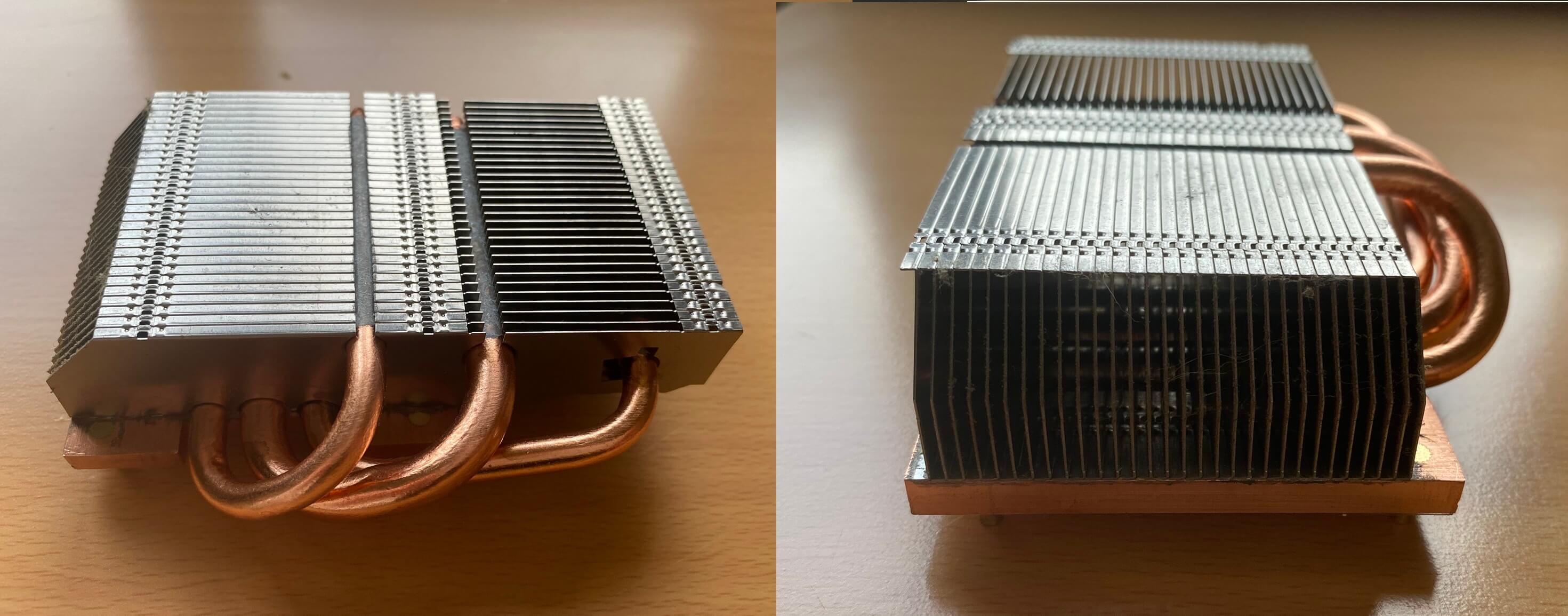

Let's take a closer look at the main chunk of metal – in our example, it's a block of copper, with 3 copper pipes coming off it, into multiple rows of aluminum fins. The name for the whole thing is a heatsink.

The pipes are hollow and sealed at both ends; inside there is a small quantity of water (in some models, it's ammonia) that absorbs heat from the copper block. Eventually, the water heats up so much that it becomes vapor and it travels away from the source of the heat, to the other end of the pipe.

There, it transfers the heat to the aluminum fins, cooling back down in the process and becoming a liquid again. The inner surface of the pipes is rough, and a capillary action (also called wicking) carries the water across this surface until it reaches the copper plate again.

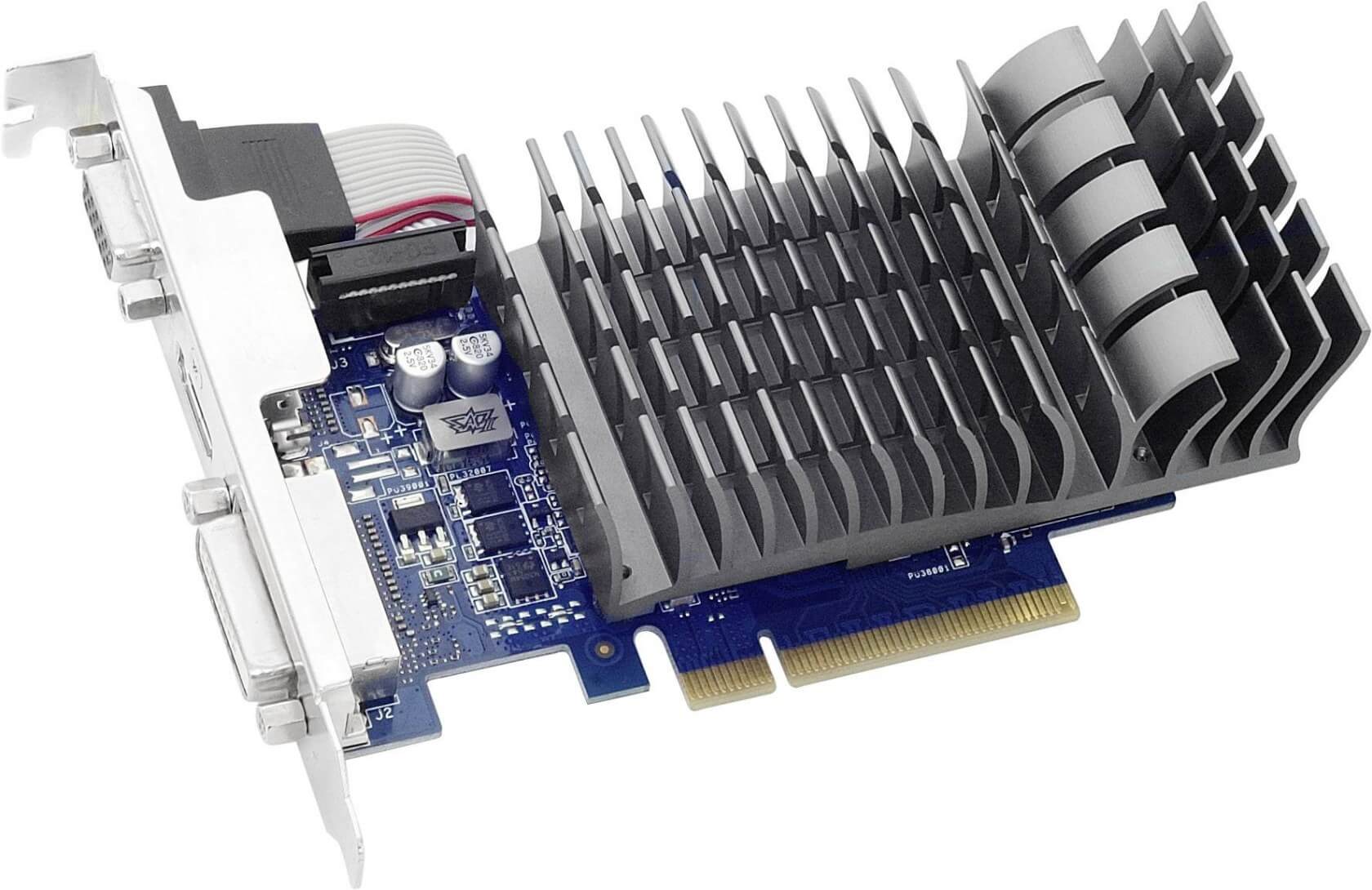

Heatpipes, as they are commonly called, don't appear on every graphics card. A budget, low-end model won't produce much heat and so won't need them. The same models often don't use copper for the heatsink, to save money, as shown in the image below.

This Asus GeForce GT 710 shows the typical approach for the low-end, low-power graphics cards market. Such products rarely use more than 20W of electrical power, and even though most of that ends up as heat, it's just not enough to bother the chip hiding under the cooler.

One problem with the blower type of cooler is that the fan used to blast air across the metal fins (and then out of the PC case) has a tough job to do, and it's usually no wider than the graphics card itself. This means it needs to spin pretty fast, and that generates lots of noise.

The common solution to that problem is the open cooler – here the fan just directs air down into the fins, and the rest of the plastic/metal surrounding the fan just lets this hot air into the PC case. The advantage of this is that the fans can be bigger and spin slower, generally resulting in a quieter graphics card. The downside? The card is bulkier and the inside temperature of the computer will be higher.

Of course, you don't have to use air to go all Netflix and chill with your graphics card. Water is really good at absorbing heat, before it rises in temperature (roughly 4 times better than air), so naturally you can buy water cooling kits and install them, or sell a kidney or two, and buy a graphics card with one already fitted.
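
To put a rough number on that "4 times better" claim, here is a minimal sketch comparing how much heat the same mass of water and air soaks up for the same temperature rise. The specific heat values are approximate textbook figures (an assumption, not something measured for this article), and the function name is purely illustrative.

```python
# Approximate specific heat capacities in kJ per kg per kelvin (textbook values,
# assumed here for illustration; they vary a little with temperature).
SPECIFIC_HEAT_KJ_PER_KG_K = {"water": 4.18, "air": 1.005}


def heat_absorbed_kj(material: str, mass_kg: float, temp_rise_k: float) -> float:
    """Heat absorbed (kJ) by a given mass for a given temperature rise: Q = m * c * dT."""
    return SPECIFIC_HEAT_KJ_PER_KG_K[material] * mass_kg * temp_rise_k


# Same mass, same temperature rise: only the material changes.
water_kj = heat_absorbed_kj("water", mass_kg=1.0, temp_rise_k=10.0)
air_kj = heat_absorbed_kj("air", mass_kg=1.0, temp_rise_k=10.0)
water_vs_air = water_kj / air_kj

print(f"Water: {water_kj:.1f} kJ, air: {air_kj:.2f} kJ, ratio: {water_vs_air:.1f}x")
```

Note that this compares equal masses; because water is also far denser than air, the gap per unit of volume is much larger still.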

The card above is EVGA's GeForce RTX 2080 Ti KiNGPiN GAMING - the blower cooler is just for the RAM and other components on the circuit board; the main processing chip is water cooled. All yours for a snip at $1,800.

You may be surprised to know that the RTX 2080 Ti has a 'normal' maximum power consumption of 250W, which is less than the RX 5700 XT we mentioned a little earlier.

Such excessive cooling systems aren't there for everyday, run-of-the-mill settings though: you'd go with one of these setups, if you want to raise the voltages and clock speeds to ludicrous levels, and spit fire like a real dragon.

The brains behind the brawn: Enter the GPU

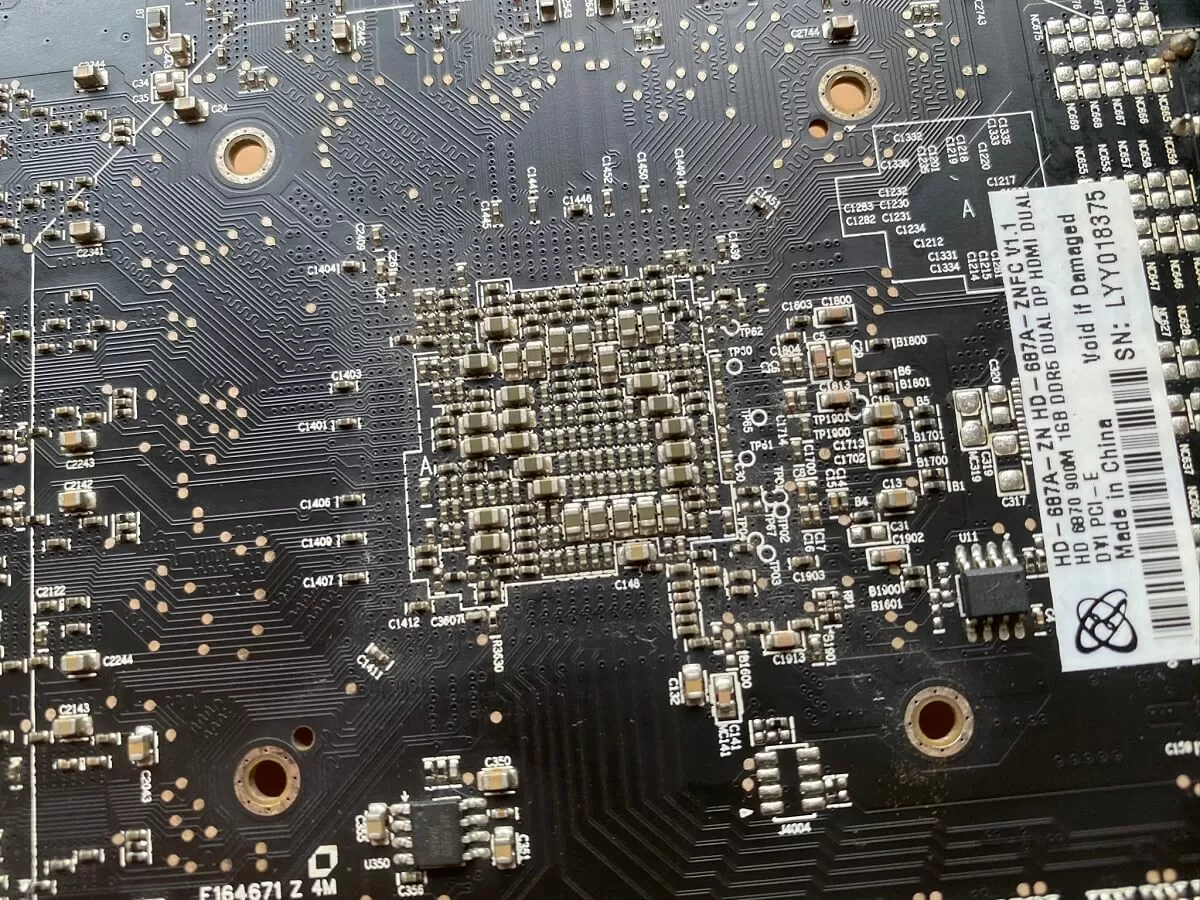

Now that we've stripped off the cooling system from our graphics card, let's see what we have: a circuit board with a chunky chip in the middle, surrounded by smaller black chips, and a plethora of electrical components everywhere else.

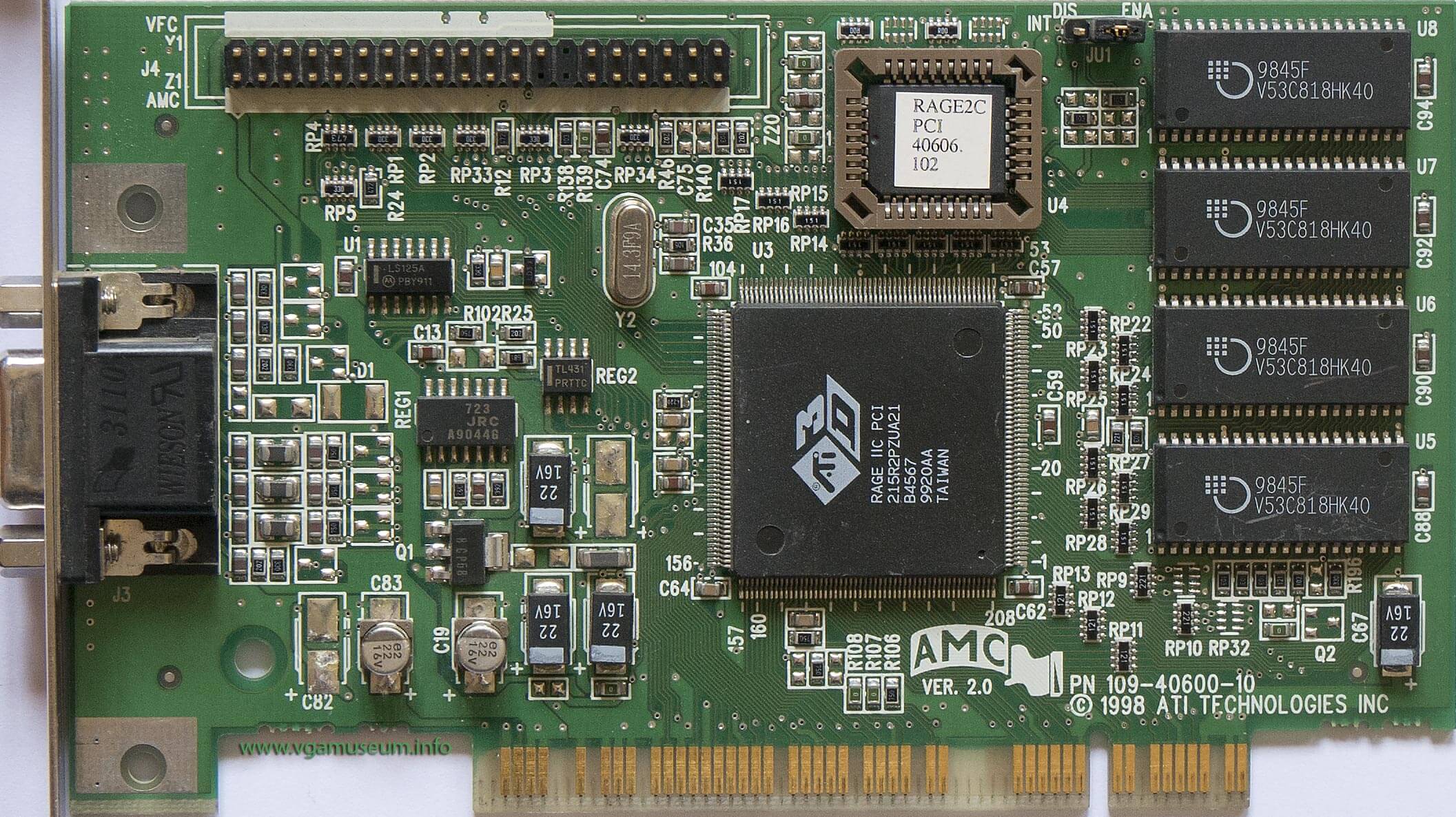

No matter what graphics card you have, they all have the same kind of parts and follow a similar layout. Even if we go all the way back to 1998, and look at an ancient ATi Technologies graphics card, you can still see roughly the same thing:

Like our disassembled HD 6870, there's a large chip in the middle, some memory, and a bunch of components to keep it all running.

The big processor goes by many names: video adapter, 2D/3D accelerator, graphics chip to name just a few. But these days, we tend to call it a graphics processing unit or GPU, for short. Those 3 letters have been in use for decades, but Nvidia will claim that they were first to use it.

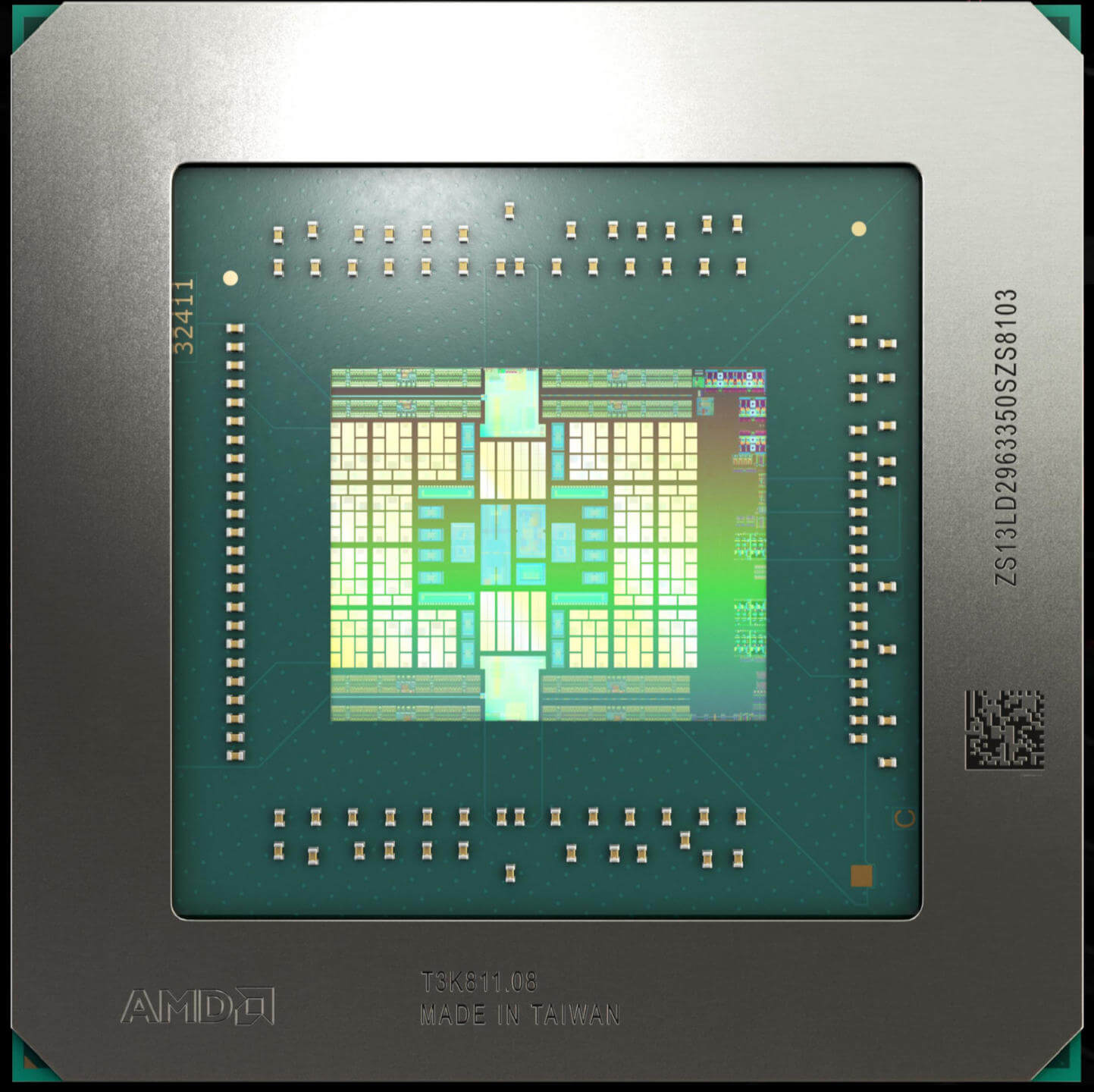

Not that it matters today. All GPUs have pretty much the same structure inside the chip we see:

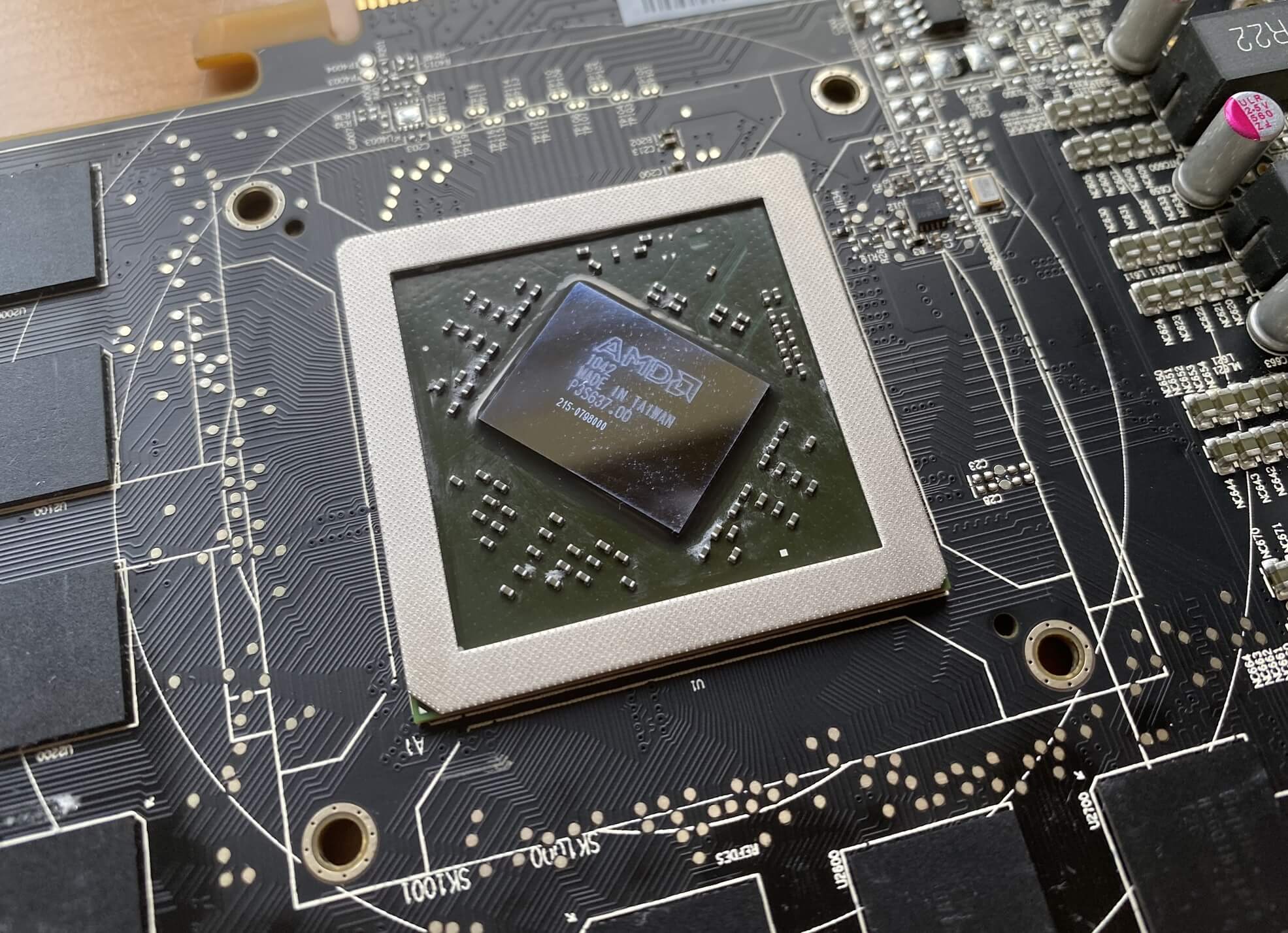

This processor was designed by AMD and manufactured by TSMC; it has codenames such as TeraScale 2 for the overall architecture, and Barts XT for the chip variant. Packed into 0.4 square inches (255 mm²) of silicon are 1.7 billion transistors.

This mind-boggling number of electronic switches makes up the different ASICs (application specific integrated circuits) that GPUs sport. Some just do set math operations, like multiplying and adding two numbers; others read values in memory and convert them into a digital signal for a monitor.

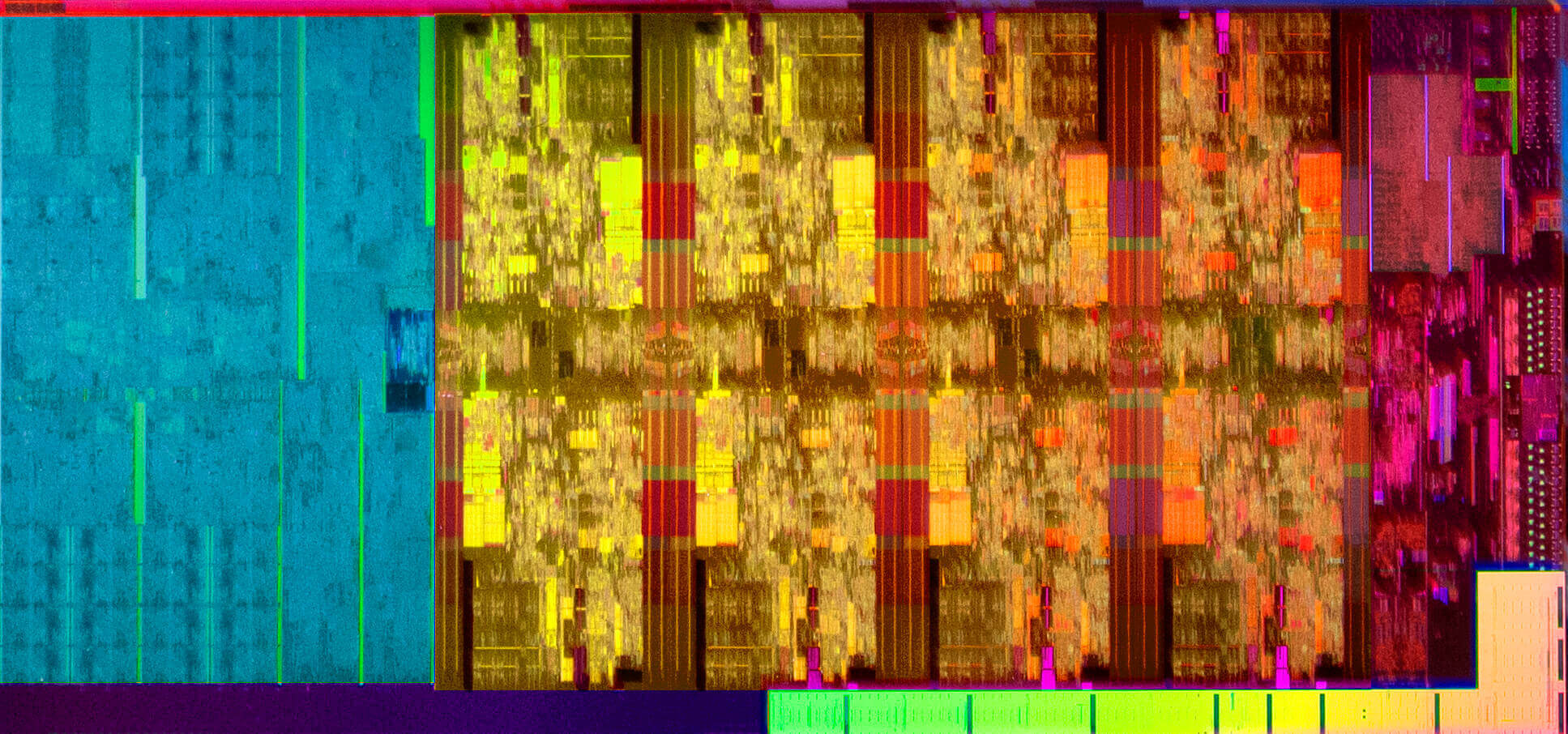

GPUs are designed to do lots of things all at the same time, so a large percentage of the chip's structure consists of repeated blocks of logic units. You can see them clearly in the following (heavily processed) image of AMD's current Navi range of GPUs:

See how there are 20 copies of the same pattern? These are the main calculating units in the chip and do the bulk of the work to make 3D graphics in games. The strip that runs roughly down the middle is mostly cache – high speed internal memory for storing instructions and data.

On the edges, top and bottom, are the ASICs that handle communications with the RAM chips on the card, and the far right of the processor houses circuits for talking to the rest of the computer and encoding/decoding video signals.

You can read up about the latest GPU designs from AMD and Nvidia, as well as Intel, if you want a better understanding of the guts of the GPU. For now, we'll just point out that if you want to play the latest games or blast through machine learning code, you're going to need a graphics processor.

But not all GPUs come on a circuit board that you jam into your desktop PC. Lots of CPUs have a little graphics processor built into them; below is an Intel press shot (hence the rather blurry nature to it) of a Core i7-9900K.

The coloring has been added to identify various areas, where the blue section on the left is the integrated GPU. As you can see it takes up roughly one third of the entire chip, but because Intel never publicly states transistor counts for their chips, it's hard to tell just how 'big' this GPU is.

We can estimate, though, and compare the largest and smallest GPUs that AMD, Intel, and Nvidia offer out of the latest architectures:

| Manufacturer | AMD | AMD | Intel | Intel | Nvidia | Nvidia |
| --- | --- | --- | --- | --- | --- | --- |
| Architecture | RDNA | RDNA | Gen 9.5 | Gen 9.5 | Turing | Turing |
| Chip/model | Navi 10 | Navi 14 | GT3e | GT1 | TU102 | TU117 |
| Transistor count (billions) | 10.3 | 6.4 | Some | Fewer | 18.6 | 4.7 |
| Die size (mm²) | 251 | 158 | Around 80? | 30 or so | 754 | 200 |

The Navi GPUs are built on a TSMC 7nm process node, compared to Intel's own 14nm and a specially refined 16nm node that TSMC offers for Nvidia (it's called 12FFN); this means you can't directly compare between them, but one thing is for sure – GPUs have lots of transistors in them!

To give a sense of perspective, that 21 year old Rage IIC processor shown earlier has 5 million transistors packed into a die size of 39 mm². AMD's smallest Navi chip has 1,280 times more transistors in an area that's only 4 times larger – that's what 2 decades of progress looks like.
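
If you'd like to check that comparison yourself, the arithmetic can be sketched in a few lines. The transistor counts and die sizes are the ones quoted in this article; the density figure is an extra illustration derived from them, and the dictionary and function names are just placeholders.

```python
# Figures quoted in the article for the two chips being compared.
RAGE_IIC = {"transistors": 5e6, "die_mm2": 39}
NAVI_14 = {"transistors": 6.4e9, "die_mm2": 158}


def density(chip: dict) -> float:
    """Transistors per square millimetre of die."""
    return chip["transistors"] / chip["die_mm2"]


transistor_ratio = NAVI_14["transistors"] / RAGE_IIC["transistors"]
area_ratio = NAVI_14["die_mm2"] / RAGE_IIC["die_mm2"]
density_ratio = density(NAVI_14) / density(RAGE_IIC)

print(f"Transistor count: {transistor_ratio:,.0f}x")
print(f"Die area:         {area_ratio:.2f}x")
print(f"Density:          {density_ratio:,.0f}x")
```

In other words, each square millimetre of the newer chip holds a few hundred times as many transistors as the old one did.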

An elephant never forgets

Like all desktop PC graphics cards, ours has a bunch of memory chips soldered onto the circuit board. This is used to store all of the graphics data needed to create the images we see in games, and is nearly always a type of DRAM designed specifically for graphics applications.

Initially called DDR SGRAM (double data rate synchronous graphics random access memory) when it appeared on the market, today it goes by the abbreviated name of GDDR.

Our particular sample has 8 Hynix H5GQ1H23AFR GDDR5 SDRAM modules, running at 1.05 GHz. This type of memory is still found on lots of cards available today, although the industry is generally moving towards the more recent version, GDDR6.

GDDR5 and 6 work in a similar way: a baseline clock (the one mentioned above) is used to time the issue of instructions and data transfers. A separate system is then used to shift bits to and from the memory modules, and this is done as a block transfer internally. In the case of GDDR5, 8 x 32 bits is processed for each read/write access, whereas GDDR6 is double that value.

This block is sent as a sequential stream, 32 bits at a time, at a rate that's controlled by a different clock system again. In GDDR5, this runs at twice the speed of the baseline clock, and data gets shifted twice per tick of this particular clock.

So in our Radeon HD 6870, with 8 Hynix chips in total, the GPU can transfer up to 2 (transfer per tick) x 2 (double data clock) x 1.05 (baseline clock) x 8 (chips) x 32 (width of stream) = 1075.2 Gbits per second or 134.4 GB/s. This calculated figure is known as the theoretical memory bandwidth of the graphics card, and generally, you want this to be as high as possible. GDDR6 has two transfer rate models, normal double rate and a quad model (double the double!).
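
The bandwidth sum above is easy to reproduce. What follows is a minimal sketch using the article's own GDDR5 figures; the function and parameter names are made up for illustration, and the defaults assume GDDR5's double-speed data clock with two transfers per tick, as described above.

```python
def gddr5_bandwidth_gbits(base_clock_ghz: float, chips: int,
                          bits_per_chip: int = 32,
                          data_clock_multiplier: int = 2,
                          transfers_per_tick: int = 2) -> float:
    """Theoretical memory bandwidth in Gbit/s for a set of GDDR5 chips."""
    return (transfers_per_tick * data_clock_multiplier
            * base_clock_ghz * chips * bits_per_chip)


# Radeon HD 6870: 8 chips on a 1.05 GHz baseline clock.
hd6870_gbits = gddr5_bandwidth_gbits(base_clock_ghz=1.05, chips=8)
hd6870_gbytes = hd6870_gbits / 8  # 8 bits per byte

print(f"{hd6870_gbits:.1f} Gbit/s = {hd6870_gbytes:.1f} GB/s")
```

Changing the clock or the number of chips shows why both matter: halve the chip count and the bandwidth halves with it.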

Not all graphics cards use GDDR5/6. Low-end models often rely on older DDR3 SDRAM (such as the passively cooled example we showed earlier), which isn't designed specifically for graphics applications. Given that the GPU on these cards will be pretty weak, there's no loss in using generic memory.

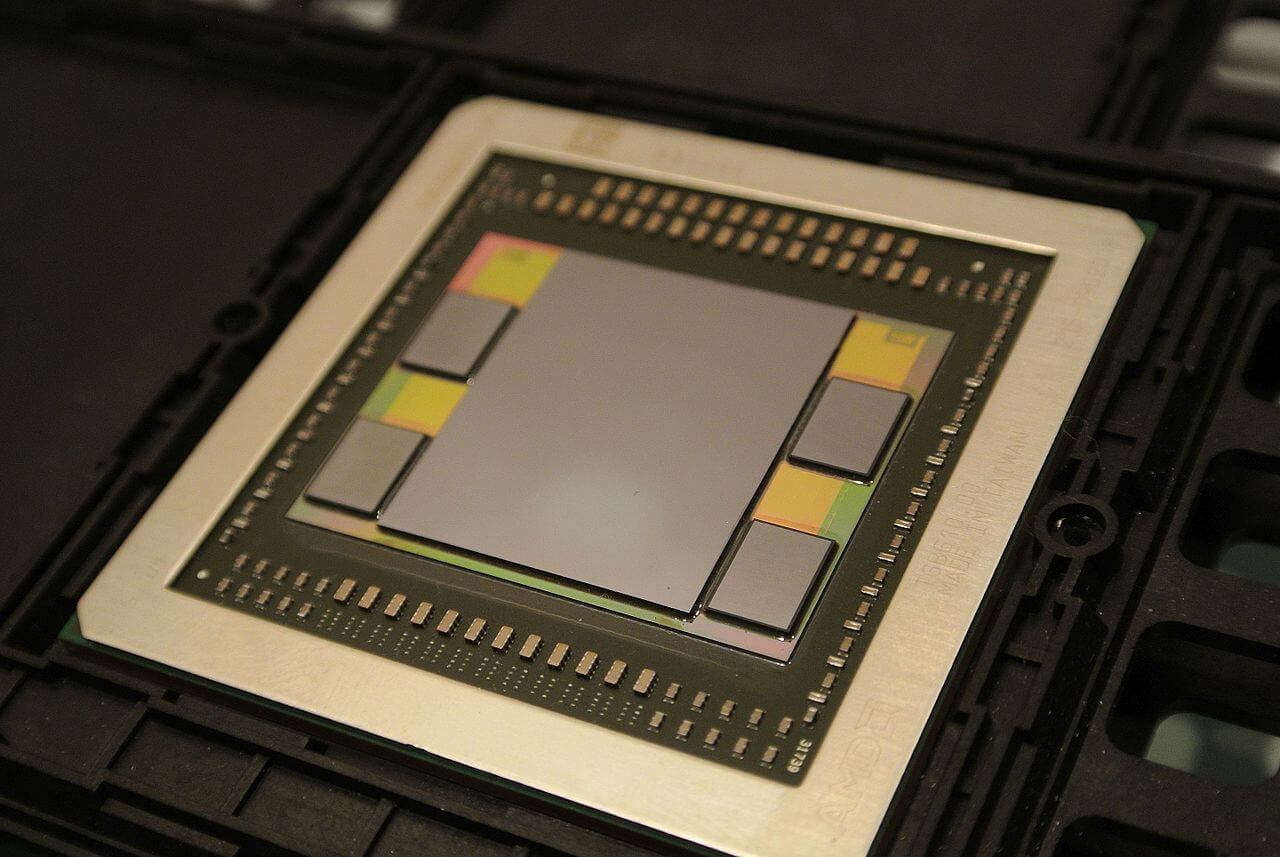

Nvidia dabbled with GDDR5X for a while – it has the same quad data rate as GDDR6, but can't be clocked as fast – and AMD has used HBM (High Bandwidth Memory) on-and-off for a couple of years, in the likes of the Radeon R9 Fury X, the Radeon VII, and others. All versions of HBM offer huge amounts of bandwidth, but it's more expensive to manufacture compared to GDDR modules.

Memory chips have to be directly wired to the GPU itself, to ensure the best possible performance, and sometimes that means the traces (the electrical wiring on the circuit board) can be a little weird looking.

Notice how some traces are straight, whereas others follow a wobbly path? This is to ensure that every electrical signal, between the GPU and the memory module, travels along a path of exactly the same length; this helps prevent anything untoward from happening.

The amount of memory on a graphics card has changed a lot since the first days of GPUs.

The ATi Rage 3D Charger we showed earlier sported just 4MB of EDO DRAM. Today, it's a thousand times greater, with 4 to 6 GB being the expected norm (top end models often double that again). Given that laptops and desktop PCs are just recently moving away from having 8 GB of RAM as standard, graphics cards are veritable elephants when it comes to their memory amounts!

Ultra fast GDDR memory is used because GPUs need to read and write lots of data, in parallel, all the time they're working; integrated GPUs often don't come with what's called local memory, and instead, have to rely on the system's RAM. Access to this is much slower than having gigabytes of GDDR5 right next to the graphics processor, but these kinds of GPUs aren't powerful enough to really require it.

I demand power and lots of it

Like any device in a computer, graphics cards need electrical power to work. The amount they need mostly depends on what GPU the card is sporting as the memory modules only need a couple of watts each.

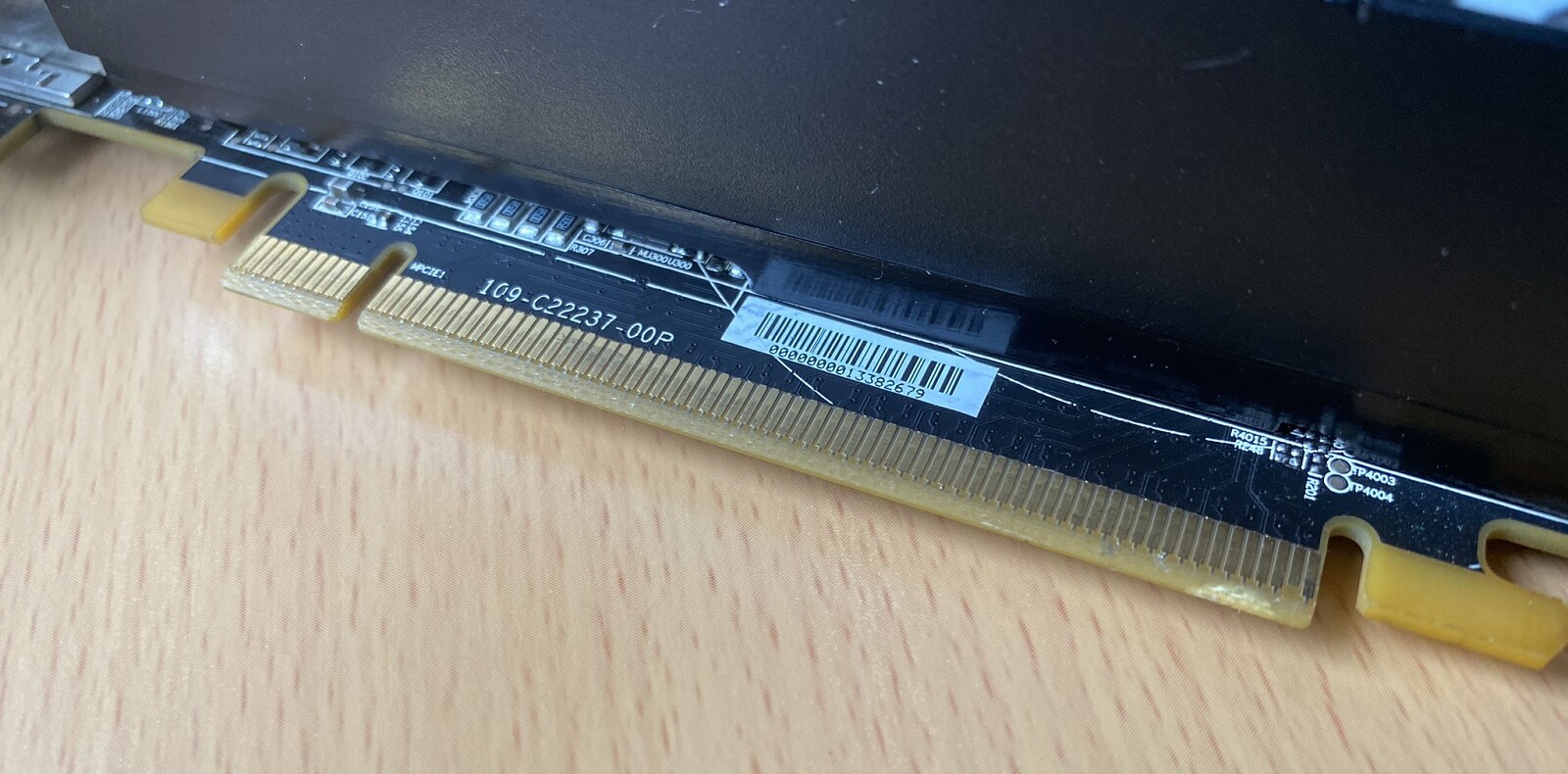

The first way that the card can get its power is from the expansion slot it's plugged into, and virtually every desktop PC today uses a PCI Express connection.

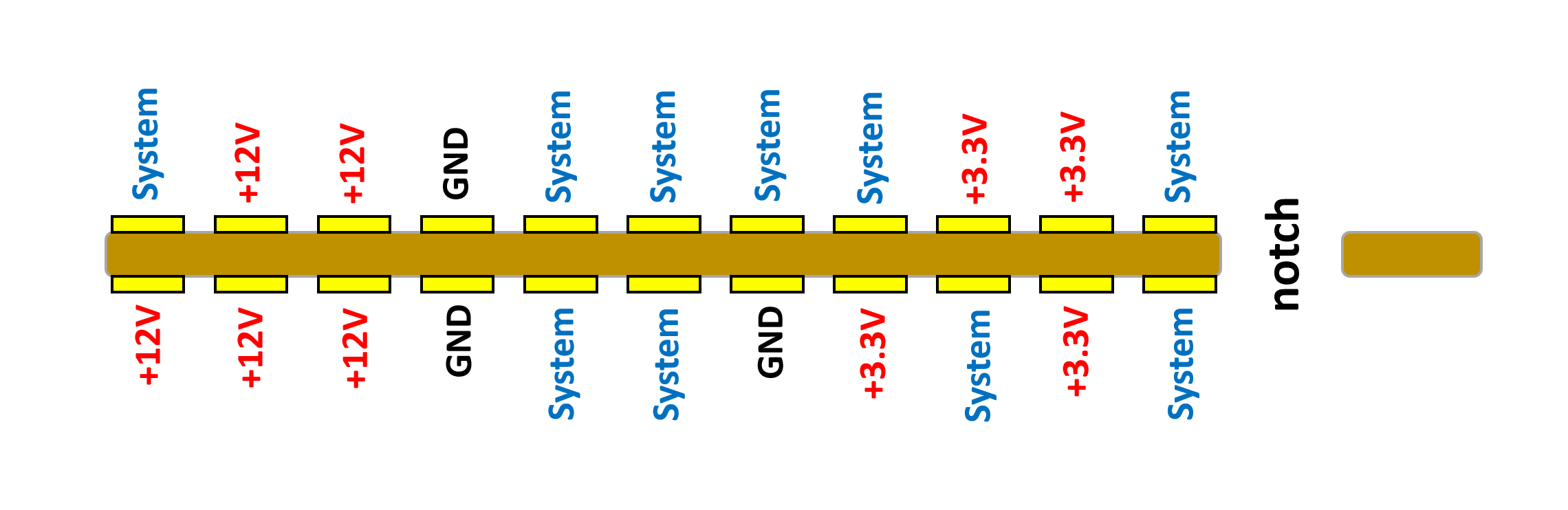

In the image above, the power is supplied by the smaller strip of pins to the left - the long strip on the right is purely for instructions and data transfer. There are 22 pins, 11 per side, in the short strip but not all of those pins are there to power the card.

Almost half the 22 pins are for general system tasks – checking the card is okay, simple powering on/off instructions, and so on. The latest PCI Express specification places limits on how much current can be drawn, in total, off the two sets of voltage lines. For modern graphics cards, it's 3 amps off the +3.3V lines and 5.5 amps off the +12V lines; this provides a total of (3 x 3.3) + (5.5 x 12) = 75.9 watts of power.

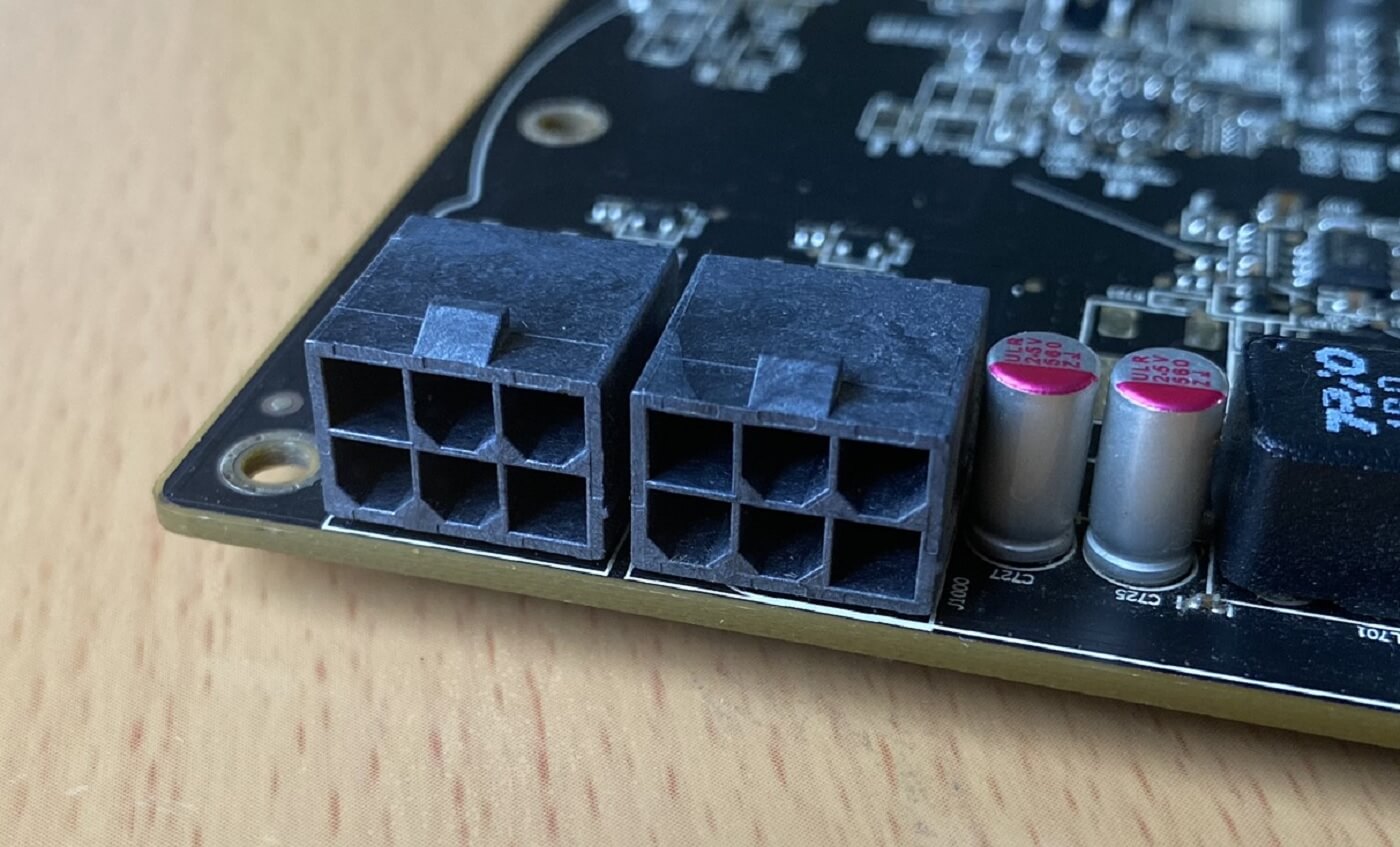

So what happens if your card needs more than that? For example, our Radeon HD 6870 sample needs at least 150W – double what we can get from the connector. In those cases, the specification provides a format that can be followed by manufacturers in the form of extra +12V lines. The format comes in two types: a 6 pin and an 8 pin connector.

Both formats provide three more +12 voltage lines; the difference between them lies in the number of ground lines: three for the 6 pin and five for the 8 pin version. The latter allows for more current to be drawn through the connector, which is why the 6 pin only provides an additional 75W of power, whereas the larger format pushes this up to 150W.

Our card has two 6 pin connectors, so with the PCI Express expansion slot, it can take up to 75 + (2 x 75) = 225 watts of power; more than enough for this model's needs.
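
Here's a small sketch of that power budget, using only the limits quoted in this article. The names are illustrative, and the slot is rounded down to 75W when totting up the card's budget, just as in the sum above.

```python
# Slot limits quoted in the article: supply voltage -> maximum current in amps.
SLOT_LIMITS = {3.3: 3.0, 12.0: 5.5}

# Extra power each auxiliary connector type adds, in watts (article figures).
AUX_CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}


def slot_power_watts(limits: dict = SLOT_LIMITS) -> float:
    """Power available from the slot: sum of volts x amps over each supply rail."""
    return sum(volts * amps for volts, amps in limits.items())


def card_power_budget(connectors: list, slot_watts: int = 75) -> int:
    """Total budget: the (rounded) slot power plus every auxiliary connector."""
    return slot_watts + sum(AUX_CONNECTOR_WATTS[name] for name in connectors)


print(f"Slot alone: {slot_power_watts():.1f} W")
print(f"HD 6870 (two 6-pin): {card_power_budget(['6-pin', '6-pin'])} W")
```

Swap in `['8-pin', '8-pin']` and the budget rises to 375W, which is the territory of the heavily overclocked cards.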

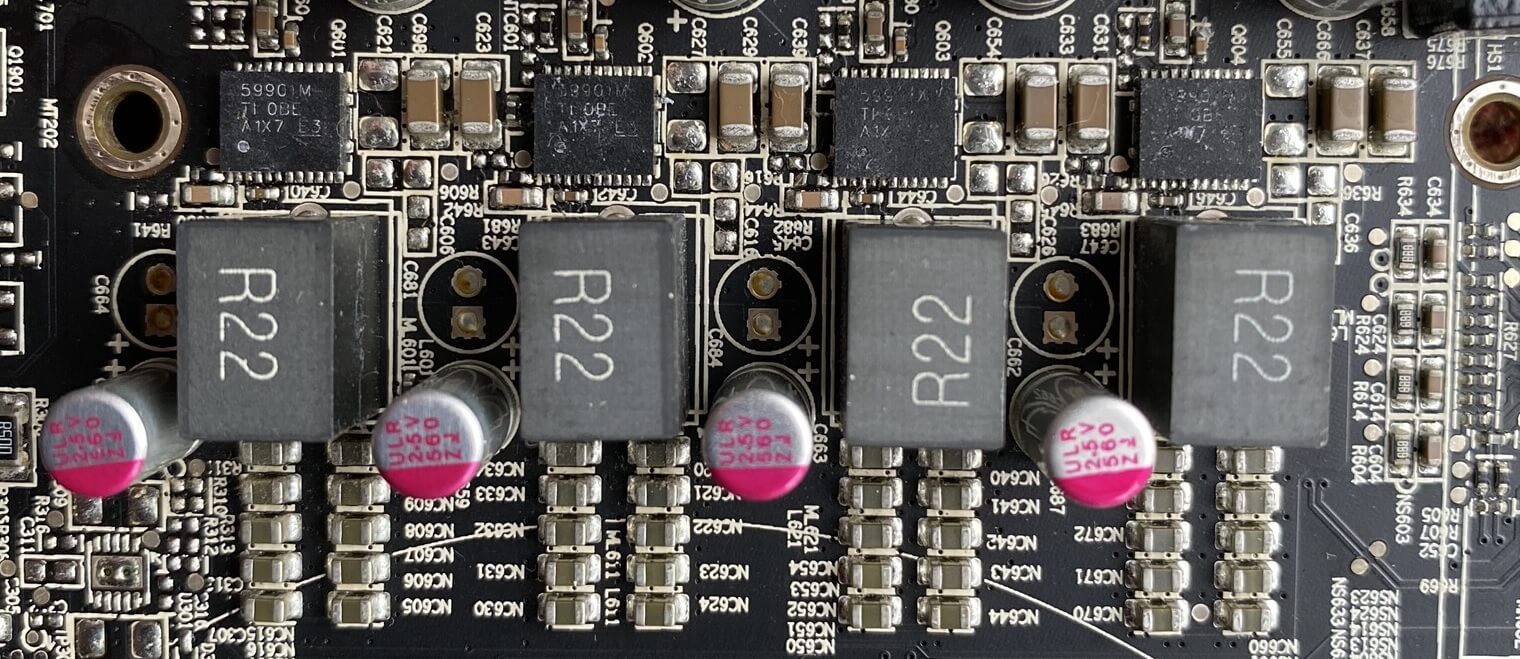

The next problem to manage is the fact that the GPU and memory chips don't run on +3.3 or +12 voltages: the GDDR5 chips are 1.35V and the AMD GPU requires 1.172V. That means the supply voltages need to be dropped and carefully regulated, and this task is handled by voltage regulator modules (VRMs, for short).

We saw similar VRMs when we looked at motherboards and power supply units, and they're the standard mechanism used today. They also get a bit toasty when they're working away, which is why they're also (or hopefully also!) buried underneath a heatsink to keep them within their operating temperature range.

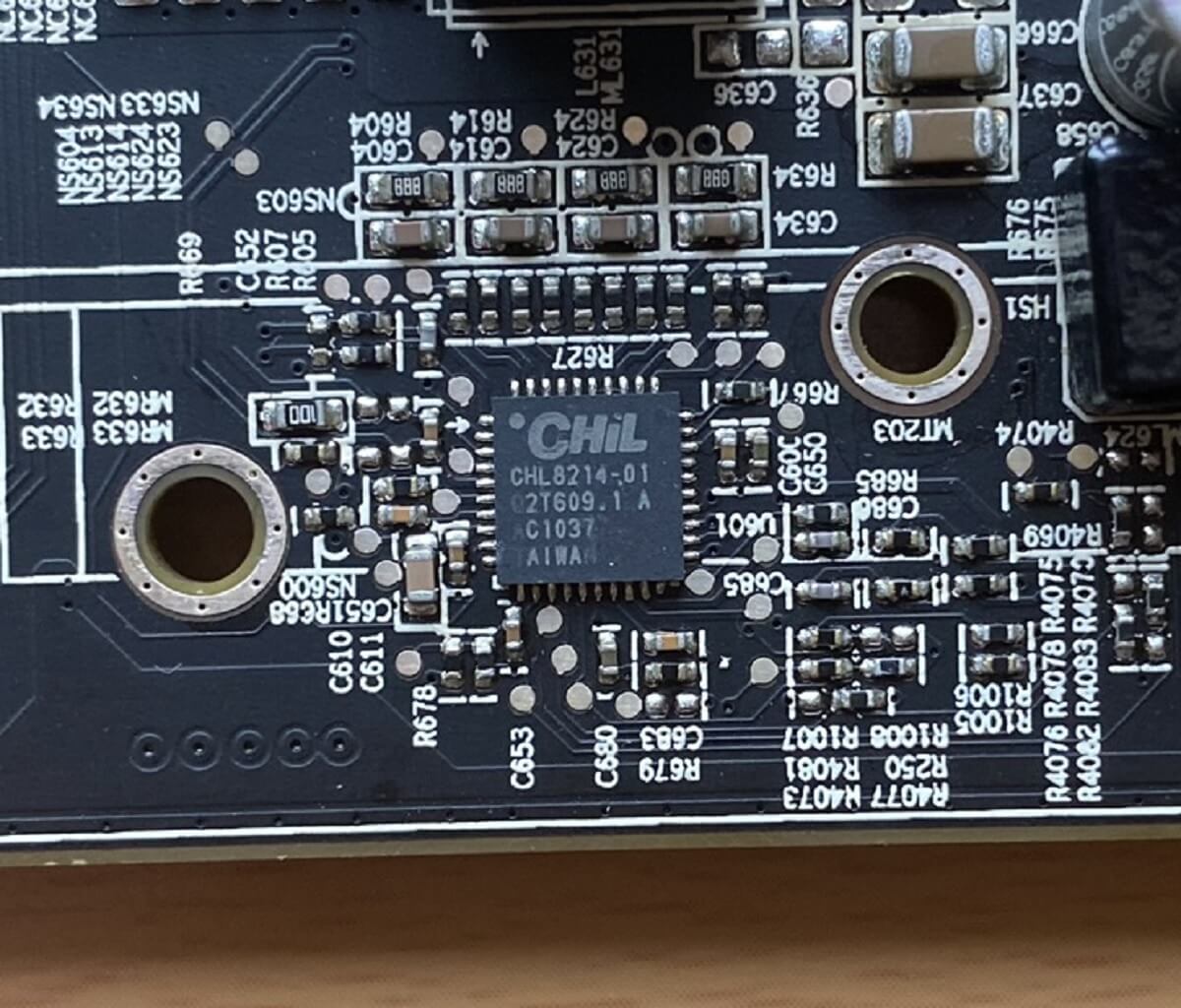

Just like with motherboards and CPUs, the number and type (read: quality) of the VRMs has an impact on how stable the GPU is, when it's being overclocked. Included in this, is the quality of the overall power controlling chip.

This decade-old Radeon HD 6870 uses a CHIL CHL821401, which is a 4+1 phase PWM controller (so it can handle the 4 VRMs seen above, plus another voltage regulation system); it can also keep track of temperatures and how much current is being drawn. It can set the VRMs to change between one of three different voltages, a feature that's used heavily in modern GPUs as they switch to a lower voltage when idling, to save power and keep heat/noise down.

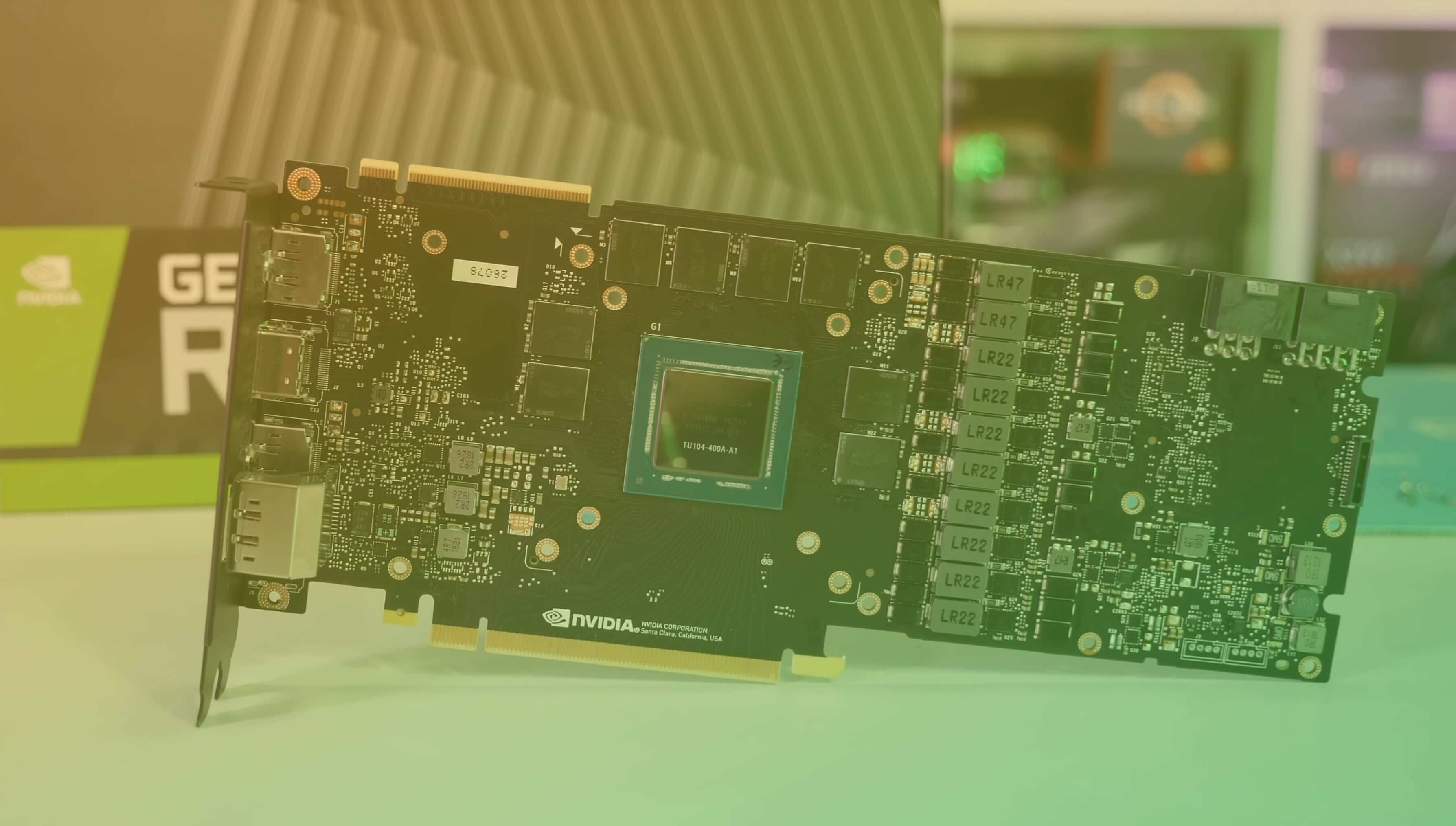

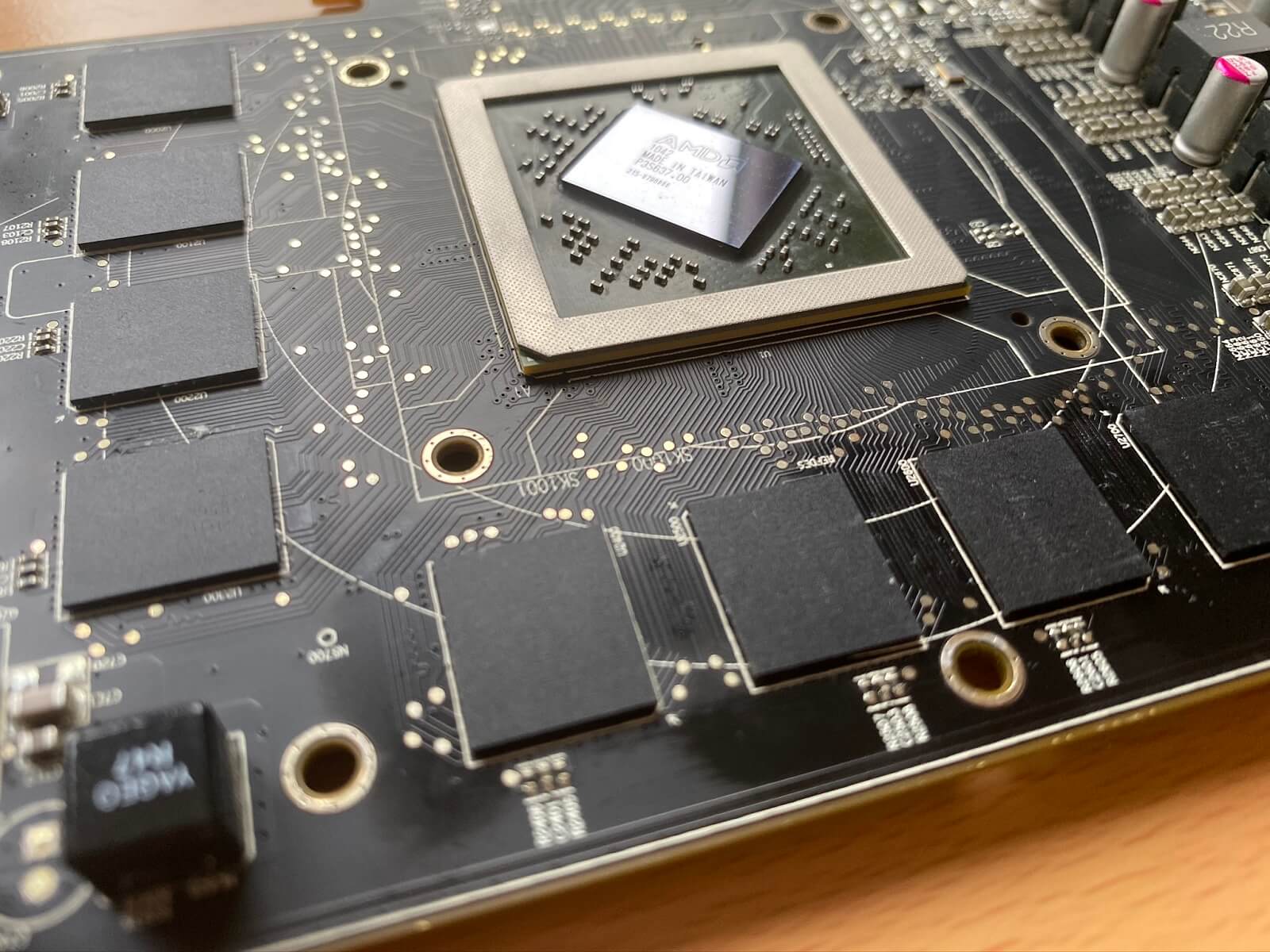

The more power the GPU requires, the more VRMs and the better the PWM controller will need to be. For example, in our review of Nvidia's GeForce RTX 2080, we pulled off the cooling system to look at the circuit board and the components:

You can see a battery of ten VRMs running down the full height of the card, to the right of the GPU; the likes of EVGA offer graphics cards with nearly double that number! These cards are, of course, designed to be heavily overclocked and when they are, the power consumption of the graphics card will be well over 300W.

Fortunately, not every graphics card out there has insane energy requirements. The best mid-range products out there right now are between 125 and 175W, which is roughly in the same ballpark as our Radeon HD 6870.

The ins and outs of a graphics card

So far we've looked at the electronic components on the circuit board and how power is supplied to them. Time to see how instructions and data are sent to the GPU, and how it then sends its results off to a monitor. In other words, the input/output (I/O) connections.

Instructions and data are sent and received via the PCI Express connector we saw before. It's all done through the pins on the long section of the slot connector. All of the transmit pins are on one side, and the receive pins on the other.

PCI Express communication is done using differential signalling, so two pins are used together, sending 1 bit of data per clock cycle. For every pair of data pins, there are a further 2 pins for grounding, so a full set comprises 2 send pins, 2 receive pins, and 4 ground pins. Together, they're collectively called a lane.

The number of lanes used by the device is indicated by the label x1, x4, x8, or x16 - referring to 1 lane, 4 lanes, etc. Almost all graphics cards use 16 lanes (i.e. PCI Express x16), which means the interface can send/receive up to 16 bits per cycle.

The signal sending the data runs at 4 GHz in a PCI Express 3.0 connector, but data can be timed for sending twice per cycle. This gives a theoretical data bandwidth of 4 GHz x 2 per cycle x 16 bits = 128 Gbits/sec or 16 GB/sec each way.

It's actually less than that, because PCI Express signalling uses an encoding system that sacrifices some of the bits (around 1.5%) for signal quality. The very latest version of the PCI Express specification, 4.0, doubles this to 32 GB/s; there are two more specifications in development that each double it again.
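
To see what that encoding overhead does to the headline figure, here is a rough sketch. It assumes the 128b/130b scheme used by PCI Express 3.0 (130 bits on the wire for every 128 bits of data, which matches the roughly 1.5% mentioned above), and it models version 4.0 simply as a doubled signal rate. The function name and parameters are illustrative only.

```python
def pcie_bandwidth_gbytes(signal_ghz: float, lanes: int = 16,
                          transfers_per_cycle: int = 2,
                          encoding_efficiency: float = 1.0) -> float:
    """One-way bandwidth in GB/s for a PCI Express link."""
    gbits = signal_ghz * transfers_per_cycle * lanes * encoding_efficiency
    return gbits / 8  # 8 bits per byte


# Assumption: 128b/130b encoding, i.e. 128 useful bits out of every 130 sent.
EFFICIENCY_128B_130B = 128 / 130

raw_gen3 = pcie_bandwidth_gbytes(4)
usable_gen3 = pcie_bandwidth_gbytes(4, encoding_efficiency=EFFICIENCY_128B_130B)
raw_gen4 = pcie_bandwidth_gbytes(8)  # assumption: signal rate doubled for 4.0

print(f"PCIe 3.0 x16: {raw_gen3:.1f} GB/s raw, {usable_gen3:.2f} GB/s after encoding")
print(f"PCIe 4.0 x16: {raw_gen4:.1f} GB/s raw")
```

The loss is small, but it's why you'll often see an x16 PCI Express 3.0 slot quoted at a little under 16 GB/s in each direction.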

Some graphics cards, like our HD 6870, have an additional connector, as shown below:

This lets you couple two or more cards together, so that they can share data quickly when working as a multi-GPU system. Each vendor has their own name for it: AMD calls theirs CrossFire, Nvidia does SLI. The former doesn't use this connector anymore, and instead just does everything through the PCI Express slot.

If you look back up this page at the image for the GeForce RTX 2080, you'll see that there are two such multi-GPU connections – this is Nvidia's newer version, called NVLink. It's mostly targeted towards professional graphics and compute cards, rather than general gaming ones. Despite the efforts of AMD and Nvidia to get multi-GPU systems into mainstream use, it's not been overly successful, and these days you're just better off getting the best single GPU that you can afford.

Every desktop PC graphics card will also have at least one method to connect a monitor to it, but most have several. They do this because monitors come in all kinds of models and budgets, which means the card will need to support as many of those as possible.

Our stripped down Radeon has 5 such outputs:

- 2x mini DisplayPort 1.2 sockets

- 1x HDMI 1.4a socket

- 1x DVI-D (digital only) dual-link socket

- 1x DVI-I (digital and analogue) dual-link socket

Have a look:

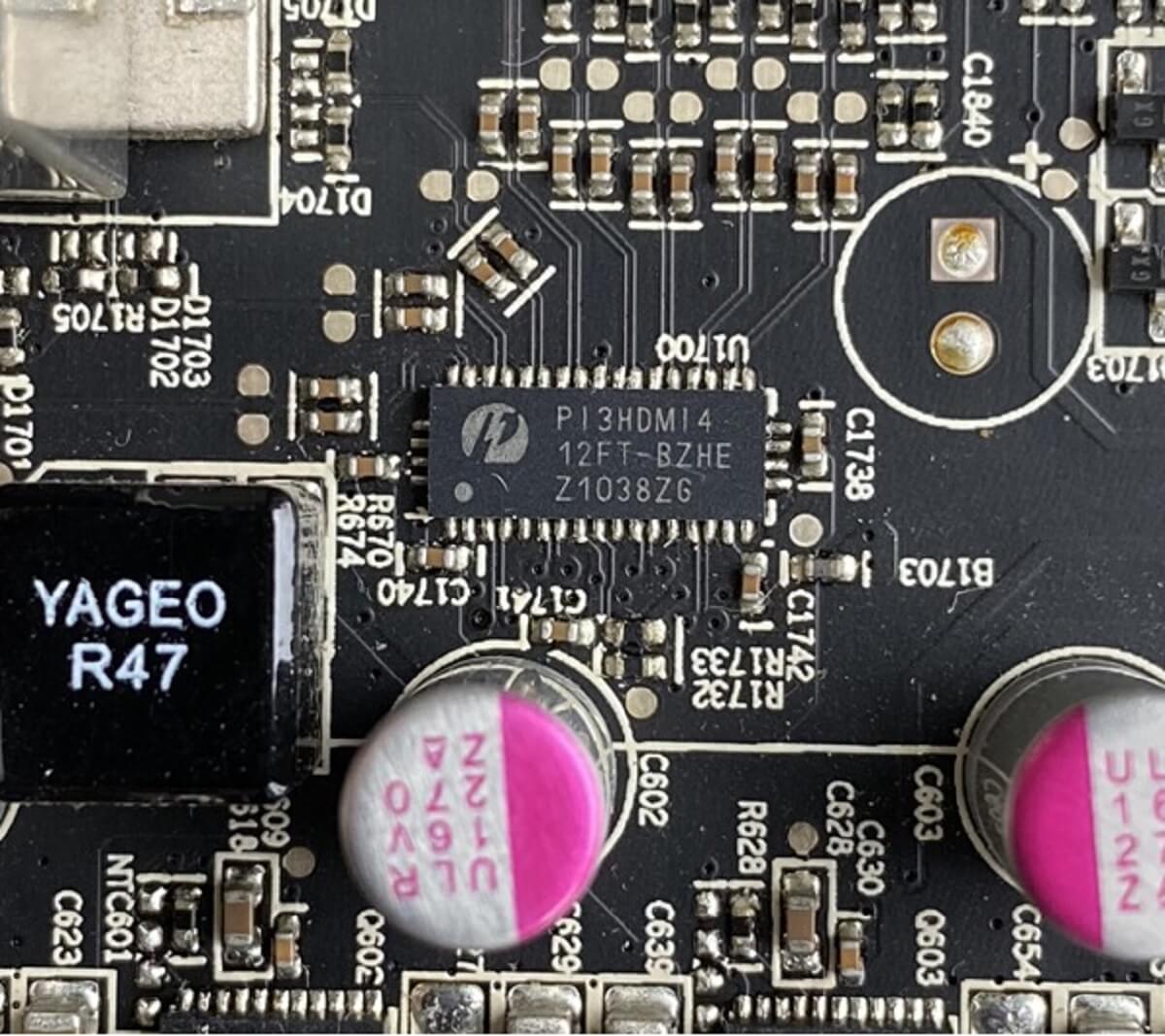

As well as supporting as many monitor types as possible, having multiple output sockets also means you can attach more than one display to the graphics card. Some of this monitor juggling gets handled by the GPU itself, but sometimes an extra chip is needed to do a bit of signal sleight of hand. Our card has a Pericom P13HDMI4 HDMI switch to do some of this:

That tiny little chip converts HDMI data, which contains digital video and audio streams, into the picture-only signals for the DVI sockets. The specification of these connections is a lot more important these days, because of changes in how we're using our monitors.

The rise of esports has the monitor industry steering towards ever higher refresh rates (the number of times per second that the monitor redraws the image on the screen) – 10 years ago, the vast majority of monitors were capped at 60 or 75 Hz. Today, you can get 1080p screens that can run at 240 Hz.

Modern graphics cards are also very powerful, and many are capable of running at high resolutions, such as 1440p and 4K, or offer high dynamic range (HDR) outputs. And to add to the long list of demands, lots of screens support variable refresh rate (VRR) technology; a system which prevents the monitor from trying to update its image, while the graphics card is still drawing it.

There are open and proprietary formats for VRR:

To be able to use these features (e.g. high resolutions, high and variable refresh rates, HDR), there are 3 questions that have to be answered: does the monitor support it? Does the GPU support it? Does the graphics card use output connectors capable of doing it?

If you head off and buy one of the latest cards from AMD (Navi) or Nvidia (Turing), here's what output systems they support:

| Manufacturer | AMD | Nvidia |
| --- | --- | --- |
| DVI | Dual-Link Digital; 1600p @ 60Hz, 1080p @ 144Hz | Dual-Link Digital; 1600p @ 60Hz, 1080p @ 144Hz |
| DisplayPort | 1.4a (DSC 1.2); 4K HDR @ 240Hz, 8K HDR @ 60Hz | 1.4a (DSC 1.2); 4K HDR @ 144Hz, 8K HDR @ 60Hz |
| HDMI | 2.0b; 4K @ 60Hz, 1080p @ 240Hz | 2.0b; 4K @ 60Hz, 1080p @ 240Hz |
| VRR | DP Adaptive-sync, HDMI VRR, FreeSync | DP Adaptive-sync, HDMI VRR, G-Sync |

The above numbers don't paint the full picture, though. Sure, you could fire 4K frames at over 200 Hz through the DisplayPort connection to the monitor, but it won't be with the raw data in the graphics card's RAM. The output can only send so many bits per second, and for really high resolutions and refresh rates, it's not enough.

Fortunately, data compression or chroma subsampling (a process where the amount of color information sent is reduced) can be used to ease the load on the display system. This is where slight differences in graphics card models can make a difference: one might use a standard compression system, a proprietary one or a variant of chroma subsampling.
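
To get a feel for why the raw data doesn't fit, here is a back-of-the-envelope sketch. It rests on several assumptions that aren't from this article: a DisplayPort 1.4 link carrying roughly 25.92 Gbit/s of video data (HBR3 over 4 lanes, after encoding overhead), 10 bits per color sample for HDR, and no allowance for blanking intervals, so real requirements are somewhat higher. The names are illustrative.

```python
# Assumption: DisplayPort 1.4 (HBR3, 4 lanes) carries about 25.92 Gbit/s of
# video data once its 8b/10b encoding overhead is removed.
DP14_PAYLOAD_GBITS = 25.92

# Average number of samples sent per pixel for common chroma formats:
# 4:4:4 sends full color, 4:2:2 and 4:2:0 share color samples between pixels.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def video_rate_gbits(width: int, height: int, refresh_hz: int,
                     subsampling: str = "4:4:4", bits_per_sample: int = 10) -> float:
    """Raw pixel data rate in Gbit/s, ignoring blanking intervals."""
    bits_per_pixel = bits_per_sample * SAMPLES_PER_PIXEL[subsampling]
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(width: int, height: int, refresh_hz: int, subsampling: str = "4:4:4",
         link_gbits: float = DP14_PAYLOAD_GBITS) -> bool:
    """True if the uncompressed stream fits within the link's payload rate."""
    return video_rate_gbits(width, height, refresh_hz, subsampling) <= link_gbits


for refresh in (144, 240):
    for mode in SAMPLES_PER_PIXEL:
        rate = video_rate_gbits(3840, 2160, refresh, mode)
        verdict = "fits" if fits(3840, 2160, refresh, mode) else "too much"
        print(f"4K @ {refresh} Hz, {mode}: {rate:5.1f} Gbit/s -> {verdict}")
```

Under these assumptions, 4K at 144 Hz only squeezes through with subsampled color, and 4K at 240 Hz doesn't fit even at 4:2:0, which is where compression such as the DSC listed in the table comes in.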

20 years ago, there were big differences in the video outputs of graphics cards, and you were often forced to make the choice of sacrificing quality for speed. Not so today... phew!

All that for just graphics?

It might seem just a wee bit odd that so much complexity and cost is needed to just draw the images we see, when we're playing Call of Mario: Deathduty Battleyard. Go back to near the start of this article and look again at that ATi 3D Charger graphics card. That GPU could spit out up to 1 million triangles and color in 25 million pixels in a second. For a similarly priced card today, those numbers jump by a factor of over 2,000.

Do we really need that kind of performance? The answer is yes: partly because today's gamers have much higher expectations of graphics, but also because making realistic 3D visuals, in real-time, is super hard. So when you're hacking away at dragons, zooming through Eau Rouge into Raidillon, or frantically coping with a Zerg Rush, just spare a few seconds for your graphics card – you're asking a lot of it!

But GPUs can do more than just process images. The past few years have seen an explosion in the use of these processors in supercomputers, for complex machine learning and artificial intelligence. Cryptomining became insanely popular in 2018, and graphics cards were ideal for such work.

The buzzword here is compute – a field normally in the domain of the CPU, where the GPU has now taken over in specific areas that require massive parallel calculations, all done using high precision data values. Both AMD and Nvidia make products aimed at this market, and they're nearly always sporting the biggest and most expensive graphics processors.

Speaking of which, have you ever wondered what the guts of a $2,500 graphics card look like? Gamers Nexus must have been curious, too, because they went ahead and pulled one apart:

If you're tempted to do the same with yours, please be careful! Don't forget that all those electronic components are quite fragile and we doubt any retailer will replace it, if you mess things up.

So whether your graphics card cost $20, $200, or $2,000, they're all fundamentally the same design: a highly specialized processor, on a circuit board filled with supporting chips and other electronic components. Compared to our dissection of a motherboard and power supply unit, there's less stuff to pull apart, but what's there is pretty awesome.

And so we say goodbye to the remains of our Radeon HD 6870 graphics card. The bits will go in a box and get stored in a cupboard. Somewhat of an ignominious end to such a marvel of computing technology, that wowed us by making incredible images, all made possible through the use of billions of microscopic transistors.

If you've got any questions about GPUs in general or the one you're using right now in your computer, then send them our way in the comments section below. Stay tuned for even more anatomy series features.