Today we're going to compare the Ryzen 7 3700X and Core i5-10600K in a number of games, but we'll be doing so with low or esports-level quality settings in games such as Fortnite, World of Tanks, Rocket League, and about half a dozen other competitive titles.

This is quite different to the game tests featured in our CPU reviews where we test with high and ultra quality presets in modern and often very demanding titles such as Shadow of the Tomb Raider, Battlefield V and Red Dead Redemption 2, to name just a few. And although in these tests we use a GeForce RTX 2080 Ti graphics card and test at 1080p as well as 1440p, in many instances performance is still more limited by the GPU.

We're not talking about a hard GPU bottleneck here, but the GPU is often the more performance-limiting component. Of course, that doesn't matter too much because using an RTX 2080 Ti at 1080p is already a bit unrealistic, as most gamers with a $1,000+ graphics card would play these AAA titles at 1440p using high quality settings.

When reviewing the Core i5-10600K, for example, we noted that while the Ryzen 7 3700X was 5% slower at 1080p, that margin might grow slightly in more CPU-limited gaming scenarios, for instance when playing Fortnite with competitive quality settings. This led us to recommend the Intel processor for those who must have every last frame possible, but for everyone else the 3700X is simply the better-value, more well-rounded product.

Still, it would be good to know just how much more performance you can get from the Intel processor and whether that extra performance is something you can actually take advantage of. For this test we're looking at out-of-the-box performance with XMP loaded using CL14 DDR4-3200 memory, but we're not power limiting the 10600K. In our review we found that a 5.1 GHz overclock could boost gaming performance by as much as 12%, so keep that in mind.

We also considered adding in overclocking results for both CPUs, though ultimately we didn't think that would make this article any better given what we've found in the past when tuning AMD and Intel CPUs for maximum gaming performance. Intel CPUs typically enjoy more headroom when it comes to core frequency, but AMD CPUs benefit massively from memory tuning, and in the end that results in similar performance gains.

For testing we've lined up 9 games, all tested at 1080p and 1440p using low quality settings with both an RTX 2080 Ti as well as an RTX 2060 Super.

Benchmark Time!

Starting with Battlefield V at 1080p using the low quality preset, we've manually removed the 200 fps cap, setting it to 600 fps. The results with the RTX 2080 Ti look as though they are still capped but we assure you they are not. The 10600K peaked at 211 fps in this test.

Interestingly, both CPUs topped out just shy of 200 fps with the RTX 2080 Ti, and we see similar results when using the RTX 2060 Super. Standing still and looking at the sky, for example, would see the frame rate spike to around 250 fps, but when actually executing the benchmark pass, frame rates rarely went over 200 fps.

Despite dropping down to the RTX 2060 Super we see very similar results, which is interesting because the 2080 Ti is ~50% faster than the 2060 Super with the ultra quality preset, though that figure is based on our 1440p data, which we'll look at now.

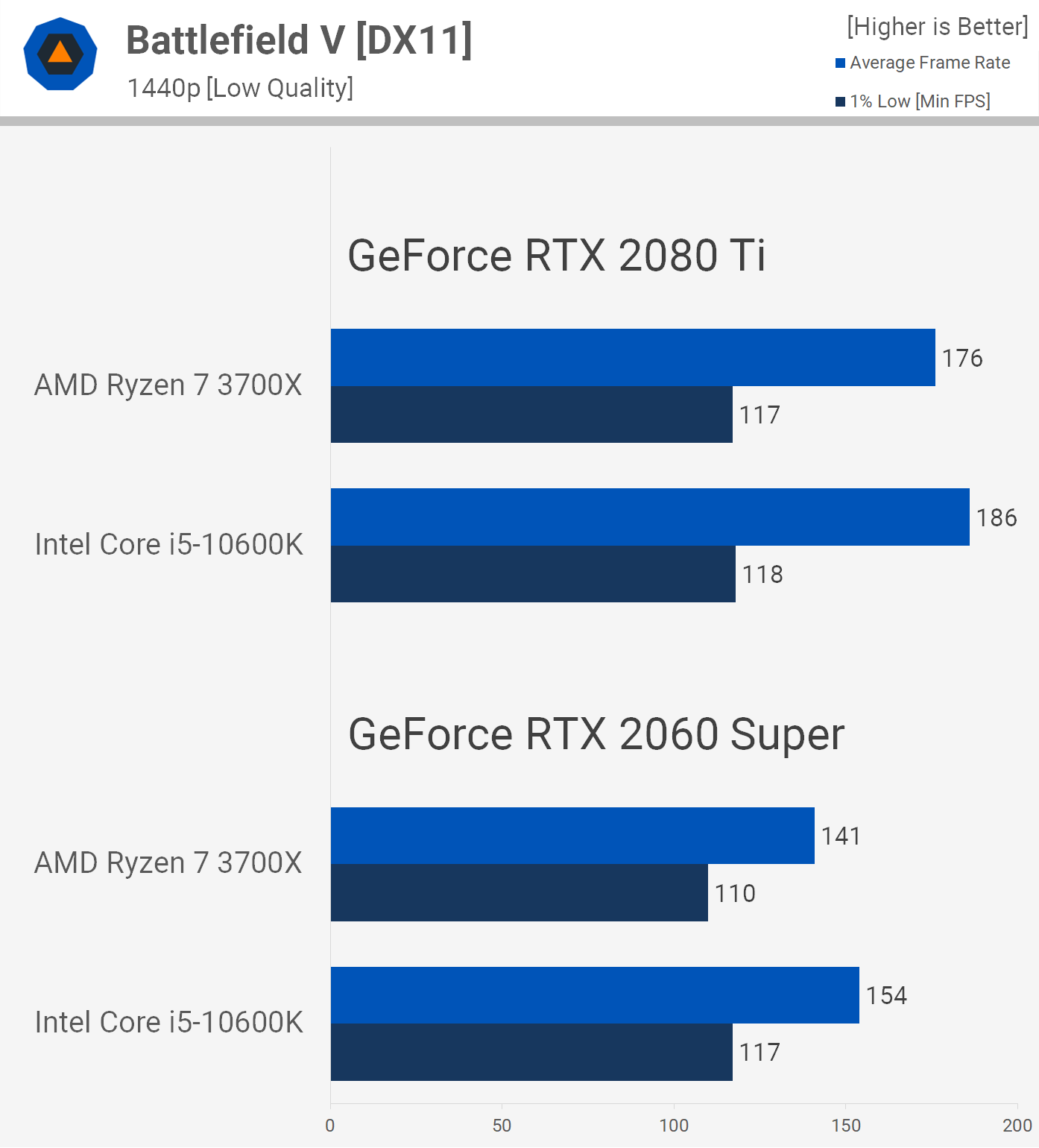

Moving to 1440p and here we start to see some separation. These results really do hint at a frame cap for the 1080p testing but that wasn't the case. Anyway, at 1440p the 10600K was up to 6% faster than the 3700X with the RTX 2080 Ti installed, though the 1% low performance was virtually identical.
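Since the 1% low figure comes up in almost every chart, here's a minimal Python sketch of how an average frame rate and a 1% low can be derived from a benchmark pass's frame times. It assumes the 1% low is the frame rate over the slowest 1% of frames; that definition is an assumption for illustration, as some tools instead report the 99th-percentile frame time converted to fps. The function names are hypothetical.

```python
from statistics import mean


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by elapsed seconds for the whole pass."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Frame rate over the slowest 1% of frames (assumed definition)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # keep at least one frame
    return 1000.0 / mean(slowest_first[:count])


# Hypothetical pass: 99 frames at 5 ms plus one 10 ms stutter.
frame_times = [5.0] * 99 + [10.0]
print(round(average_fps(frame_times), 1))   # 198.0
print(one_percent_low_fps(frame_times))     # 100.0
```

A single stutter barely moves the average but halves the 1% low, which is why the two metrics can tell different stories in the results that follow.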

What's really surprising is that the margin grew with the slower RTX 2060 Super: here the 10600K was 9% faster. You'd expect the margin to shrink with the slower GPU, but that wasn't the case in Battlefield V using the DX11 API. These are some strange and unexpected results for sure, but it's worth noting that while the 3700X was slower, it would be near impossible to tell the difference between it and the 10600K in practice.

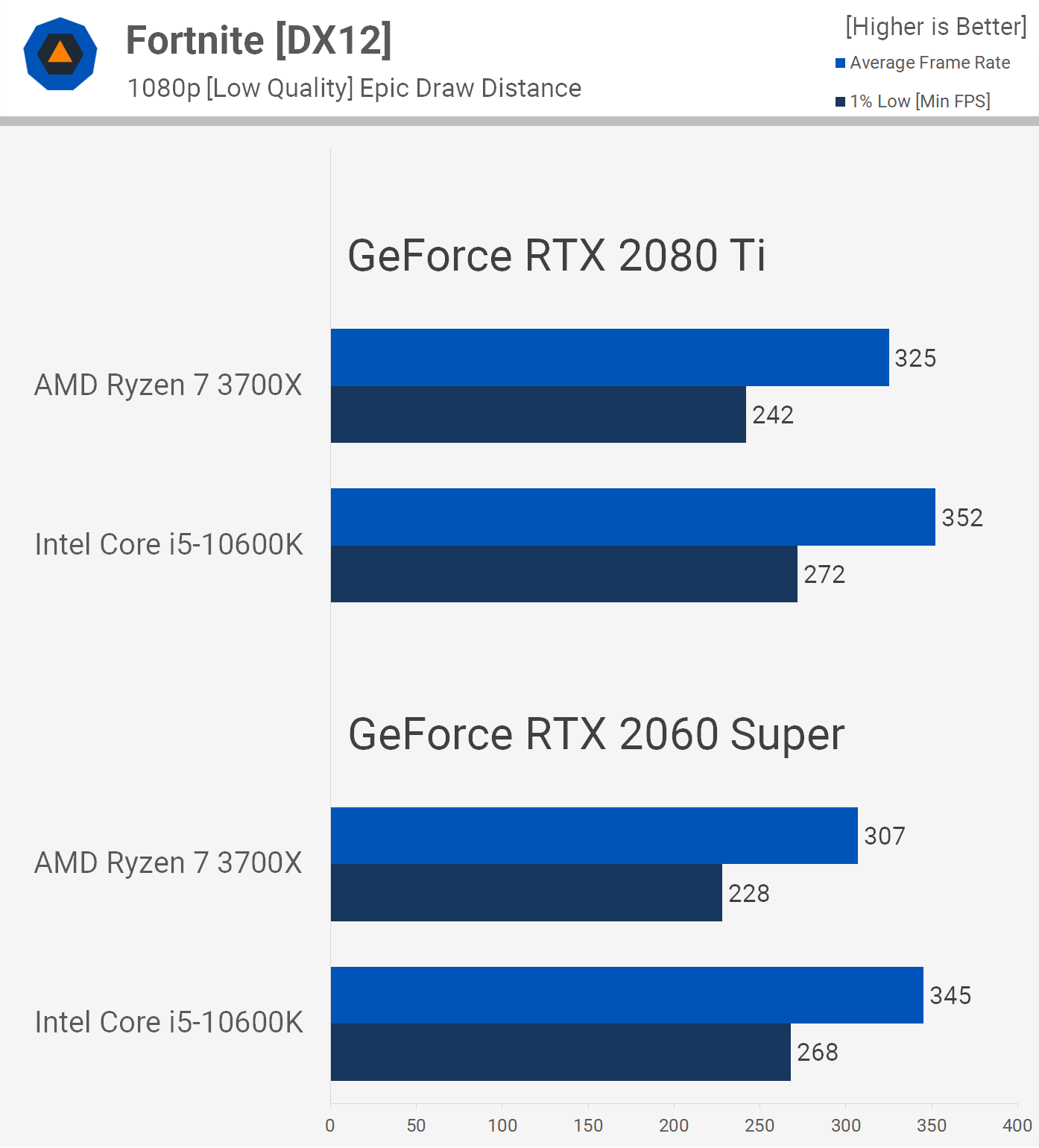

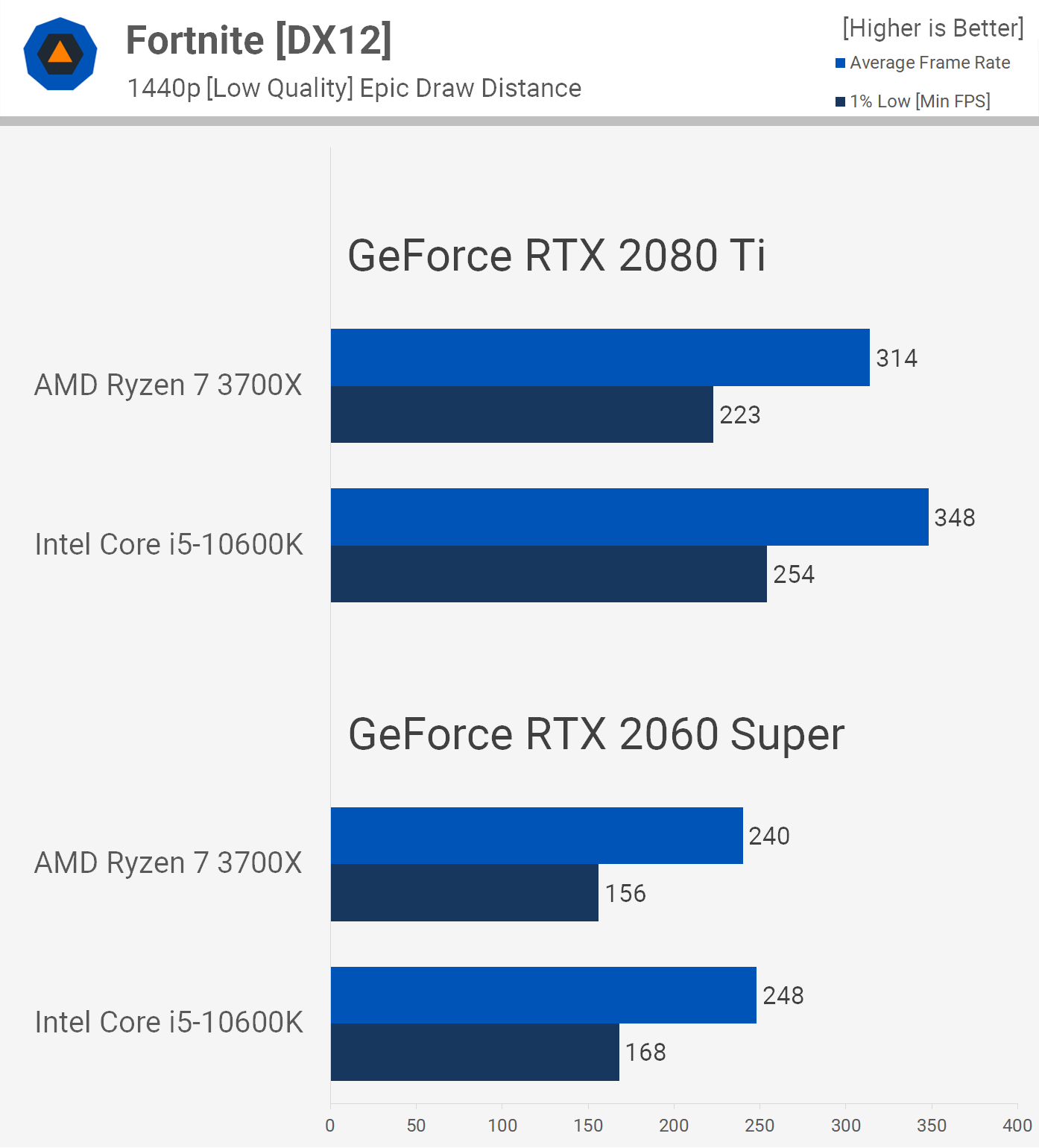

Next up we have Fortnite and for testing this title we set everything to low with the exception of draw distance which was maxed out using the 'epic' quality setting. We're also using the DX12 API in a 20 v 20 Team Rumble before the first circle closes.

Fortnite is a very difficult game to test with, at least if the goal is to report accurate data, because Epic's weekly game updates break older replays, forcing me to create a new, similar replay. Worse, we've noticed that even when recreating the exact same benchmark pass, the results can be quite different from the previous replay depending on where the other players are and what they're doing.

In the past when using the epic quality settings this difference wasn't that noticeable, but with the low quality settings the results could vary by up to 100 fps, which is insane. So for this testing we created a replay on the Intel test system, copied it over to the AMD system and ran all the benchmarks on the same day, allowing us to provide an apples-to-apples comparison.

Here we see that on average the 10600K was 8% faster than the 3700X, hitting 352 fps. The Intel processor was also 12% faster when comparing the 1% low data, though it's worth noting that both pushed well over 200 fps at all times in our benchmark. We are also heavily CPU bound in both cases: dropping down to the RTX 2060 Super reduces frame rates by a slim 5%.
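To be clear about what "X% faster" means in these results, the minimal sketch below treats it as the ratio of two frame rates minus one. The helper names are hypothetical. Note the asymmetry: if A is 8% faster than B, B is only about 7.4% slower than A.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster result A is than result B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


def percent_slower(fps_b: float, fps_a: float) -> float:
    """How much slower result B is than result A, in percent."""
    return (1.0 - fps_b / fps_a) * 100.0


def implied_baseline(fps_a: float, lead_pct: float) -> float:
    """Frame rate of B implied by A's frame rate and A's lead over B."""
    return fps_a / (1.0 + lead_pct / 100.0)


print(round(percent_slower(100.0, 108.0), 1))  # 7.4
print(round(implied_baseline(352.0, 8.0)))     # 326
```

Plugging in the Fortnite figures, `implied_baseline(352, 8)` puts the 3700X at roughly 326 fps, though because the 8% figure is rounded, that's only an approximation.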

It's also very interesting to note that the margin between the 10600K and 3700X actually grows slightly with the slower GPU, now the Intel processor is 12% faster when comparing the average frame rate and 18% faster when comparing 1% low data. That's a significant delta, though we're still looking at well over 200 fps at all times with the Ryzen processor, so for those of you rocking a 144 Hz monitor, that's probably not going to be an issue for you.

Things change quite a bit at 1440p and now we're starting to see GPU bound results when using the RTX 2060 Super.

The 10600K was still up to 14% faster with the RTX 2080 Ti and despite both CPUs delivering well over 200 fps at all times, that is a decent performance advantage for Intel. For those using slower GPUs such as the 2060 Super you're looking at up to an 8% margin in favor of Intel and looking at the numbers I think it's fair to say even pro gamers wouldn't be able to tell the difference.

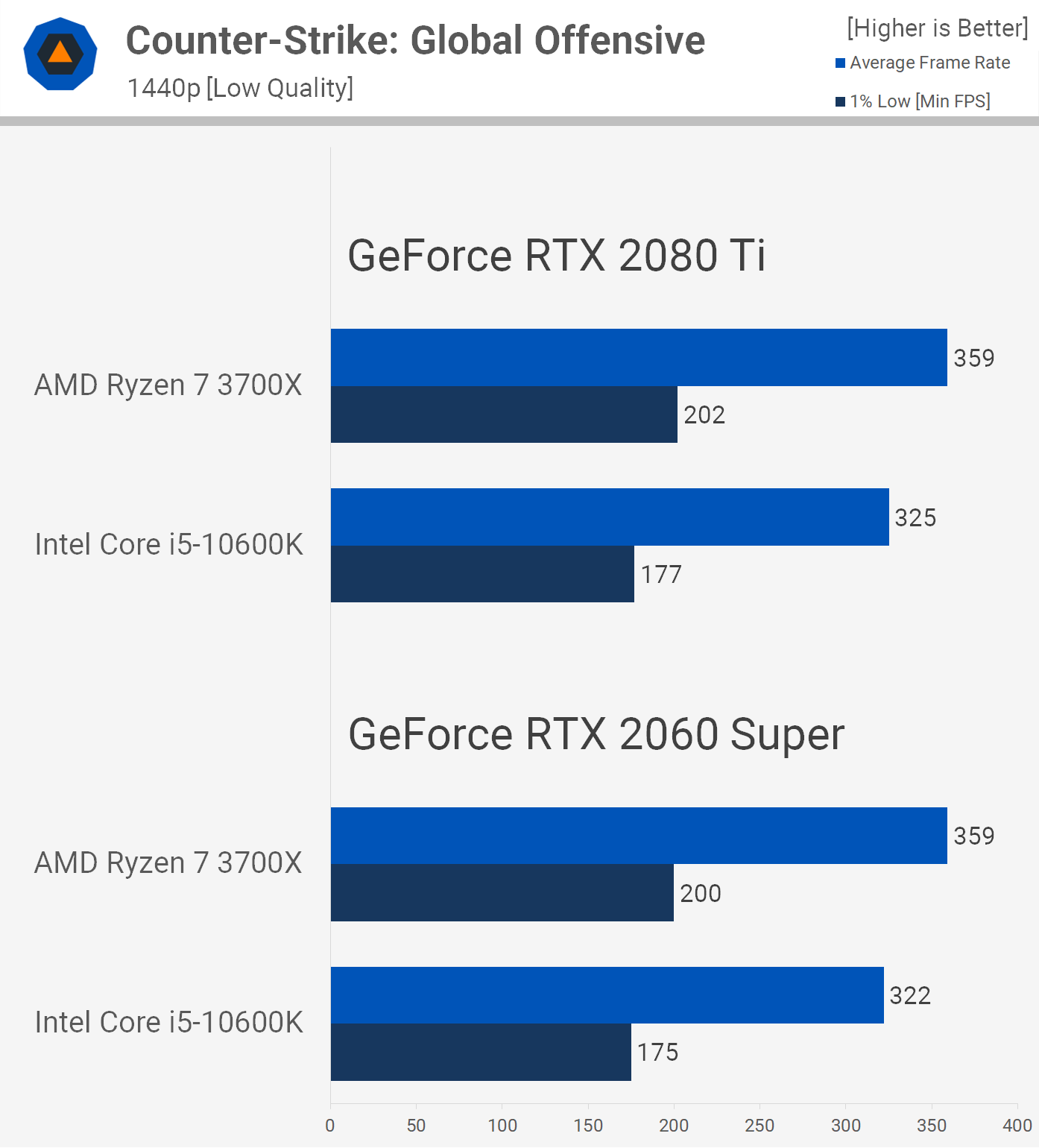

Moving on to Counter-Strike: Global Offensive, for this test we're not using the Steam Workshop FPS Benchmark but a custom benchmark pass on the map 'Vertigo' against some bots. Full disclaimer: I don't play CSGO, but those who do tell me the FPS Benchmark isn't indicative of actual gameplay performance, so hopefully this custom pass is more useful.

Testing at 1080p we find a strong CPU bottleneck with both the 3700X and 10600K as the RTX 2080 Ti and 2060 Super results are virtually identical. What's interesting here is that the 3700X is faster than the 10600K, delivering 8% better average performance and 22% stronger 1% lows, using either GPU.
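The reasoning used here, that near-identical results on a much faster and a much slower GPU point to a CPU bottleneck, can be expressed as a rough heuristic. This sketch assumes the ~50% gap between the RTX 2080 Ti and RTX 2060 Super mentioned for Battlefield V as the expected GPU-bound scaling; the 25% threshold and the function names are hypothetical choices, not a standard.

```python
def gpu_scaling_pct(fps_fast_gpu: float, fps_slow_gpu: float) -> float:
    """Percent gain from swapping the slower GPU for the faster one."""
    return (fps_fast_gpu / fps_slow_gpu - 1.0) * 100.0


def likely_limiter(
    fps_fast_gpu: float,
    fps_slow_gpu: float,
    gpu_gap_pct: float = 50.0,      # assumed GPU-bound gap between the two cards
    cpu_bound_share: float = 0.25,  # hypothetical threshold, not a standard
) -> str:
    """Rough guess at the limiting component from results on two GPUs."""
    # Fraction of the expected GPU-bound gain that actually showed up.
    share = gpu_scaling_pct(fps_fast_gpu, fps_slow_gpu) / gpu_gap_pct
    if share <= cpu_bound_share:
        return "CPU"    # faster GPU barely helped: the CPU is the limit
    if share >= 1.0 - cpu_bound_share:
        return "GPU"    # most of the GPU gap carried through
    return "mixed"
```

Results like the CSGO numbers, where the two GPUs land within a few percent of each other, fall squarely into the "CPU" bucket under these assumptions.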

The 3700X was also able to keep the frame rate above 200 fps at all times, whereas the 10600K dropped down to 184 fps. We're not sure how much difference that really makes, but we often see CSGO gamers claiming you need at least 300 fps in this title for competitive play. Again, I'm not a CSGO player, so I have no idea how true that is. For casual players, though, we're sure 180+ fps is more than enough.

At 1440p we're looking at similar margins and here the 3700X was up to 14% faster.

This is a slightly surprising result, though going into this we knew CSGO was a title where 3rd-gen Ryzen was extremely capable, but it's nice to validate what we saw previously using the 'very high' quality settings.

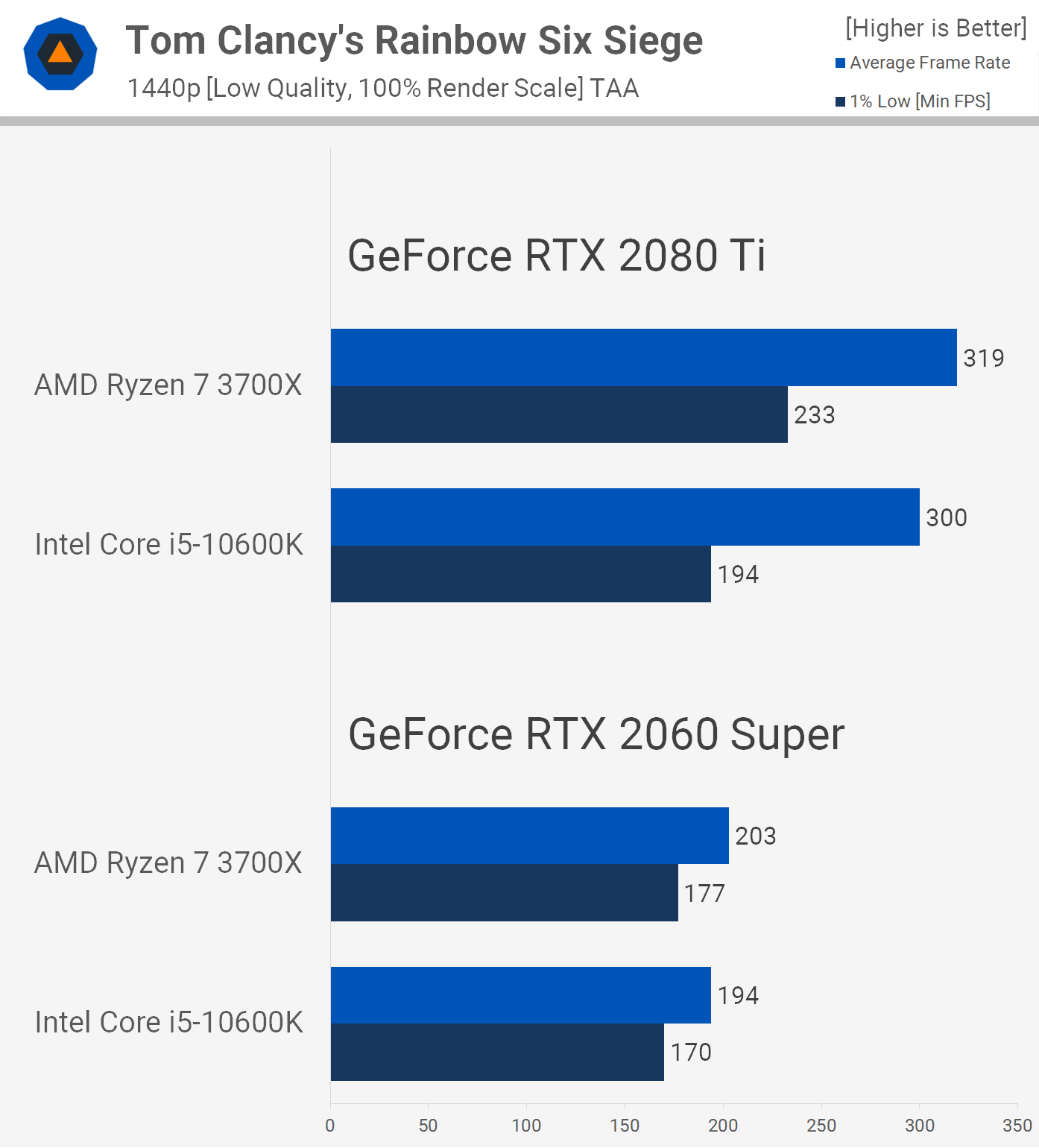

Rainbow Six Siege was featured in our 10600K review, and with the ultra quality settings the 10600K and 3700X were very evenly matched: the Ryzen CPU was slightly slower when comparing the average frame rate but 5% faster when comparing the 1% low data.

Here we're seeing with the low quality settings that the performance is virtually identical with the 2080 Ti, the 3700X was just 3% faster when comparing the average frame rate, but that's also within the margin of error.
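Whether a 3% gap is "within the margin of error" depends on how much results move between repeated passes. Purely as an illustrative rule of thumb, the sketch below only treats a gap as meaningful when it exceeds twice the larger run-to-run standard deviation; the number of runs, the k=2 factor and the function name are all assumptions.

```python
from statistics import mean, stdev


def exceeds_noise(runs_a: list[float], runs_b: list[float], k: float = 2.0) -> bool:
    """True if the gap between mean results is larger than k times the
    larger run-to-run standard deviation (each list needs 2+ runs)."""
    gap = abs(mean(runs_a) - mean(runs_b))
    noise = max(stdev(runs_a), stdev(runs_b))
    return gap > k * noise
```

With hypothetical passes of 400, 402 and 398 fps against 403, 401 and 405 fps, the 3 fps gap is smaller than twice the 2 fps spread, so this rule would call it noise.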

We see a 7% performance advantage for the 3700X when using the slower 2060 Super, not a huge margin but it does indicate that the Ryzen CPU is a little faster in this title. It's worth noting that with these competitive settings either CPU enabled over 250 fps at all times.

Increasing the resolution can increase CPU load and here we see the 10600K drop off when using the RTX 2080 Ti, trailing the 3700X by a 17% margin when comparing the 1% low data, though the average frame rate is more evenly matched. Then when using the 2060 Super we find results that are largely GPU bound.

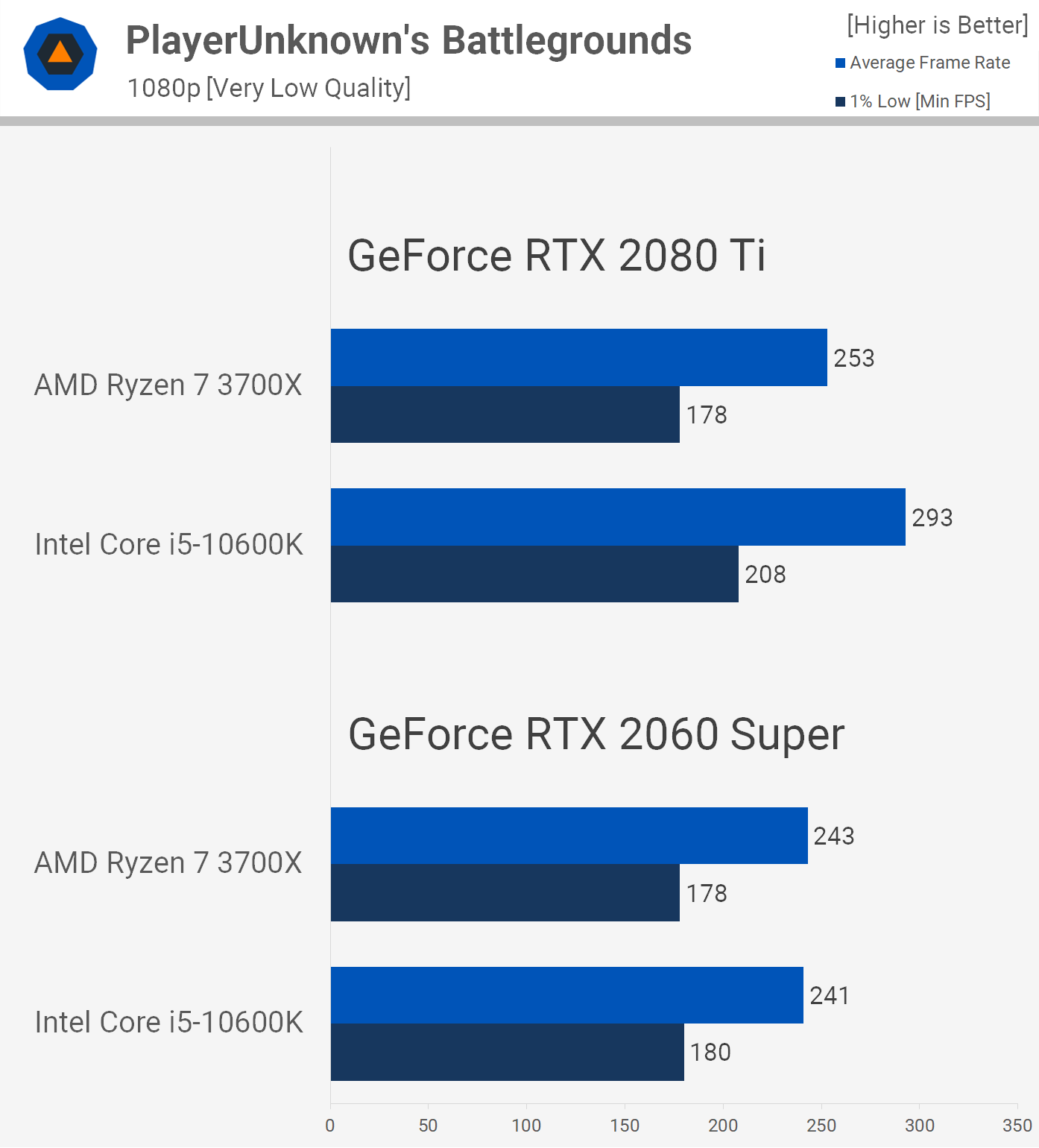

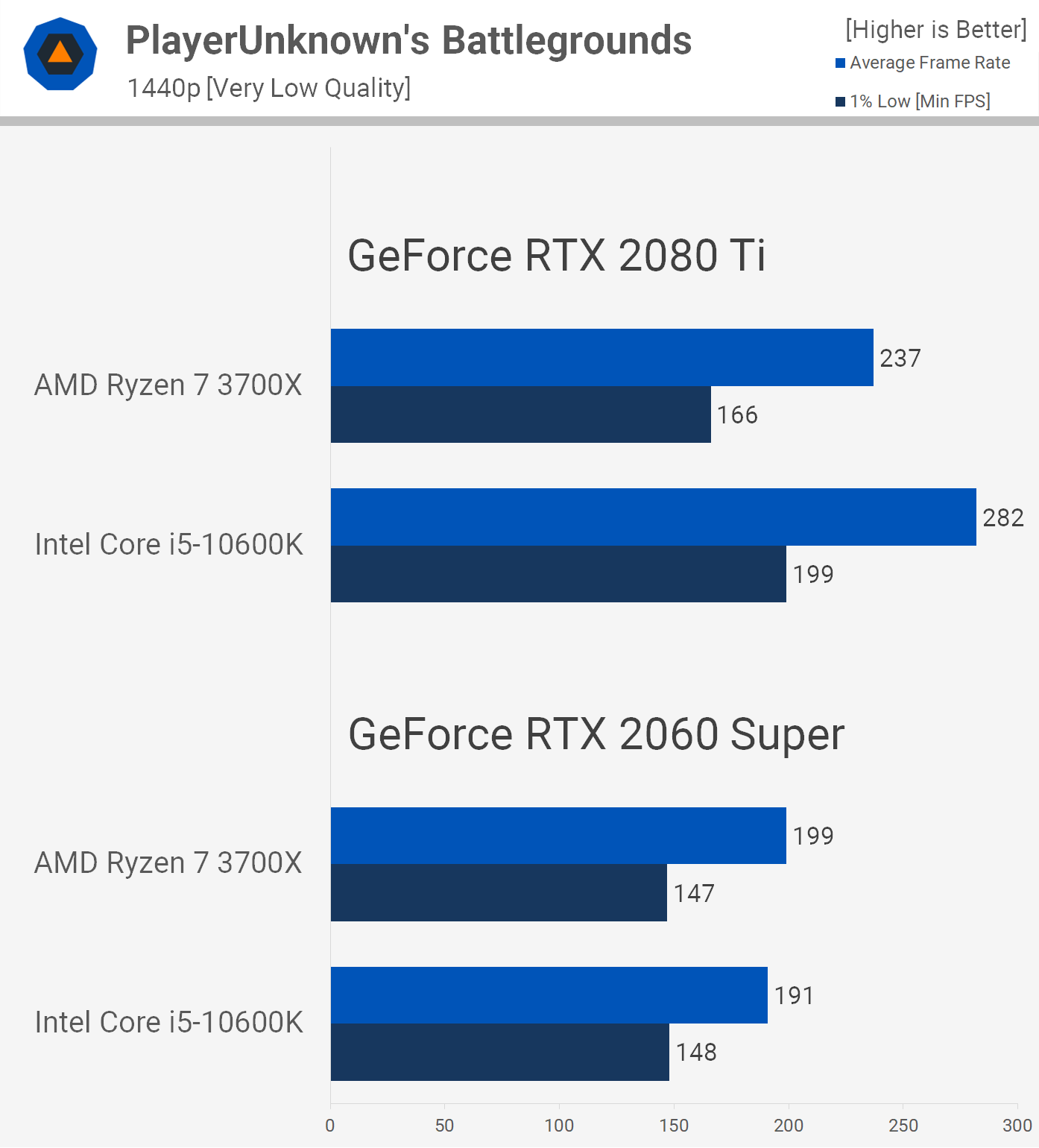

PUBG is a title we know to play better with Intel CPUs when using the high quality settings, so unsurprisingly Ryzen loses here with the very low quality preset enabled. The 10600K was up to 17% faster when using the RTX 2080 Ti at 1080p, keeping the frame rate above 200 fps at all times.

Dropping down to the RTX 2060 Super sees the results become GPU limited and now there's no difference between the processors.

We're looking at basically the same margins at 1440p using either GPU: the 10600K was up to 20% faster with the RTX 2080 Ti and just 4% faster with the 2060 Super.

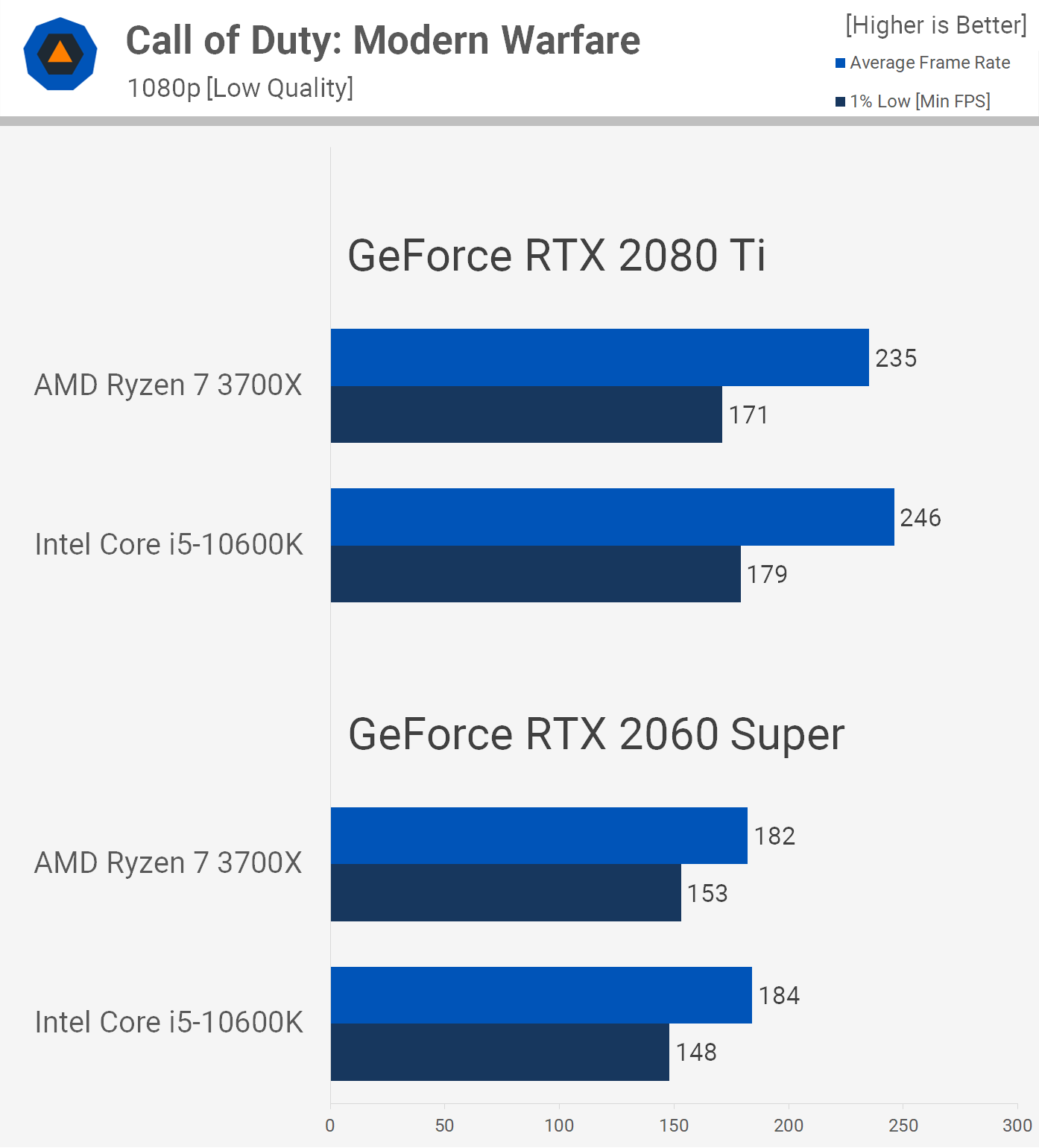

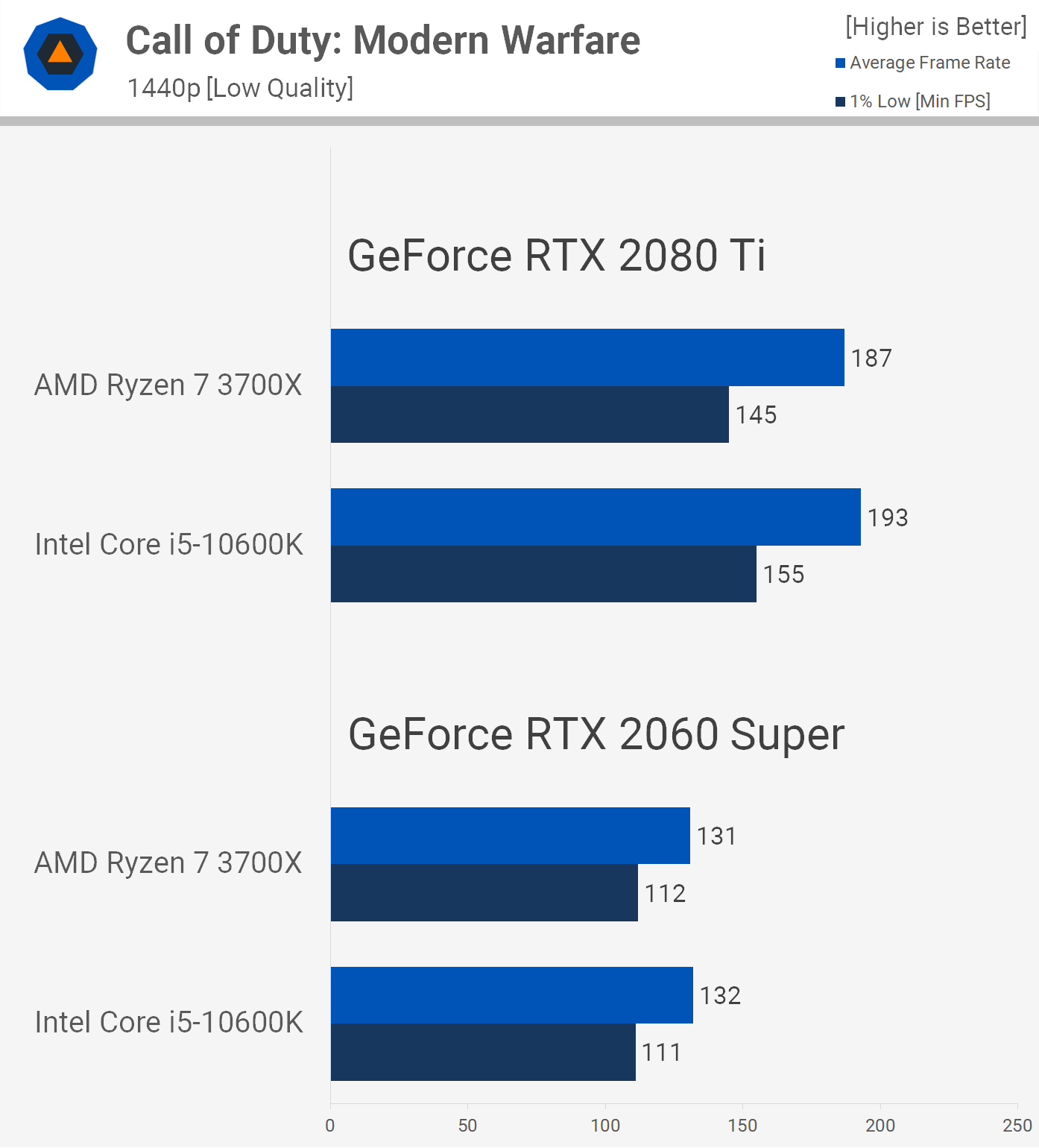

Frame rates in Call of Duty: Modern Warfare using the low quality settings are very competitive, and here the 10600K was at most 5% faster.

We see much the same at 1440p. The 10600K was up to 7% faster when comparing the 1% low data with the RTX 2080 Ti, but overall the experience was much the same.

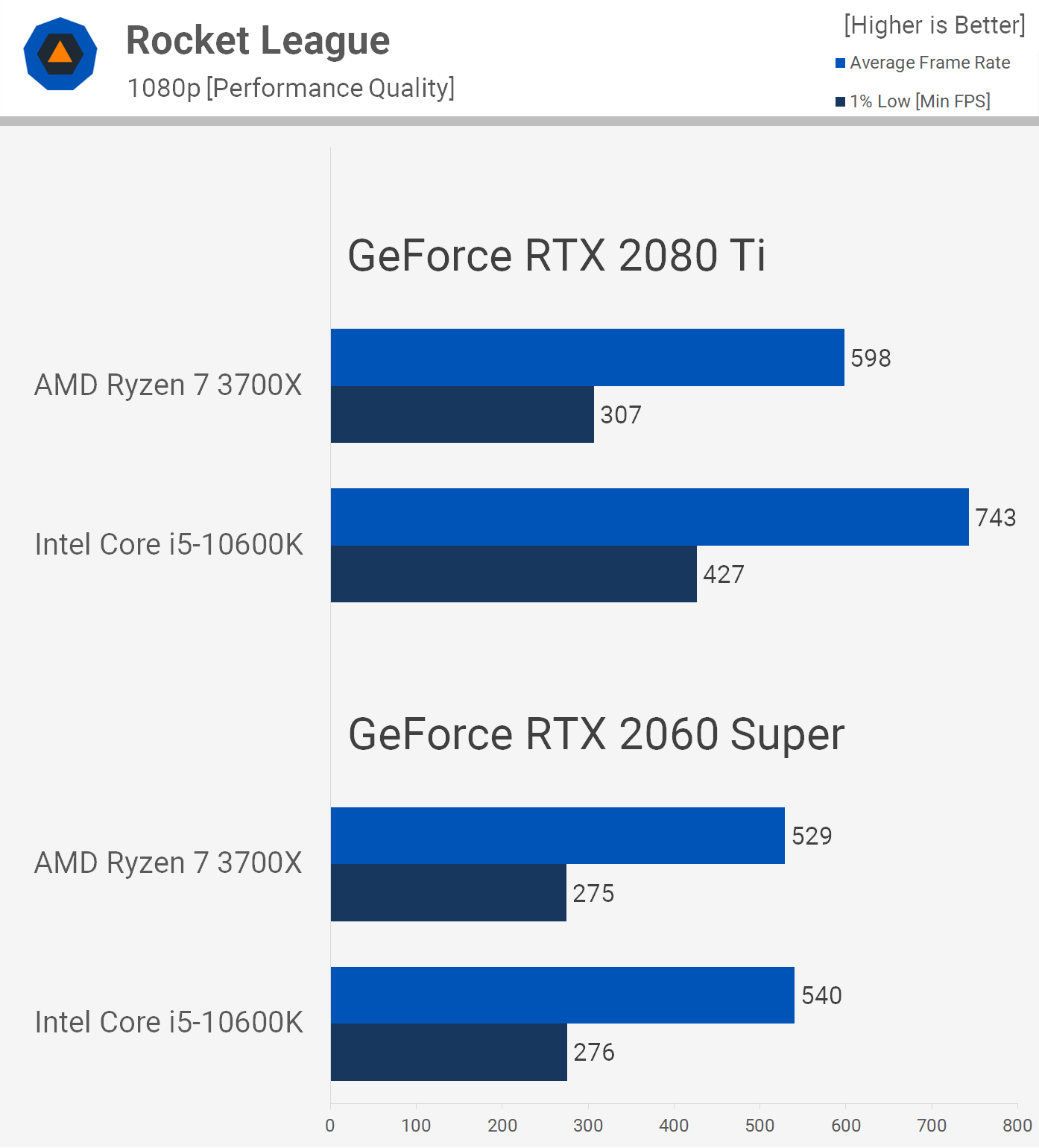

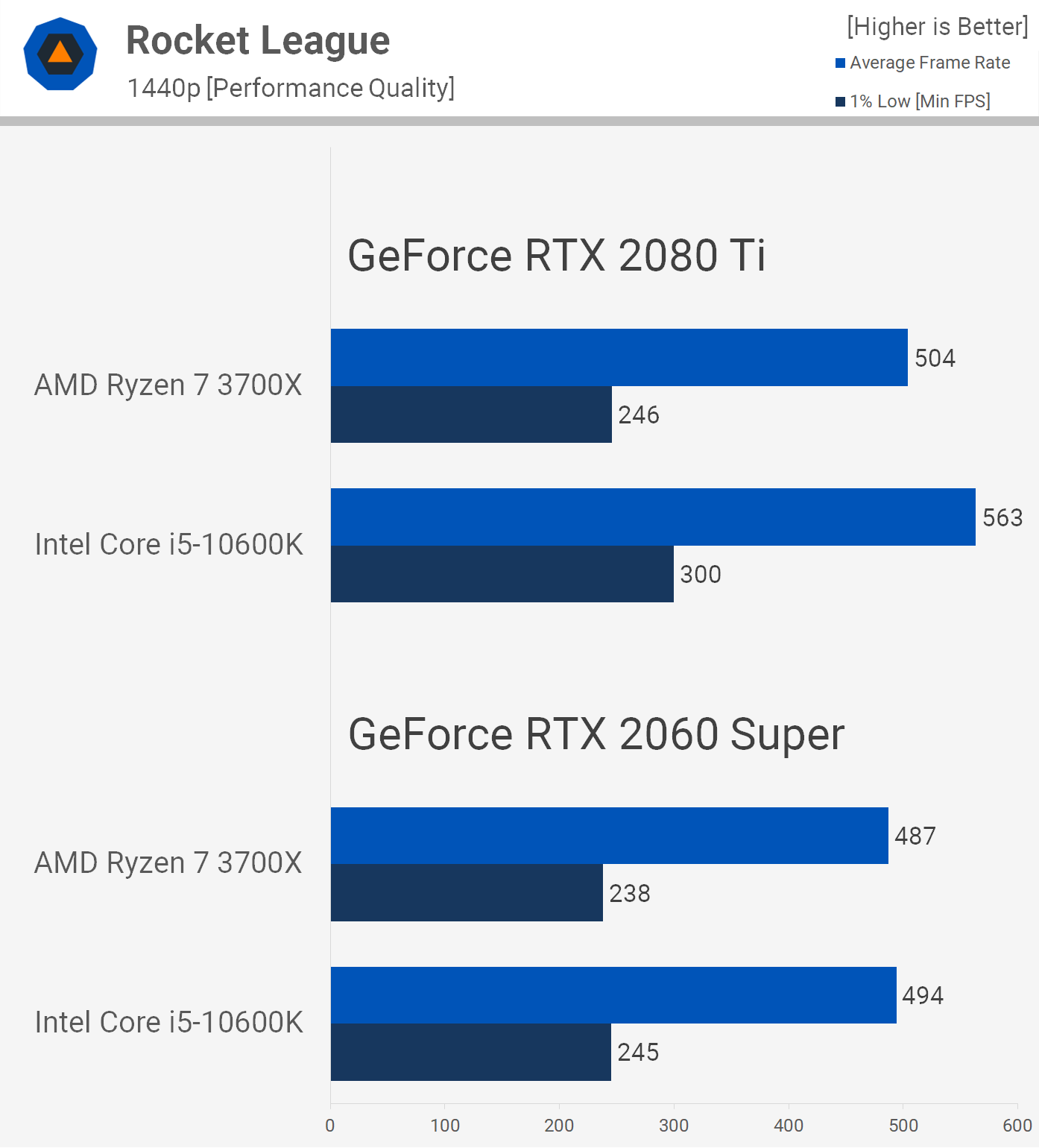

Rocket League has a 250 fps cap but you can remove that by editing a configuration file and we've done exactly that for testing. The 10600K destroys the 3700X in Rocket League with the limits removed, boosting 1% low performance with the RTX 2080 Ti by almost 40% and the average frame rate by 24%.

When using a more realistic GPU for this title the margins are neutralized and now the 3700X and 10600K deliver the same performance with the RTX 2060 Super.

The margins seen at 1080p with the RTX 2080 Ti narrow significantly at 1440p, where the Intel processor is up to 22% faster. The 10600K is clearly the faster CPU in Rocket League with the limits removed, but we do question how useful that extra performance is, especially given the game has a 250 fps cap by default.

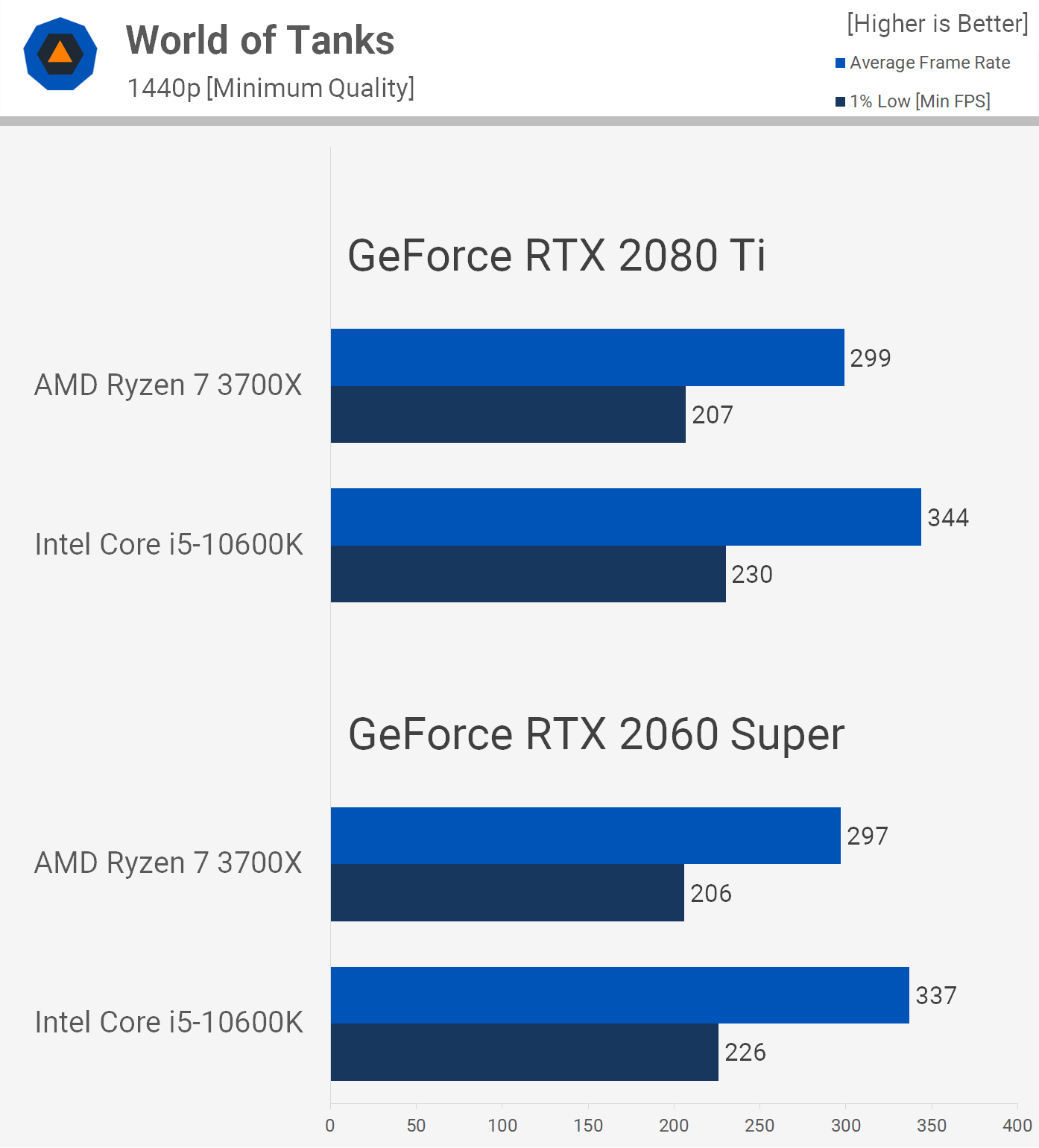

Next up we have World of Tanks using the HD client but with the minimum quality settings enabled. Here we're looking at a strong CPU bottleneck, as both the RTX 2080 Ti and 2060 Super deliver the same level of performance. Both CPUs also stay above 200 fps at all times, with the 10600K up to 13% faster. This is another game where I'm not sure going over 200 fps is beneficial to anyone, so the margin between these AMD and Intel processors could very well be irrelevant.

Demonstrating just how CPU bound we were in the previous set are the 1440p results which show virtually the same numbers with both CPUs enabling over 200 fps at all times.

There's not much more to say on this one. It's our understanding that World of Tanks is a relatively slow-paced tank shooter, so going well over 200 fps may not be entirely beneficial.

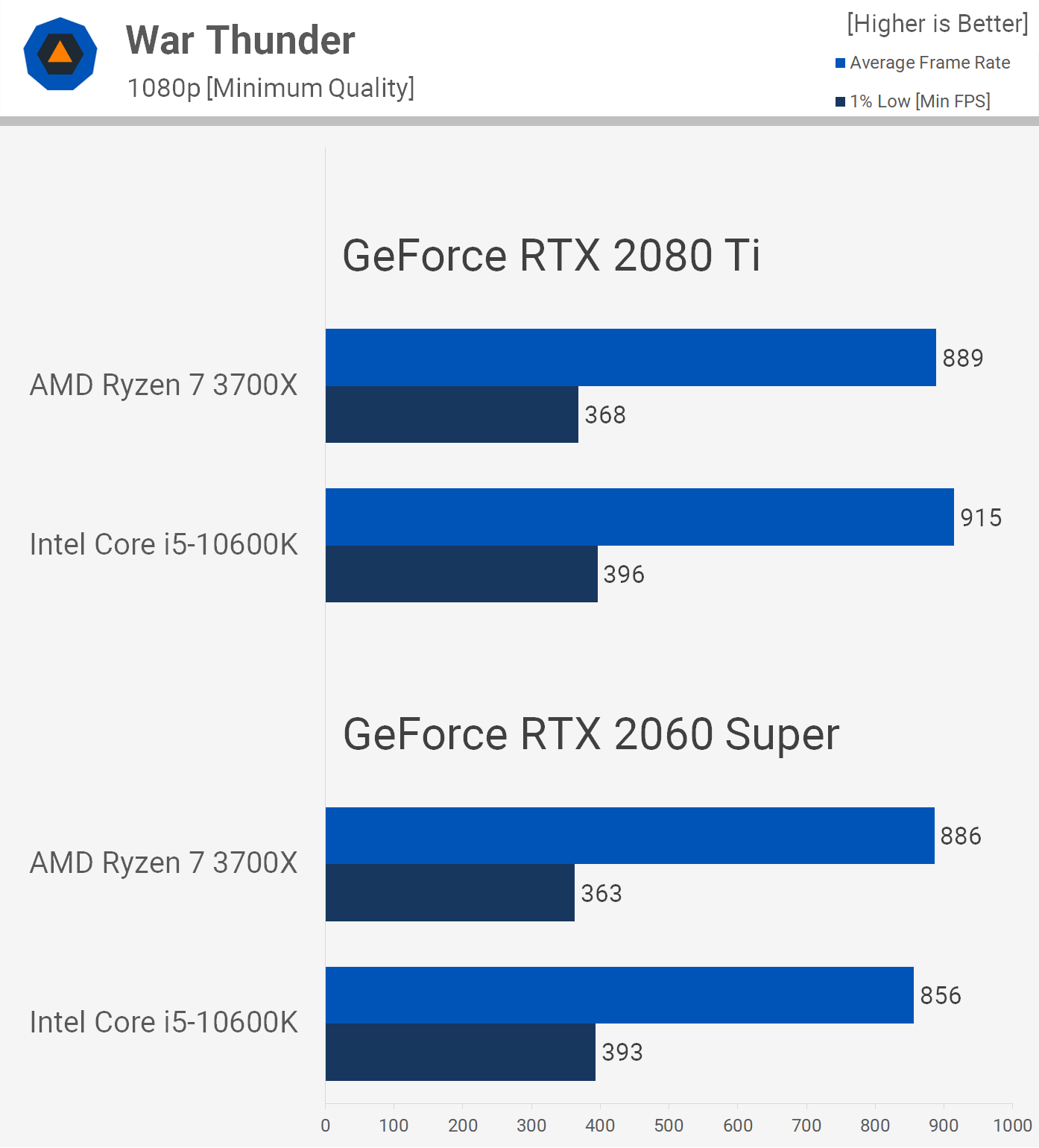

The last game we tested is War Thunder which is showing some pretty wild frame rates using either GPU or CPU. In both cases we are CPU bound, and looking at well over 350 fps at all times.

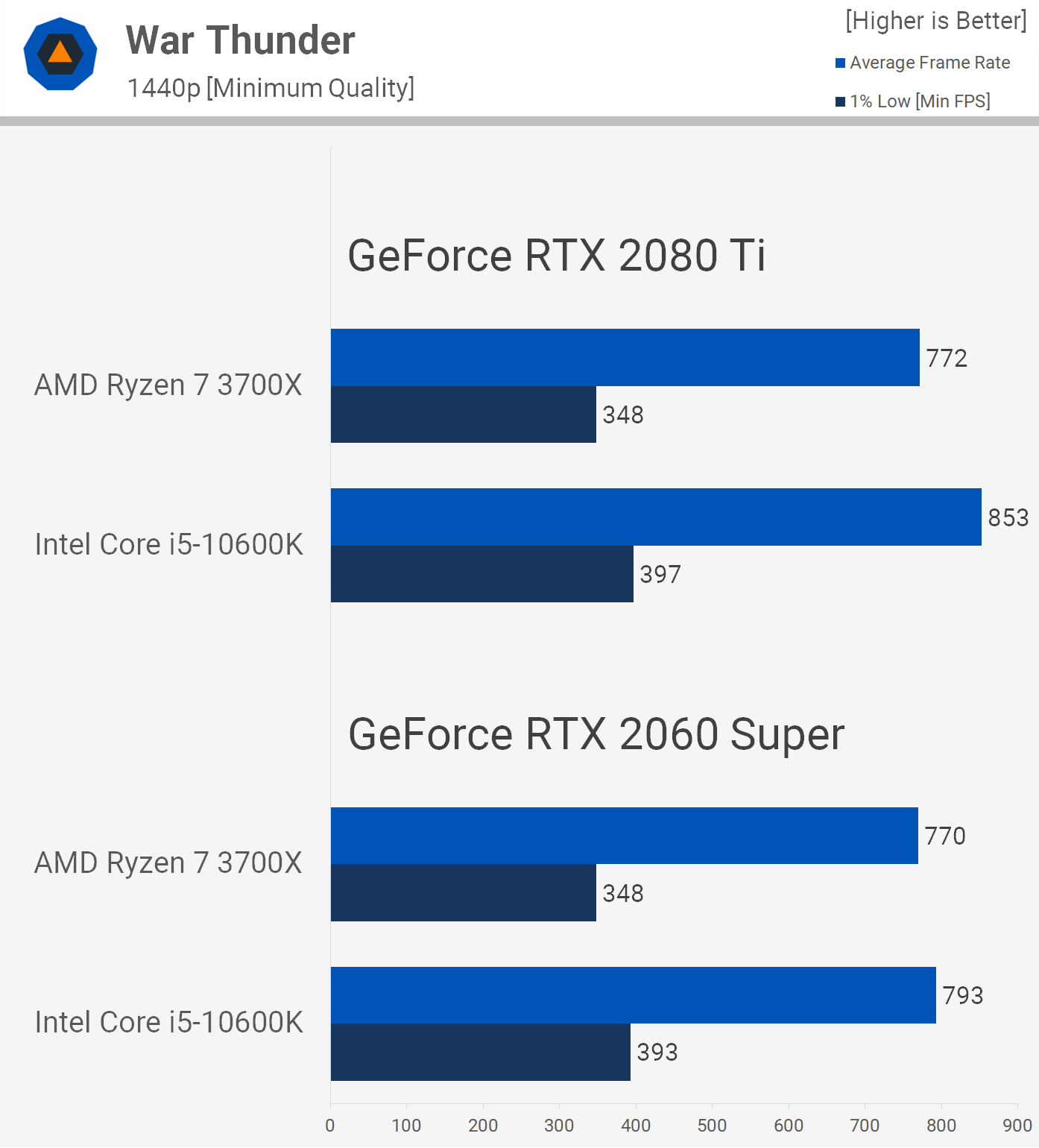

Nothing changes at 1440p. Here the 10600K is up to 14% faster, but even the 3700X never dropped below 348 fps, so the margins are largely irrelevant at this point.

This last graph looks at the performance of both CPUs as an average across the 9 games tested. With the RTX 2080 Ti, the 10600K was 10% faster than the 3700X when comparing the 1% low data, but just 2% faster with the RTX 2060 Super. We think it's fair to say that for most of you the gaming performance difference between these two CPUs is going to be close to zero.
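How per-game results are combined into one average matters when frame rates range from under 200 fps to over 350 fps. Since the averaging method isn't described above, the sketch below contrasts two common approaches purely as an illustration: a geometric mean of per-game ratios, which weights every game equally, and a ratio of summed frame rates, which lets high-fps titles dominate. Function names and numbers are hypothetical.

```python
from math import prod


def geomean_lead_pct(fps_a: list[float], fps_b: list[float]) -> float:
    """A's overall lead over B as the geometric mean of per-game ratios."""
    if len(fps_a) != len(fps_b) or not fps_a:
        raise ValueError("need matching, non-empty per-game results")
    ratios = [a / b for a, b in zip(fps_a, fps_b)]
    return (prod(ratios) ** (1.0 / len(ratios)) - 1.0) * 100.0


def summed_fps_lead_pct(fps_a: list[float], fps_b: list[float]) -> float:
    """A's overall lead over B from total frame rates; high-fps games dominate."""
    if len(fps_a) != len(fps_b) or not fps_a:
        raise ValueError("need matching, non-empty per-game results")
    return (sum(fps_a) / sum(fps_b) - 1.0) * 100.0


# Hypothetical two-game example, not data from this test.
cpu_a = [400.0, 100.0]
cpu_b = [300.0, 110.0]
print(round(geomean_lead_pct(cpu_a, cpu_b), 1))     # 10.1
print(round(summed_fps_lead_pct(cpu_a, cpu_b), 1))  # 22.0
```

In this made-up case CPU A wins one game by 33% and loses the other by about 9%, yet the summed approach reports a 22% lead, largely because of the high-fps title.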

Closing Notes

There were a few surprising results for sure, but overall we think this comparison went as expected. In our Core i5-10600K review we found that the Intel processor was on average 6% faster than the Ryzen 7 3700X. That was using high to ultra quality presets. In this test, we found that margin has extended to 7%, or 10% if we focus on the 1% low data, when running low quality settings in a number of competitive titles.

This doesn't change anything we said in our review. For those who missed it, we basically said that if you want maximum performance in most games, then get the 10600K, but for the most part you won't be able to tell the difference. That being the case, we recommended the 3700X for its stronger productivity performance, which you will often benefit from, and we also expect its two extra cores to come in handy down the road.

Looking back over the results, we saw comparable performance in Battlefield V and Call of Duty: Modern Warfare, while the 3700X was faster in Counter-Strike, where we were seeing well over 300 fps on average, and slightly faster in Rainbow Six Siege, where we were seeing over 400 fps on average.

Meanwhile, the 10600K was faster in Fortnite, another title where both CPUs allowed for over 300 fps on average. It was also faster in Rocket League, where both maintained 300+ fps at all times, the same with World of Tanks, and in PUBG, where both averaged well over 200 fps. So how much does that extra performance really matter?

For example, when going from 300 to 330 fps, which would be a 10% increase, you're looking at a 0.3ms improvement in latency. Once you exceed the refresh rate of almost all modern monitors the only benefit becomes input latency and we'd say 0.3ms is imperceptible for virtually everyone.
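The latency figure is simple frame-time arithmetic: frame time in milliseconds is 1000 divided by the frame rate. The minimal sketch below reproduces the 300 to 330 fps example; the function names are hypothetical, and frame time is only one part of total end-to-end input latency.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on each frame, in milliseconds."""
    return 1000.0 / fps


def frame_time_saving_ms(fps_before: float, fps_after: float) -> float:
    """Reduction in per-frame time when the frame rate rises."""
    return frame_time_ms(fps_before) - frame_time_ms(fps_after)


print(round(frame_time_saving_ms(300, 330), 2))  # 0.3
print(round(frame_time_saving_ms(60, 66), 2))    # 1.52
```

The same 10% uplift saves about 1.52 ms at 60 fps but only about 0.3 ms at 300 fps, which is why these margins matter far less at the frame rates seen in this test.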

Bottom line, the Core i5-10600K is a pretty good gaming CPU. You'll potentially pay a little extra for the cooler and motherboard, but in terms of value it fares pretty well in a gaming-only scenario. But if the 10600K is a good gaming CPU, so is the 3700X, and there's literally zero chance the 10600K will pull further ahead in three years' time; worst case for AMD, the margins remain the same. So feel free to pick your favorite platform, and game on!