It's been a long, long time since we dedicated a full review to a Celeron processor, but we've been keen to explore the most entry-level Alder Lake CPUs after testing the impressive Core i3-12100F. So we're going right to the bottom of the lineup with the Celeron G6900...

At $42 MSRP (currently $75 at retailers), this is a very affordable chip, but is it any good, and who should buy it? These are the questions we hope to address in this review.

Let's quickly go over the specs. The Celeron G6900 is a dual-core processor sporting just two P-cores and no hyper-threading, so right away that's going to raise some red flags, and rightfully so. We're not entirely sure what a dual-core can achieve these days, even a powerful one, but we suspect not a lot.

| | Intel Core i5 12600K | Intel Core i5 12600 | Intel Core i3 12100F | Intel Pentium G7400 Gold | Intel Celeron G6900 |
|---|---|---|---|---|---|
| MSRP | $320 | $223 | $122 | $64 | $42 |
| Release Date | November 2021 | January 2022 | January 2022 | January 2022 | January 2022 |
| Cores / Threads | 6P+4E / 16 | 6P+0E / 12 | 4P+0E / 8 | 2P+0E / 4 | 2P+0E / 2 |
| Base Frequency | 2.8 / 3.7 GHz | 3.3 GHz | 3.3 GHz | 3.7 GHz | 3.4 GHz |
| Max Turbo | 3.6 / 4.9 GHz | 4.8 GHz | 4.3 GHz | N/A | N/A |
| L3 Cache | 20 MB | 18 MB | 12 MB | 6 MB | 4 MB |
| Memory | DDR5-4800 / DDR4-3200 | DDR5-4800 / DDR4-3200 | DDR5-4800 / DDR4-3200 | DDR5-4800 / DDR4-3200 | DDR5-4800 / DDR4-3200 |
| Socket | LGA 1700 | LGA 1700 | LGA 1700 | LGA 1700 | LGA 1700 |

Because there are only two cores, the combined L2 cache capacity is just 2.5 MB, and then we have 4 MB of L3 cache. Compare that with the Core i3-12100F, which packs 12 MB of L3, and the Core i5-12400 with 18 MB, and you get a sense of just how measly the Celeron is.

It's also worth noting that the two cores operate at 3.4 GHz with no turbo boost.

The G6900 does include integrated graphics, which makes sense for this type of product that's largely destined for low budget office use. Of course, we wouldn't expect much out of the UHD 710 as it's only designed for general use, which is true for the G6900 in general.

This is perhaps the most intriguing thing about this CPU: we know it's only intended for very basic office-type use, but what are the limitations?

Can it handle any modern gaming? Can it even run a program like Adobe Premiere? Of course, we're going to find out. Along the way, we're going to do some "for science" type testing by including the Core i9-12900K, but with only two P-cores enabled and all E-cores disabled, with both HT enabled and disabled.

This will allow us to compare Intel Alder Lake architecture with just two P-cores enabled, but in the case of the 12900K, the cores are clocked 47% higher with 7.5x more L3 cache. So we're basically creating the ultimate dual-core processor and I still wonder, will that be enough processing power for any of today's games?

For testing the Celeron G6900 we're using the MSI B660M Mortar Wi-Fi DDR4 with 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory, the same modules we use for all our DDR4 testing. The Alder Lake K-SKU CPUs have been tested on the MSI Z690 Tomahawk Wi-Fi DDR4 using the same memory, and all boards were updated to the latest BIOS revision. We've also updated all Ryzen data using the MSI X570S Tomahawk Wi-Fi motherboard.

All gaming benchmarks have been updated for the AM4 and LGA 1700 CPUs with Resizable BAR enabled, using the Radeon RX 6900 XT graphics card. The operating system of choice is Windows 11. That covers it, let's now dive into the results...

Application Benchmarks

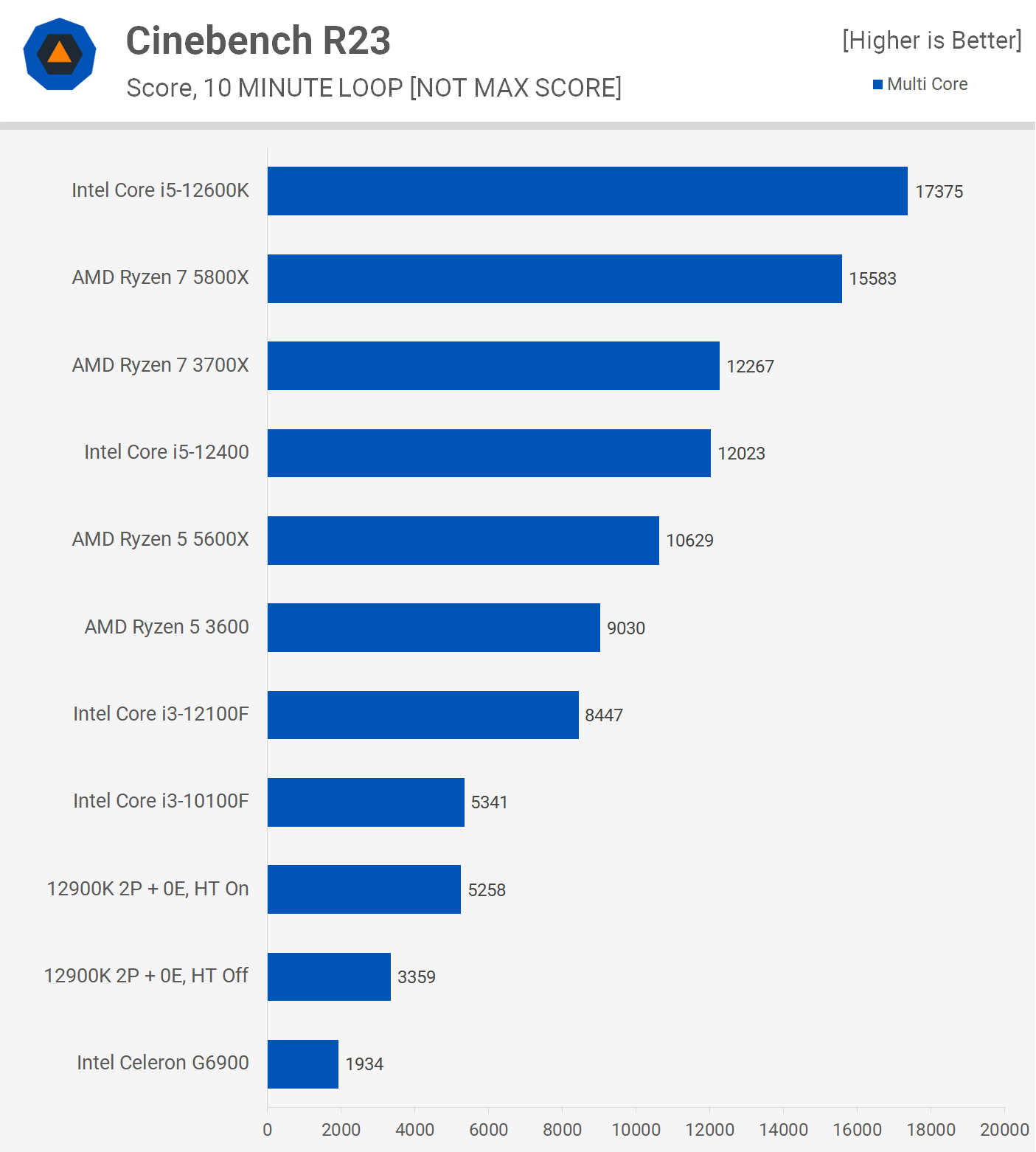

Starting with Cinebench R23 multi-core, we find the G6900 firmly at the bottom of our graph. With just two cores it produced a score of 1934 points, and that meant the Core i3-10100F was 176% faster, a massive difference. Given you can buy the Core i3 processor for $85 right now, the G6900 is very hard to justify for just $10 less, so that's not a great start.

As for the 'for science' testing, we see that the 12900K with just two cores and two threads was 74% faster than the G6900, which is impressive given it's clocked 47% higher. So the extra L3 cache is making up a good portion of that gain.

Then with hyper-threading enabled, the 2 P-core 12900K configuration matched the Core i3-10100F. Sure, the 12900K has a lot more L3 cache, but the fact that Alder Lake with just 2 cores and 4 threads can match a 10th-gen part with 4 cores and 8 threads is mighty impressive.
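To put a rough number on how much of that 74% gain is left over once clock speed is accounted for, here's a minimal sketch. It assumes performance scales linearly with frequency, which is a simplification on our part, and the function names are purely illustrative. Whatever remains can't be pinned on the L3 cache alone, but it gives a sense of scale.

```python
def speedup(percent_faster: float) -> float:
    """Convert an 'N% faster' figure into a multiplicative speedup factor."""
    return 1.0 + percent_faster / 100.0


def residual_gain(total_pct: float, clock_pct: float) -> float:
    """Percent gain left after dividing out an assumed linear clock-speed gain."""
    return (speedup(total_pct) / speedup(clock_pct) - 1.0) * 100.0


# Figures quoted in the review: the 2-core/2-thread 12900K was 74% faster
# than the G6900 while being clocked 47% higher.
total_pct = 74.0
clock_pct = 47.0

# Assumption: perfect linear scaling with clock speed.
extra = residual_gain(total_pct, clock_pct)
print(f"Gain not explained by clock speed: {extra:.1f}%")
```

Under that assumption, roughly 18% of the uplift comes from something other than frequency, with the much larger L3 cache being the most likely candidate.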

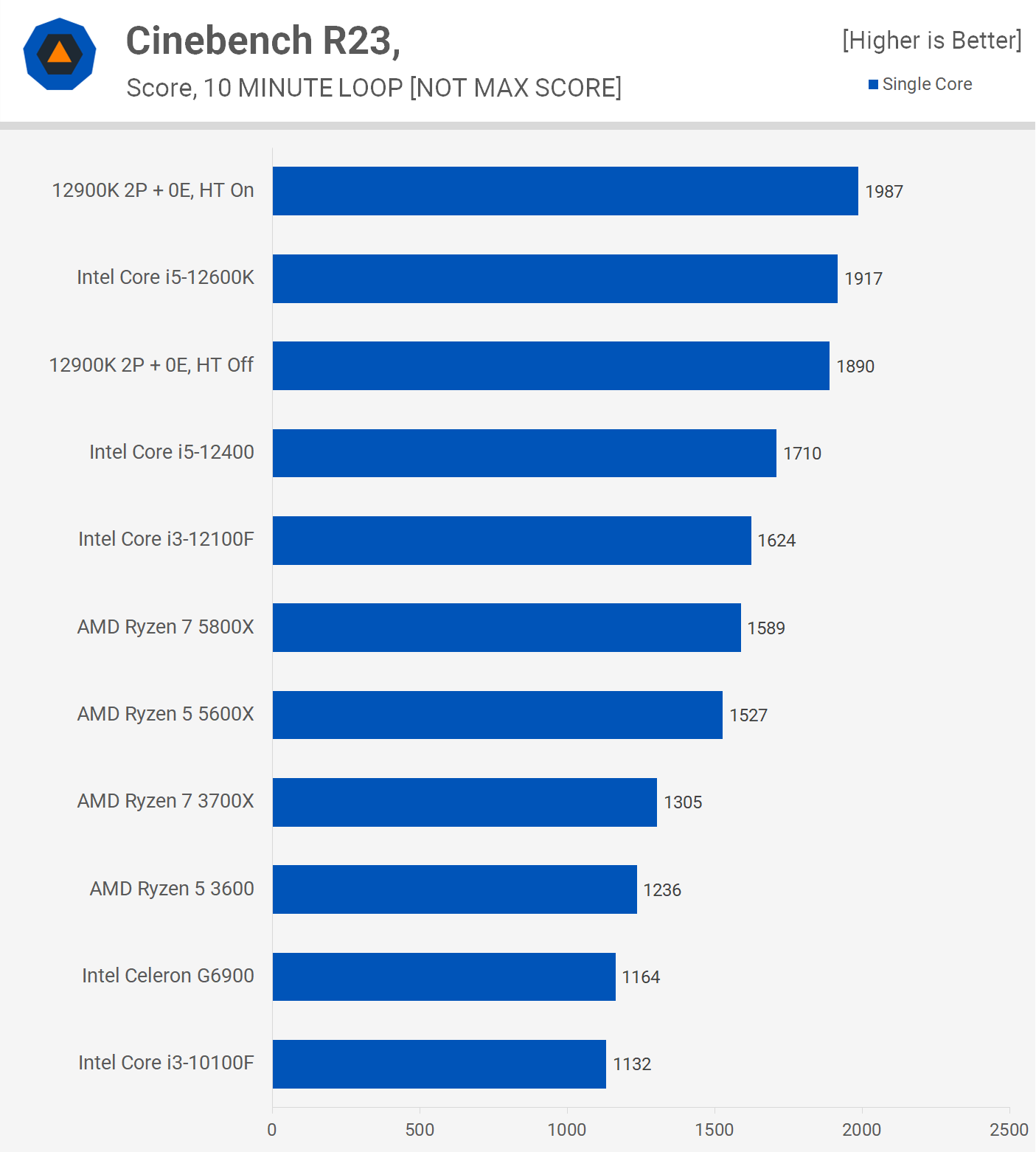

The G6900 fares a lot better on the single core test, basically matching the Core i3-10100F which is impressive given the 10100F is clocked 6% higher with 50% more L3 cache. Then we see the 12900K with the same core configuration as the G6900 is 62% faster thanks to a boost in core clock speed as well as the large L3 cache capacity.

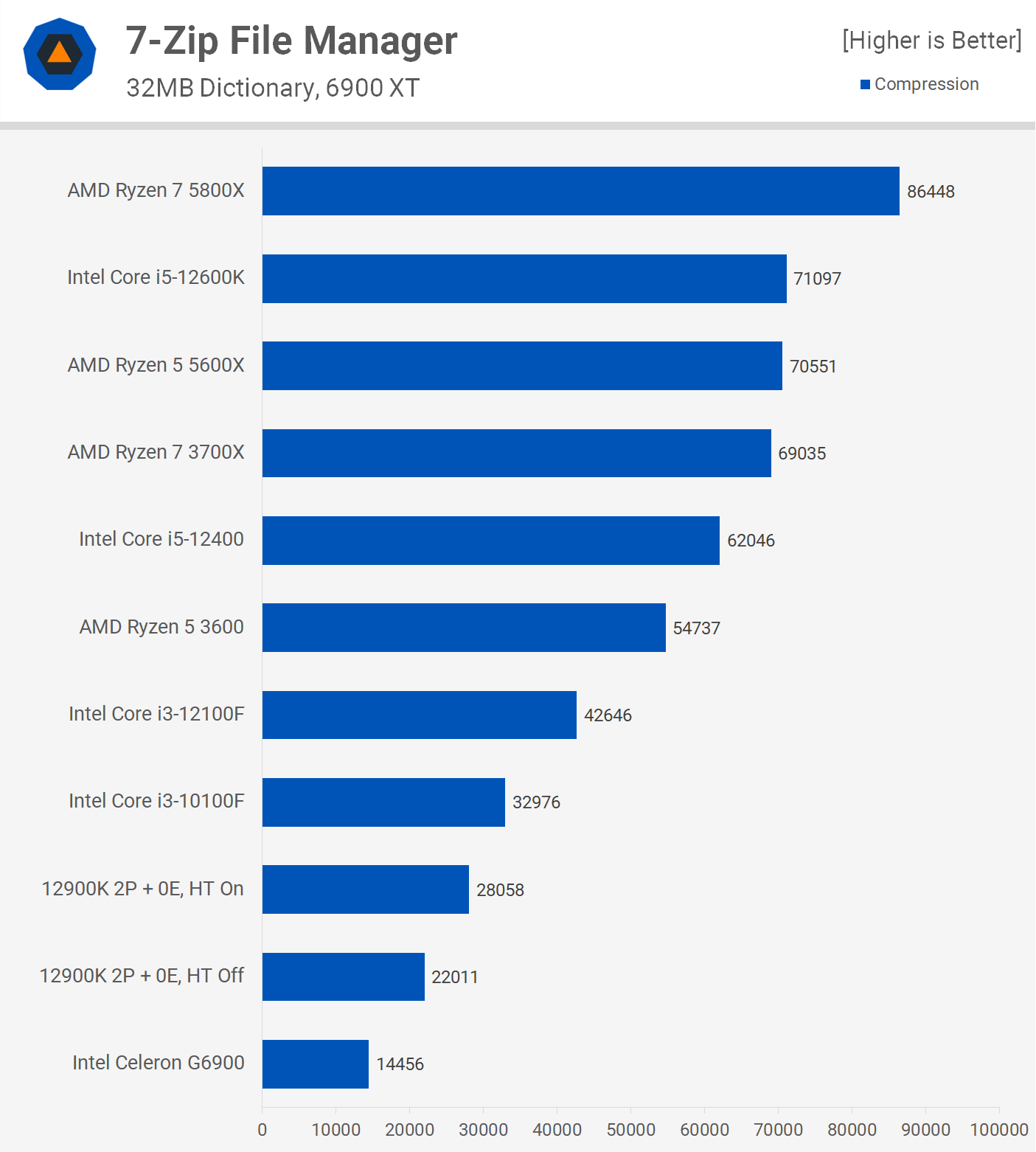

Moving on, in the 7-Zip File Manager compression test the G6900 delivers just under half the performance of the 10100F, which costs a mere $10 extra, so it's hard to make a case for the G6900.

The situation worsens when looking at decompression performance, which leverages simultaneous multi-threading technology well, and the G6900 doesn't support SMT. Here the 10100F is over 3x faster. The 12900K dual-core configuration without SMT was 56% faster than the G6900 and then an additional 48% faster with SMT enabled.

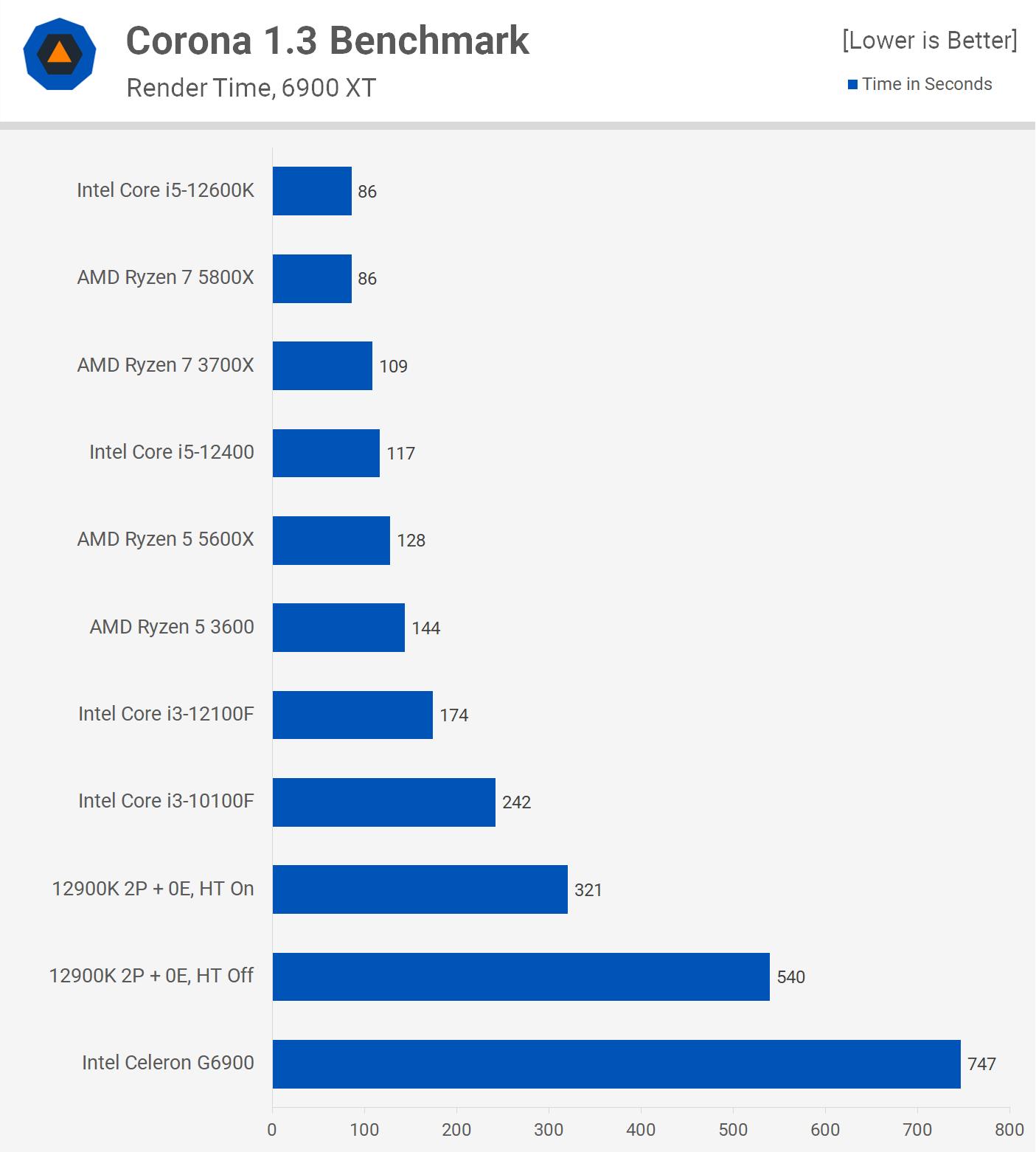

The Celeron G6900 is painfully slow for rendering tasks and frankly you'd never use it for this kind of workload. The 10100F was 3x faster and it's considered a slower processor for this sort of work.

Ideally, the G6900 needs to be clocked higher, and of course, a larger L3 cache wouldn't hurt, as the 12900K running the same core configuration was roughly 40% faster.

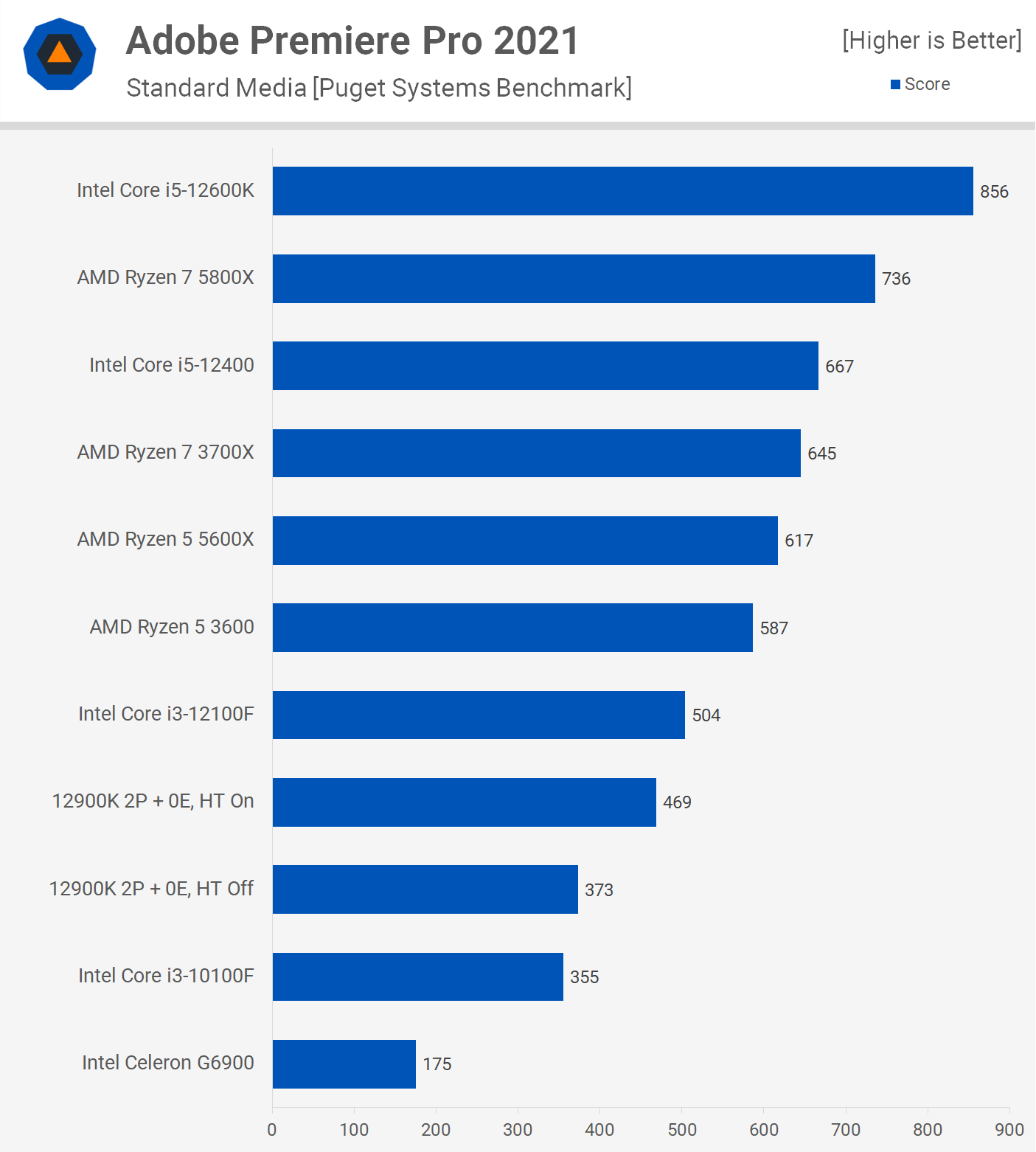

The Celeron G6900 was somewhat usable in Adobe Premiere Pro 2021. You could certainly edit a video with this CPU, but encoding and applying certain effects would be painfully slow. Here you're looking at roughly twice the performance from the Core i3-10100F.

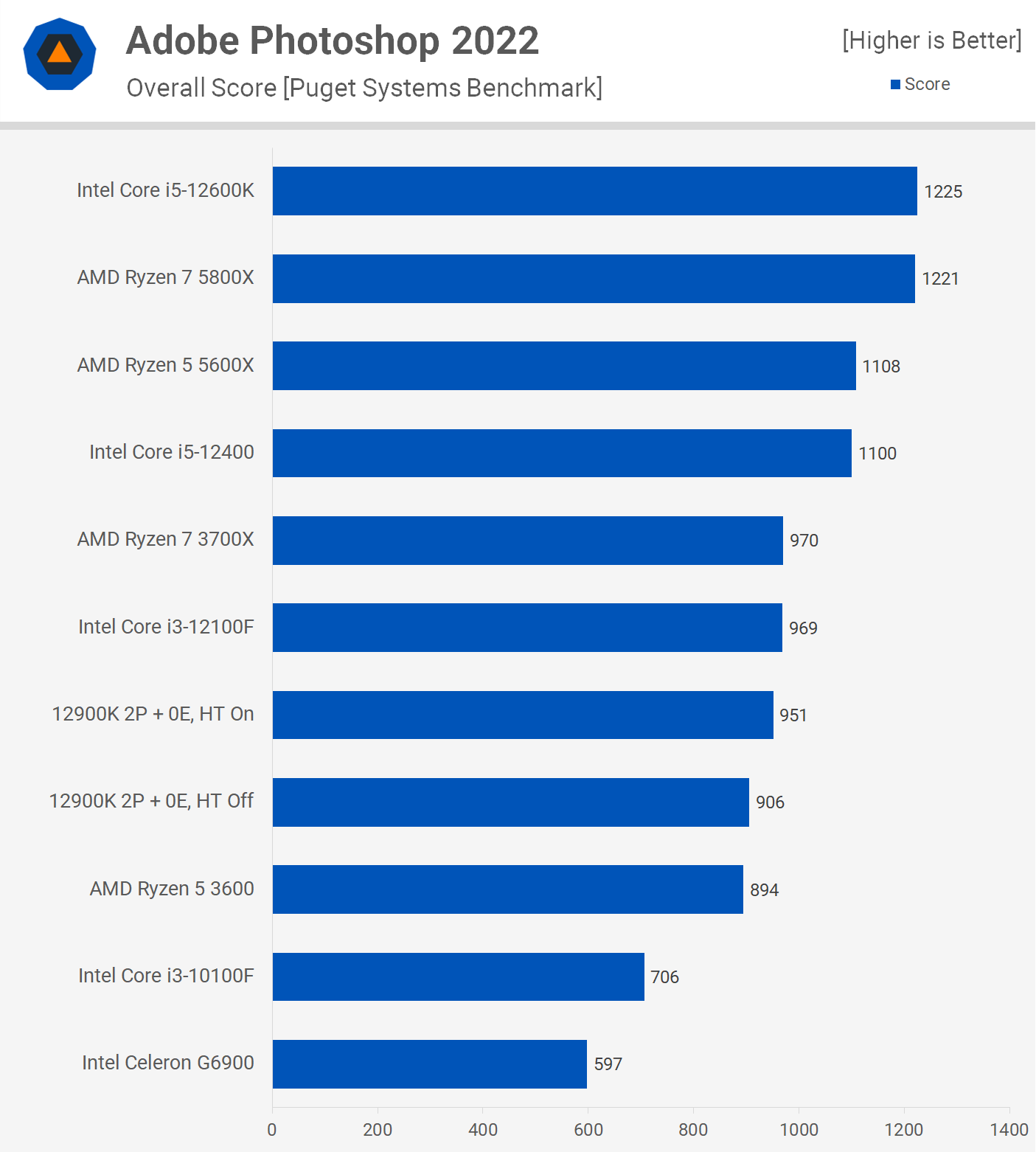

Adobe Photoshop is more of a lightly threaded application, so here the G6900 does fare a bit better, but even so it was well down on the 10100F's score and miles slower than older parts like the Ryzen 5 3600. The dual-core 12900K configuration boosted performance by a massive 52%.

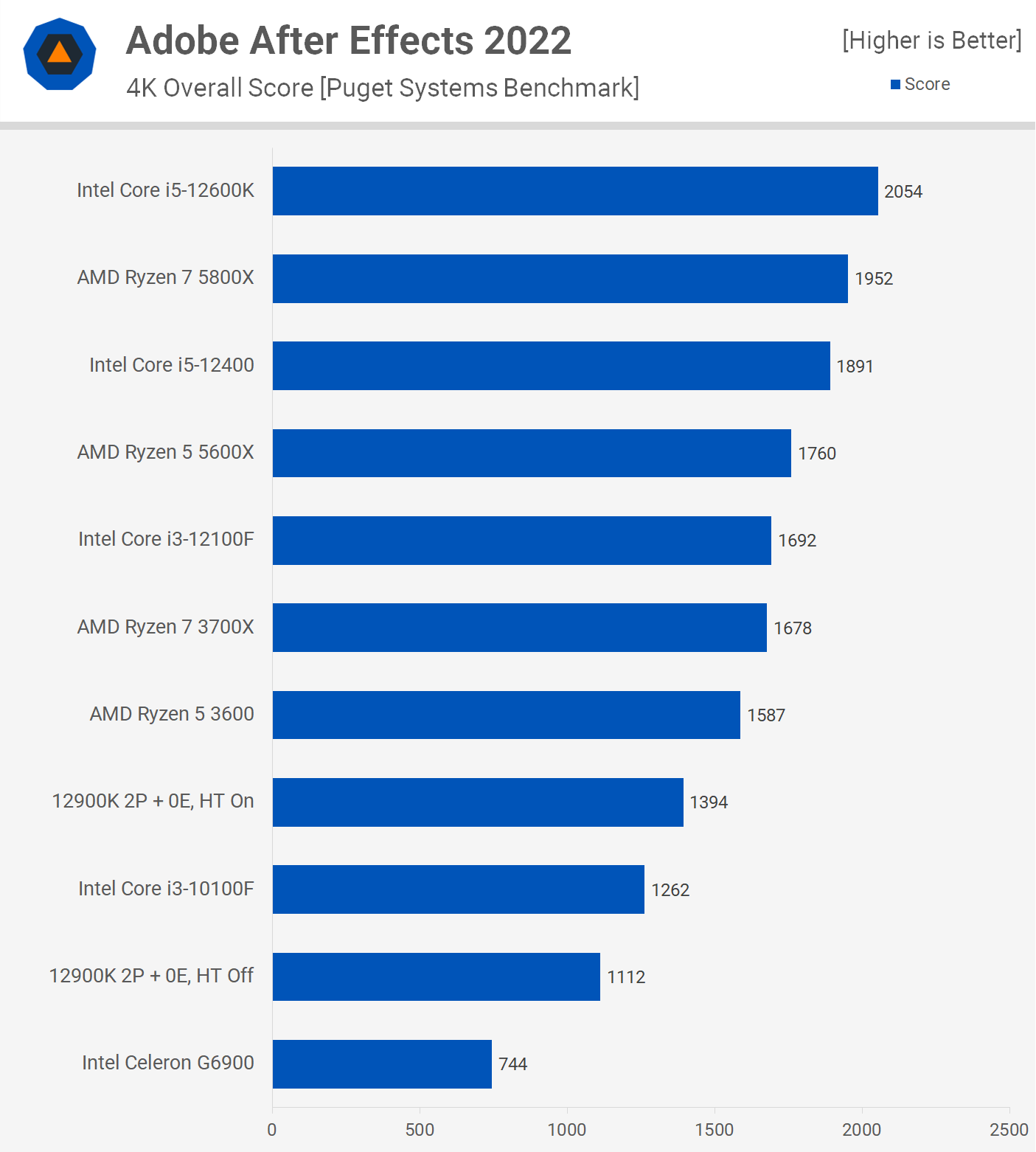

After Effects is a blend of single and multi-threaded loads, so the G6900 doesn't perform as well and the 10100F was 70% faster. We'd say clock speed is the biggest issue here, next to the lack of cores, as the dual-core 12900K configuration was 49% faster and enabling hyper-threading boosted performance by a further 25%.

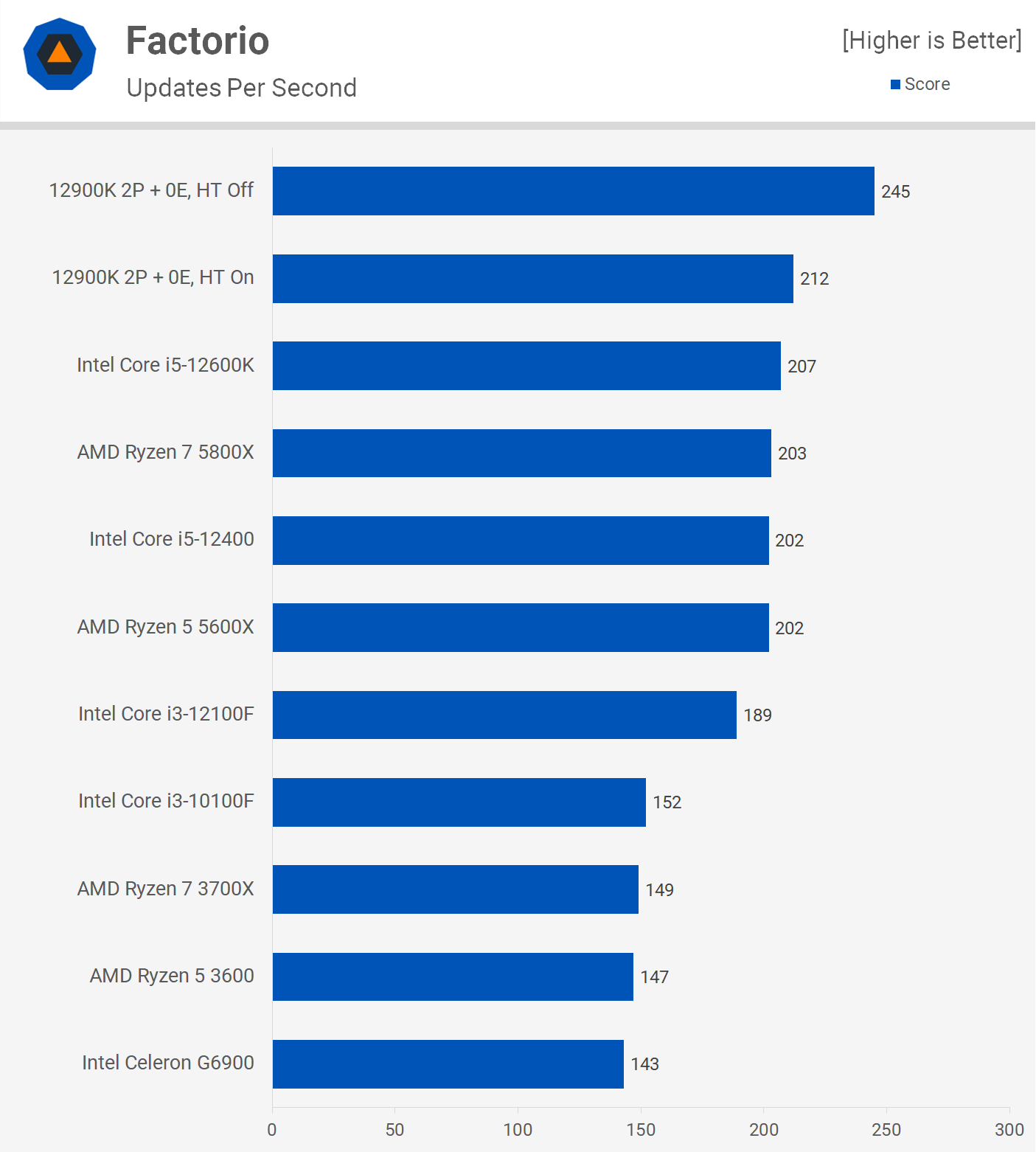

With Factorio in the application benchmarks we're not measuring FPS, but updates per second. This automated benchmark calculates the time it takes to run 1,000 updates. This is a single-thread test which apparently relies heavily on cache performance.
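As a quick illustration of how a timed run converts to updates per second, here's a minimal sketch. The elapsed times are hypothetical placeholders chosen for easy arithmetic, not measurements from our testing, and the function names are our own.

```python
def updates_per_second(updates: int, elapsed_seconds: float) -> float:
    """Convert a timed benchmark run into an updates-per-second (UPS) figure."""
    return updates / elapsed_seconds


def percent_faster(ups_a: float, ups_b: float) -> float:
    """How much faster A is than B, expressed as a percentage."""
    return (ups_a / ups_b - 1.0) * 100.0


# Hypothetical timings for a 1,000-update run on two CPUs (not review data).
slow_cpu_ups = updates_per_second(1000, 5.0)
fast_cpu_ups = updates_per_second(1000, 4.0)

print(f"Slow CPU: {slow_cpu_ups:.0f} UPS, fast CPU: {fast_cpu_ups:.0f} UPS")
print(f"Fast CPU is {percent_faster(fast_cpu_ups, slow_cpu_ups):.0f}% faster")
```

Because the update count is fixed, a shorter run time translates directly into a higher UPS score.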

Since this is a single-thread test, the G6900 does well compared to the Ryzen 5 3600, Ryzen 7 3700X and Core i3-10100F. However, the smaller L3 cache capacity does hurt, as the 12100F was 32% faster and the 12400 41% faster.

The dual-core 12900K configurations do much better thanks to the larger 30 MB L3 cache, and interestingly, disabling hyper-threading boosts performance significantly. We're looking at a 16% performance improvement with HT disabled.

Not sure how many people will be keen to carry out code compilation work with the Celeron G6900 and if you are, we recommend you reconsider because for basically the same money the 10100F is almost three times faster. It was impressive to find that the 12900K with just 2 cores and 4 threads was able to roughly match the 10100F.

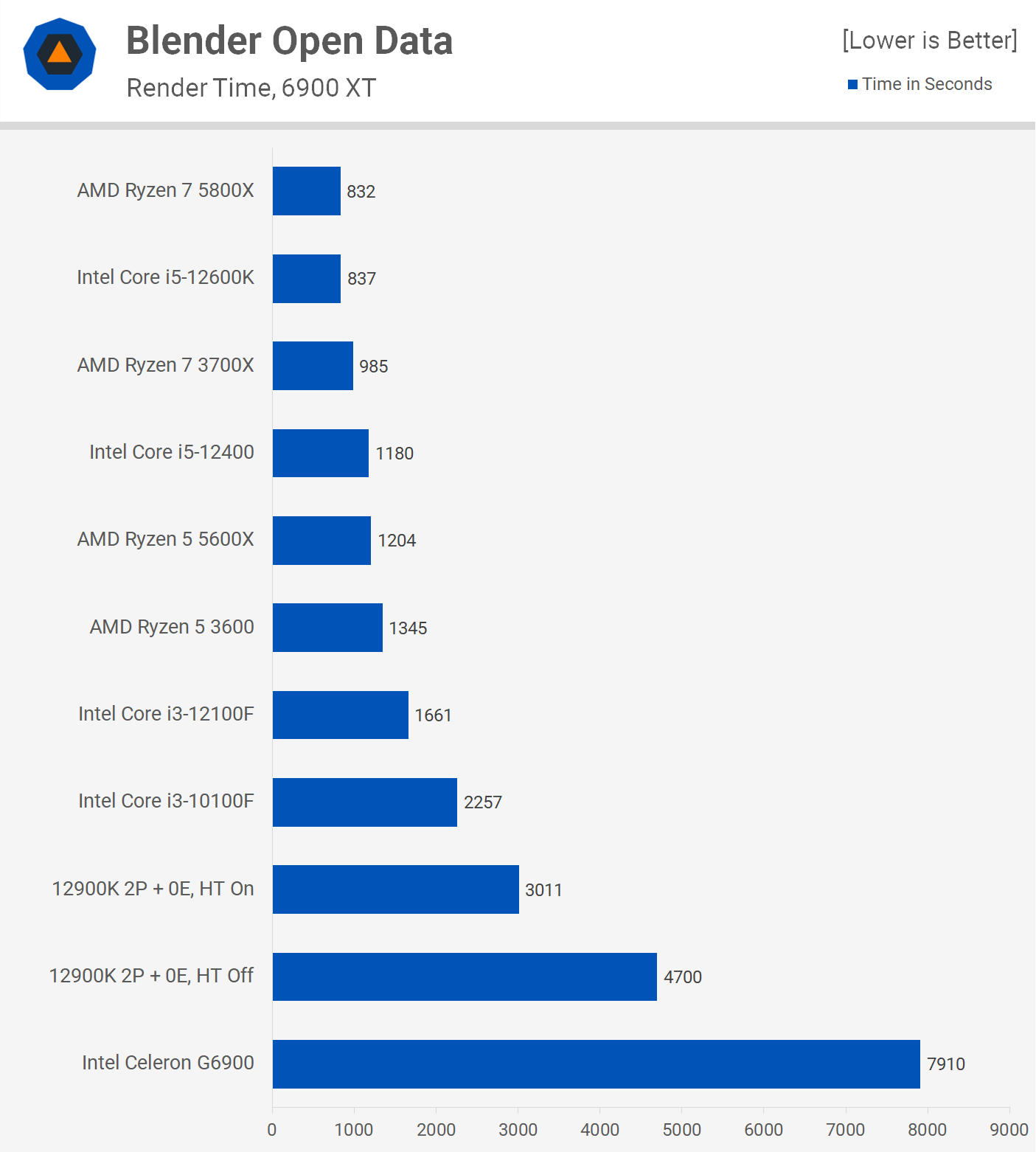

The last application benchmark is Blender and this is a particularly terrible result for the G6900 as the 10100F was 3.5x faster. The dual-core 12900K was almost 70% faster, while hyper-threading boosted performance by a further 56%.

Power Consumption

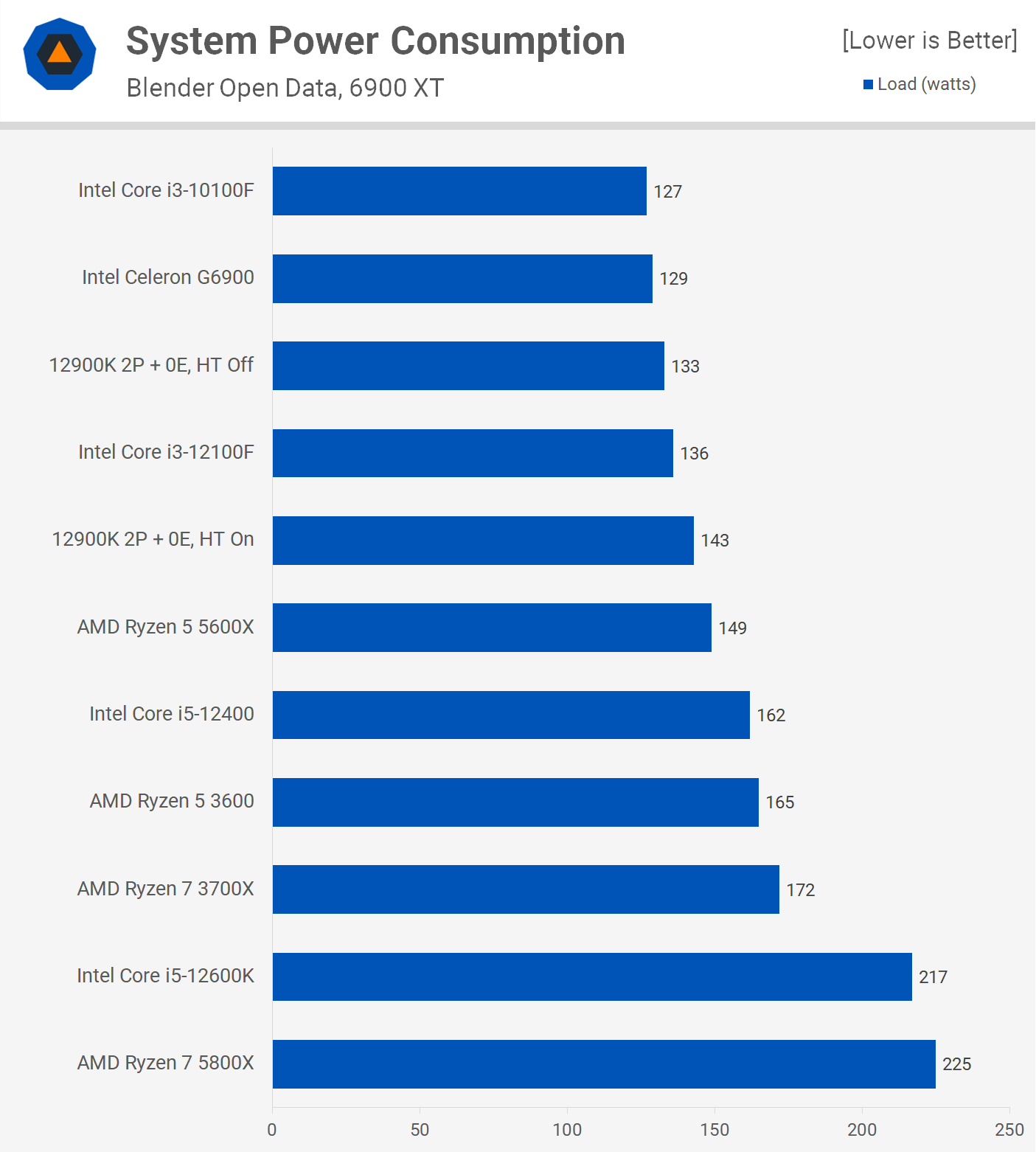

Disappointingly, in our test system the G6900 consumed slightly more power than the Core i3-10100F, despite being significantly slower. So in terms of power efficiency it's not particularly impressive. In fact, the dual-core 12900K configuration, clocked 47% higher, only consumed a few extra watts, which was quite unexpected.

Gaming Benchmarks

Time for some gaming, well, maybe not gaming exactly. More like horrific frame stuttering...

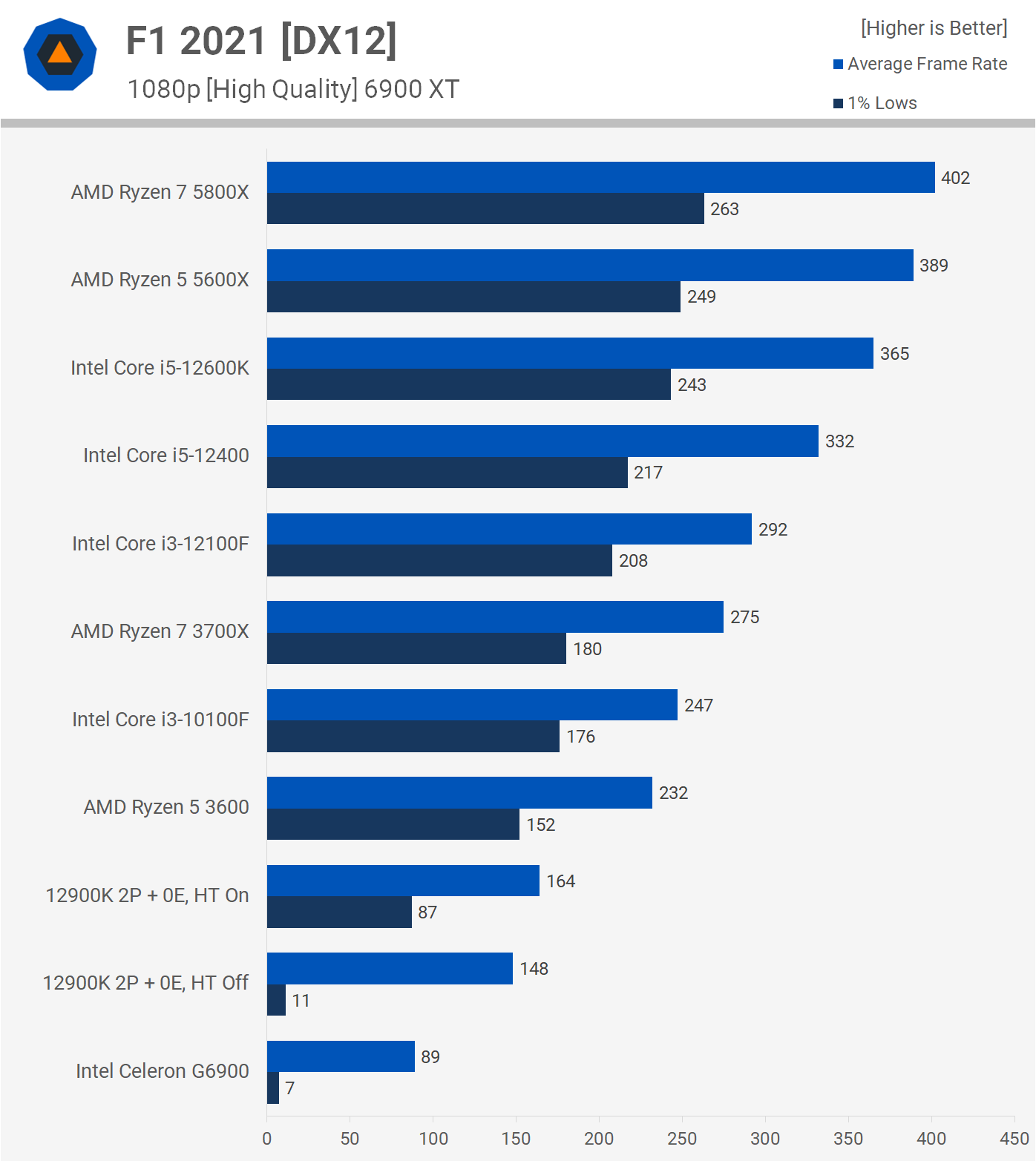

The Celeron G6900 looks okay in F1 if we focus on the 89 fps average, but taking one look at the actual gameplay, it's evident we had an issue with constant stuttering resulting in a 1% low of just 7 fps. The dual-core 12900K configuration wasn't any better when it came to actual playability.

However, with hyper-threading enabled, the 1% lows picked up dramatically and the game became very playable with barely any frame pacing issues. Of course, it was still much slower than even the Ryzen 5 3600, but the performance could now pass as playable.
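For anyone wondering how an average near 89 fps can coexist with a 1% low of around 7 fps, here's a minimal sketch. It uses one common definition of 1% lows (the average frame rate across the slowest 1% of frames), which is an assumption rather than a description of our capture tooling, and the frame times are synthetic values picked to land near the F1 figures.

```python
def average_fps(frame_times_ms):
    """Average FPS over a run: total frames divided by total seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms):
    """Average FPS across the slowest 1% of frames (one common definition)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return count / (sum(worst) / 1000.0)


# Synthetic run (not captured data): 990 smooth frames at 10 ms each,
# plus 10 stutter frames at 140 ms each.
frame_times_ms = [10.0] * 990 + [140.0] * 10

avg = average_fps(frame_times_ms)
low = one_percent_low_fps(frame_times_ms)
print(f"Average: {avg:.1f} fps, 1% low: {low:.1f} fps")
# prints "Average: 88.5 fps, 1% low: 7.1 fps"
```

Just ten long frames out of a thousand barely dent the average, yet they are exactly what you feel as stutter, which is why we focus on the 1% lows when judging playability.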

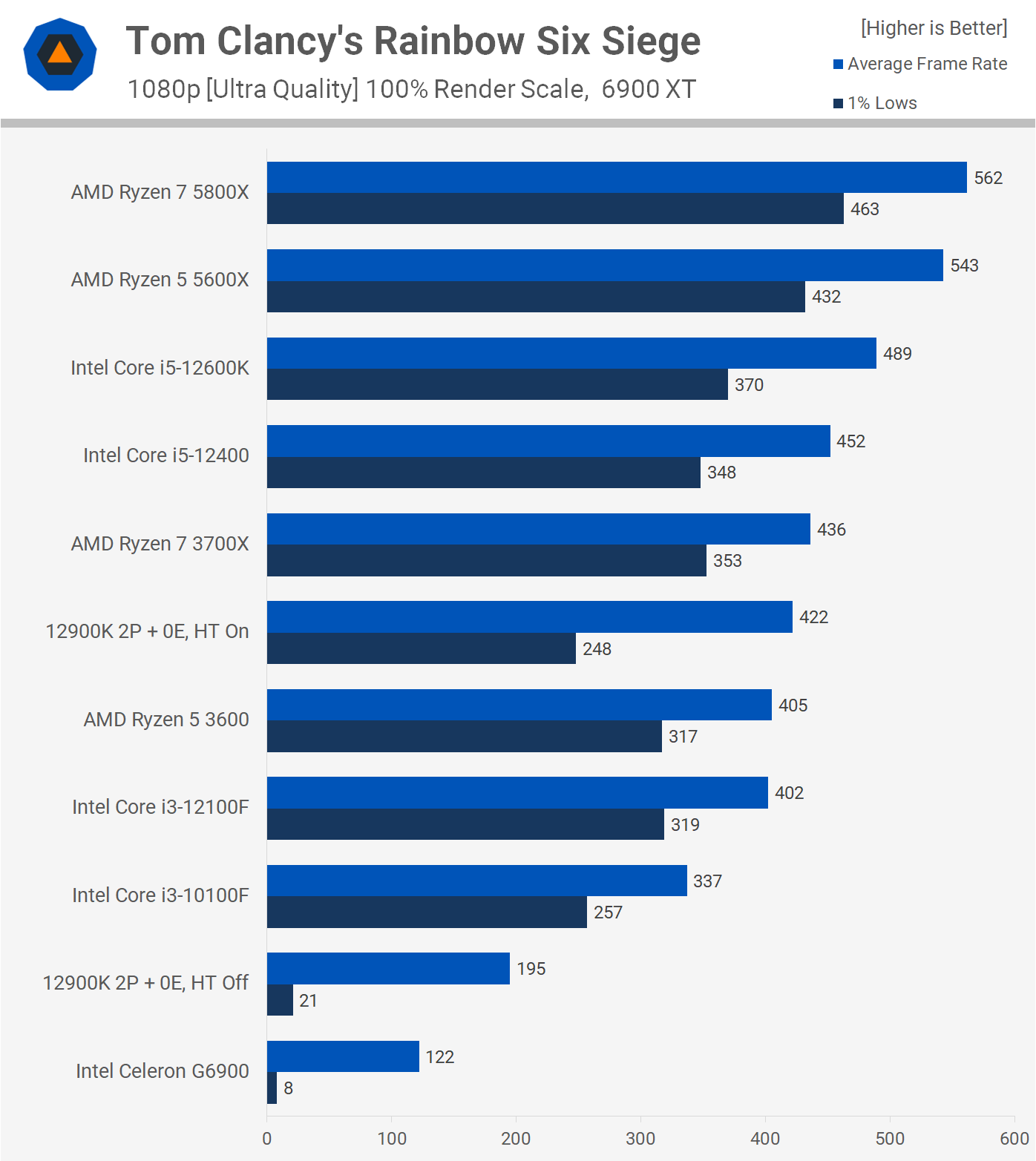

Rainbow Six Siege suffered similar issues with the G6900. The average frame rate looked great, but it was the 1% lows that were terrible, making the game a stuttery mess and completely unplayable.

Even with two really fast cores, the game remained unplayable with 1% lows of 21 fps. We did find with HT enabled that the dual-core 12900K was able to deliver playable performance and although frame consistency wasn't as good as it could have been, performance overall was solid and playable.

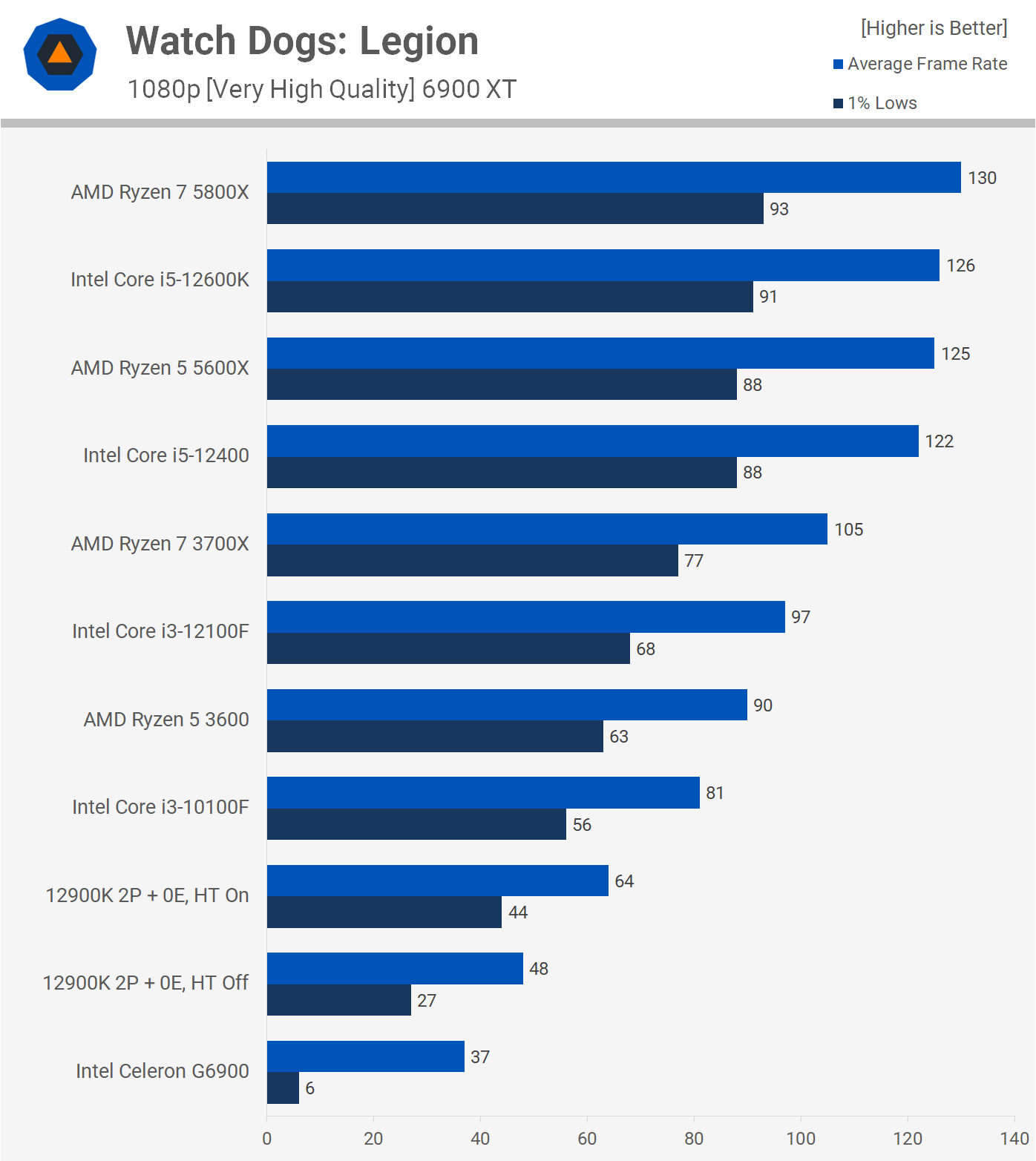

Watch Dogs: Legion was also horrible with the G6900 showing performance that was miles away from resembling anything playable. The dual-core 12900K was miles better, but still pretty rough while the HT enabled configuration was playable, though still suboptimal when compared to a budget part like the 10100F.

Even older games like Shadow of the Tomb Raider aren't playable with the Celeron G6900, not even close. As it stands, there is no dual-core processor or dual-core configuration on the planet that can enable playable performance in this game, at least without SMT support.

The 2-core/4-thread 12900K configuration was playable and performance overall was surprisingly good, despite being well down on the 10100F.

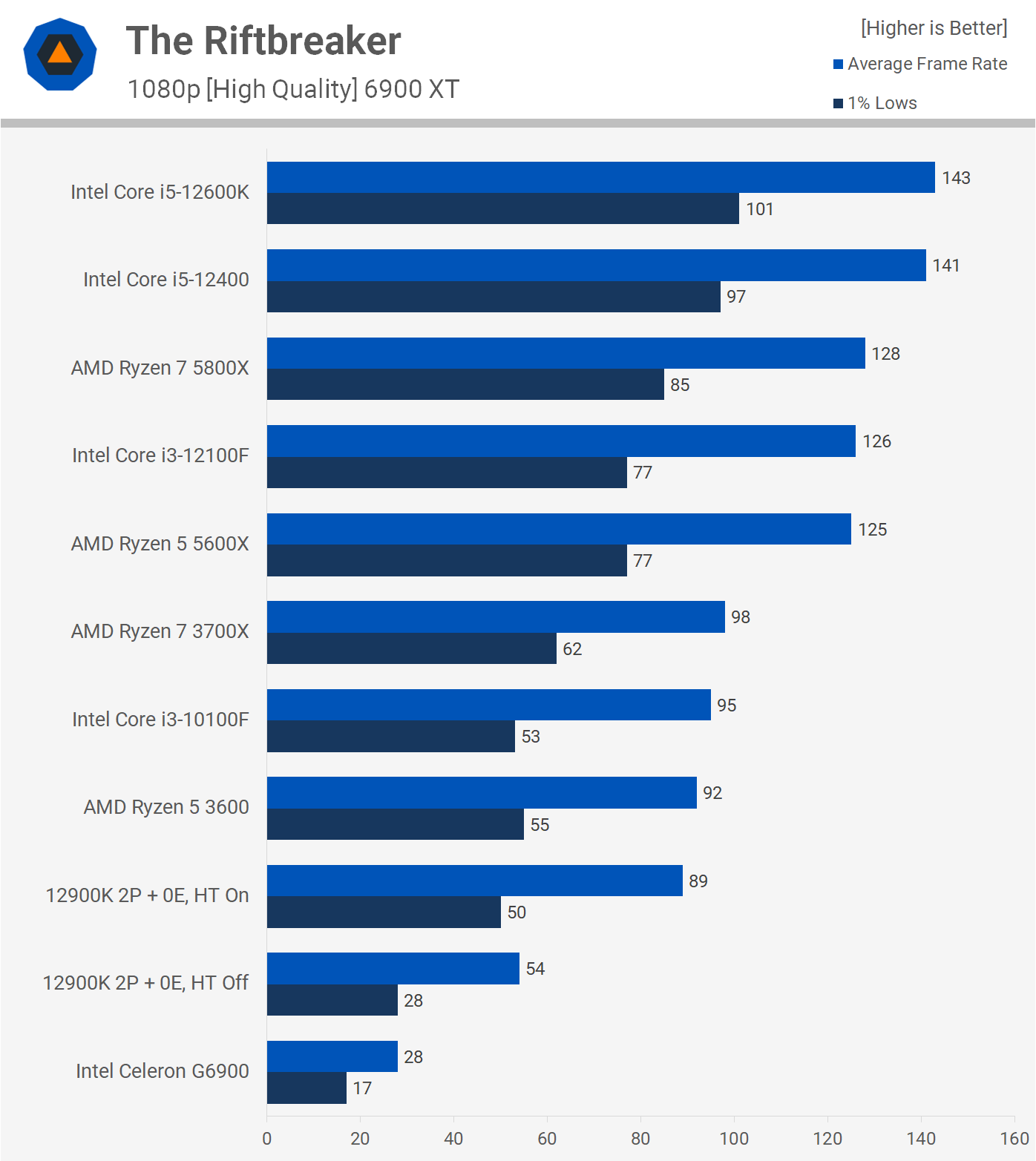

The Riftbreaker crashed several times when testing with the G6900 and performance was nowhere near playable when it wasn't crashing. The dual-core 12900K was better but it required HT support to deliver perfectly playable performance, though that's still an impressive achievement for a dual-core configuration.

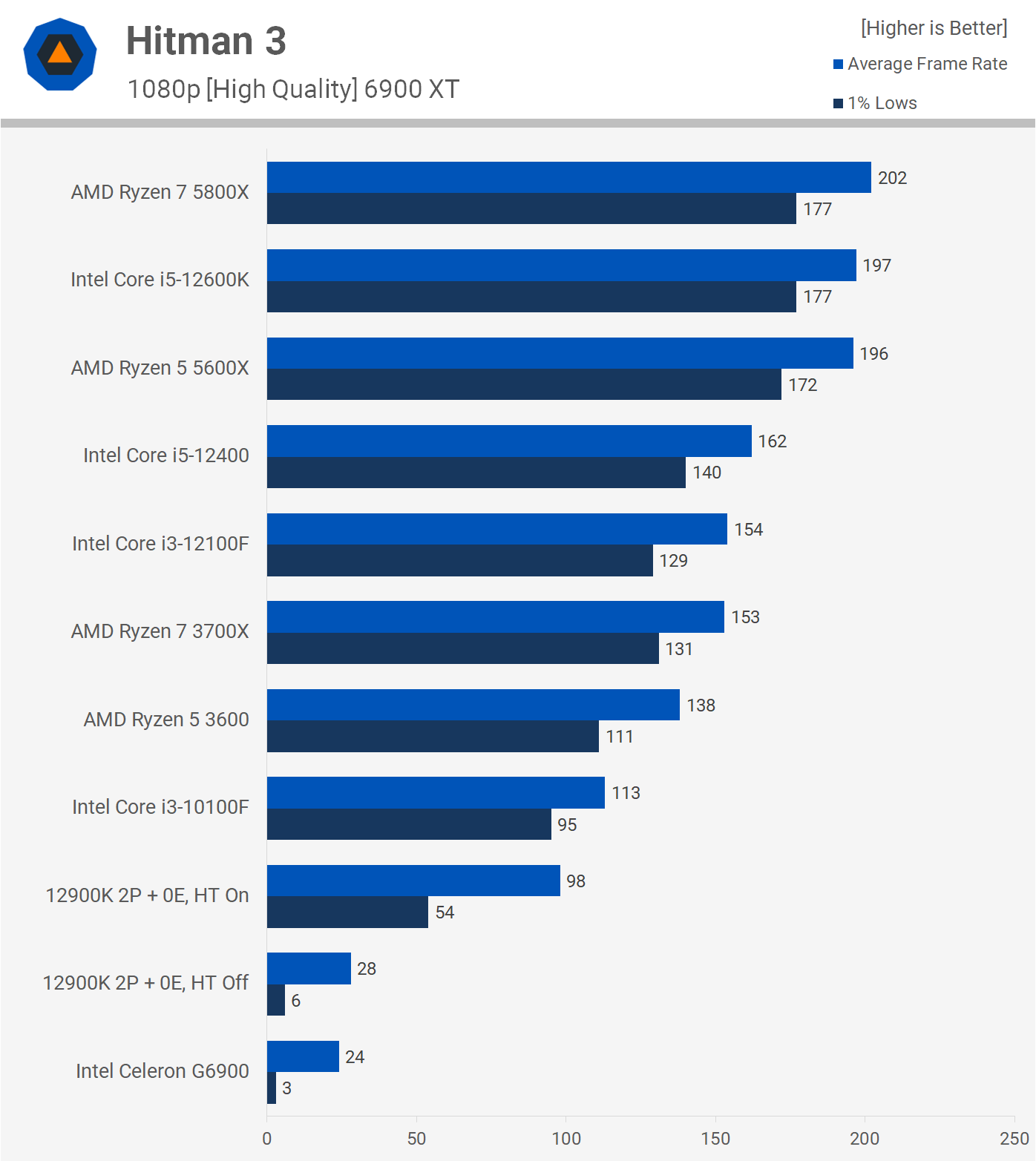

Hitman 3 was nothing more than stuttering frames with the G6900, and there wasn't a moment that revealed normal-looking gameplay. The 2-core/2-thread 12900K configuration was no better, but with HT enabled it was a completely different result and now the game was playable. Frame consistency wasn't great and stuttering was very noticeable at times, but overall the game was playable.

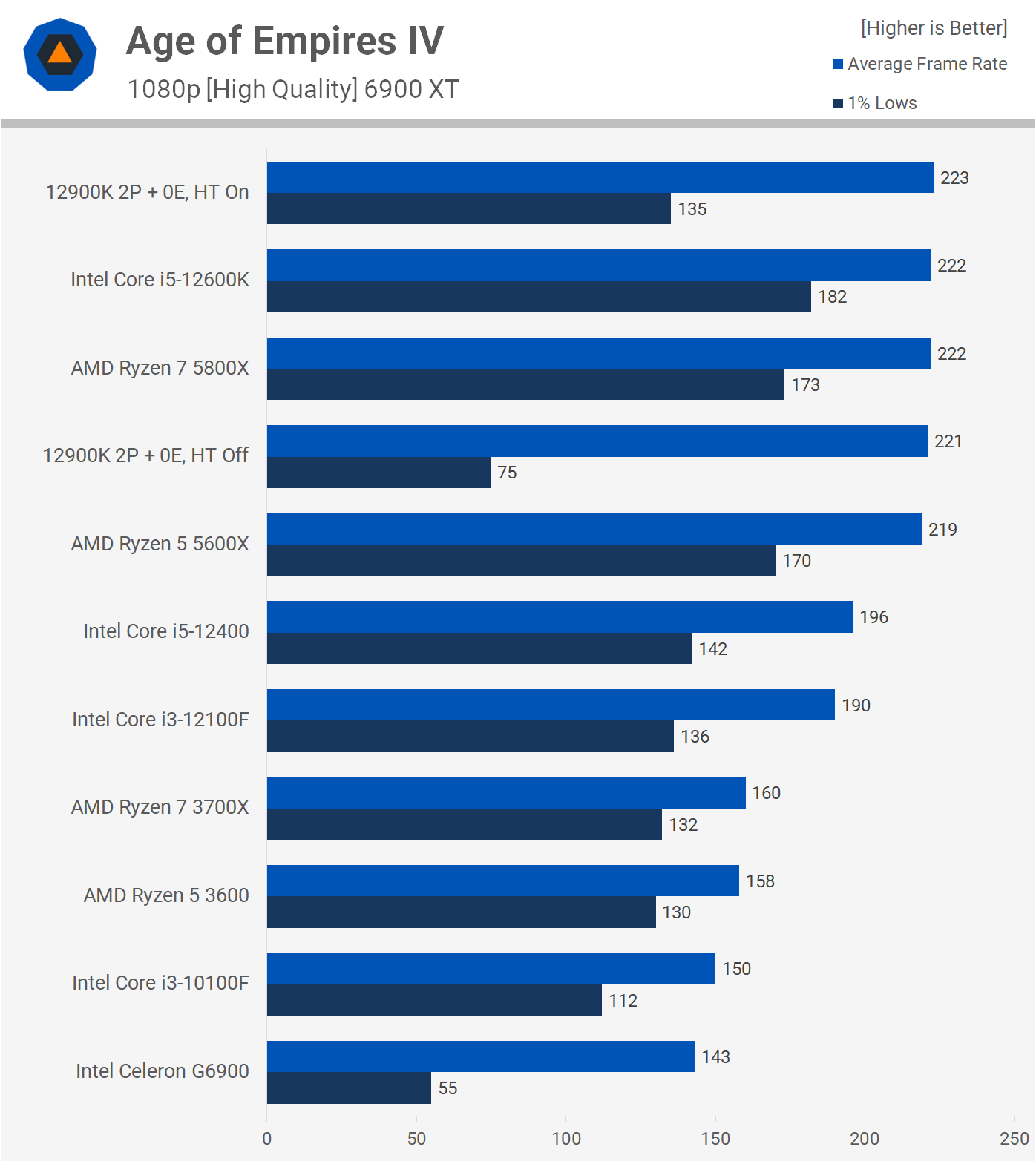

Age of Empires IV is a lightly threaded game, so the G6900 manages to survive here. The experience wasn't stutter-free, but overall it was playable, and this is the first example we have where the Celeron processor can actually run a game acceptably.

Far Cry 6 is another lightly threaded game, but the G6900 didn't come close to working. The dual-core 12900K was unusable as well, though we do find another instance where enabling hyper-threading saved performance. Sure, the 1% lows are still weak, but the 2-core/4-thread 12900K configuration was usable.

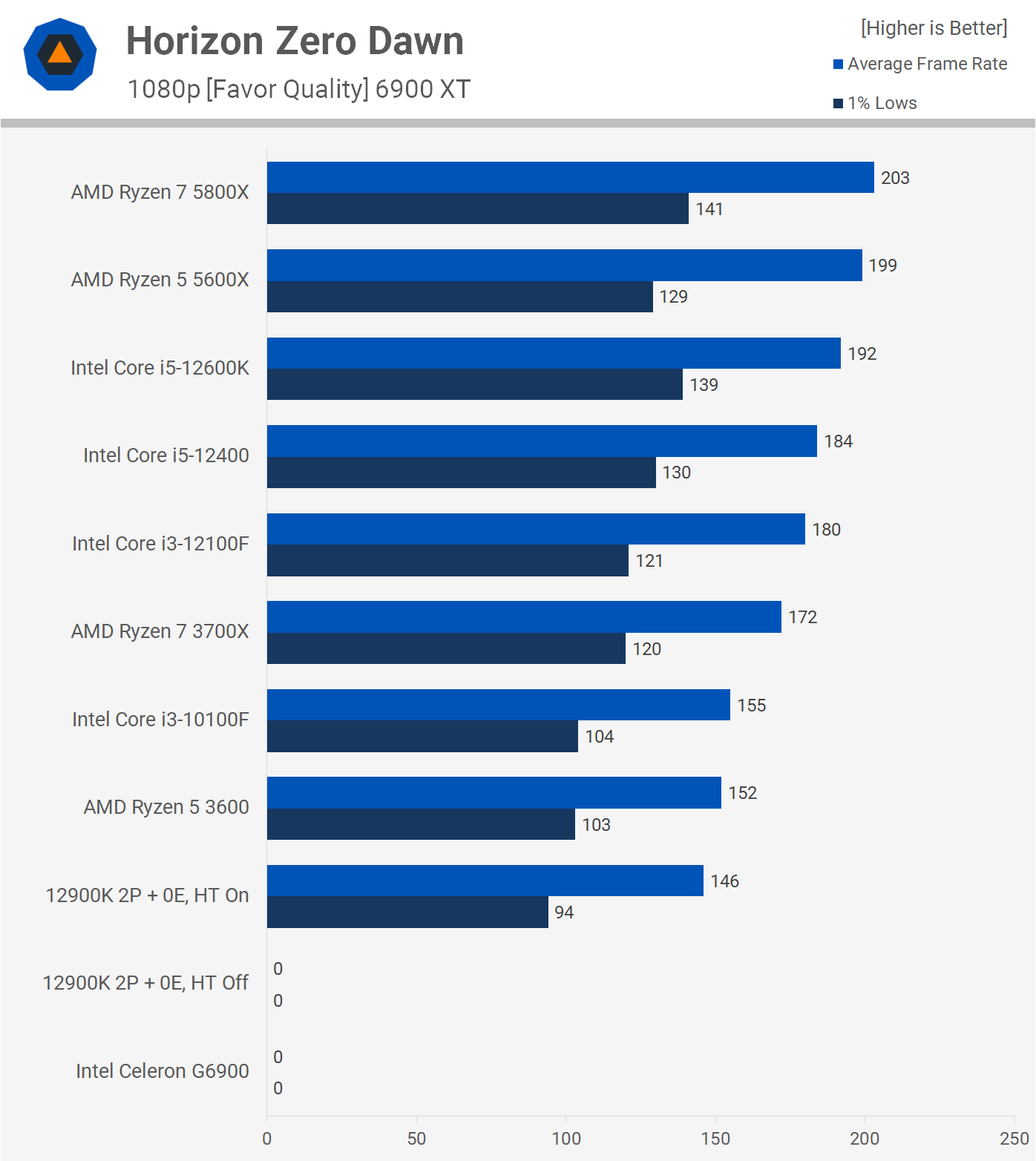

Horizon Zero Dawn would load into the menu with just 2 cores and 2 threads, but that was as far as you're getting with this configuration. The built-in benchmark and the game itself failed to load. Even after 30 minutes, I was still stuck at the loading screen.

Enabling hyper-threading solved this for the 2-core/4-thread 12900K configuration, enabling strong performance that was similar to the Ryzen 5 3600 and Core i3-10100F.

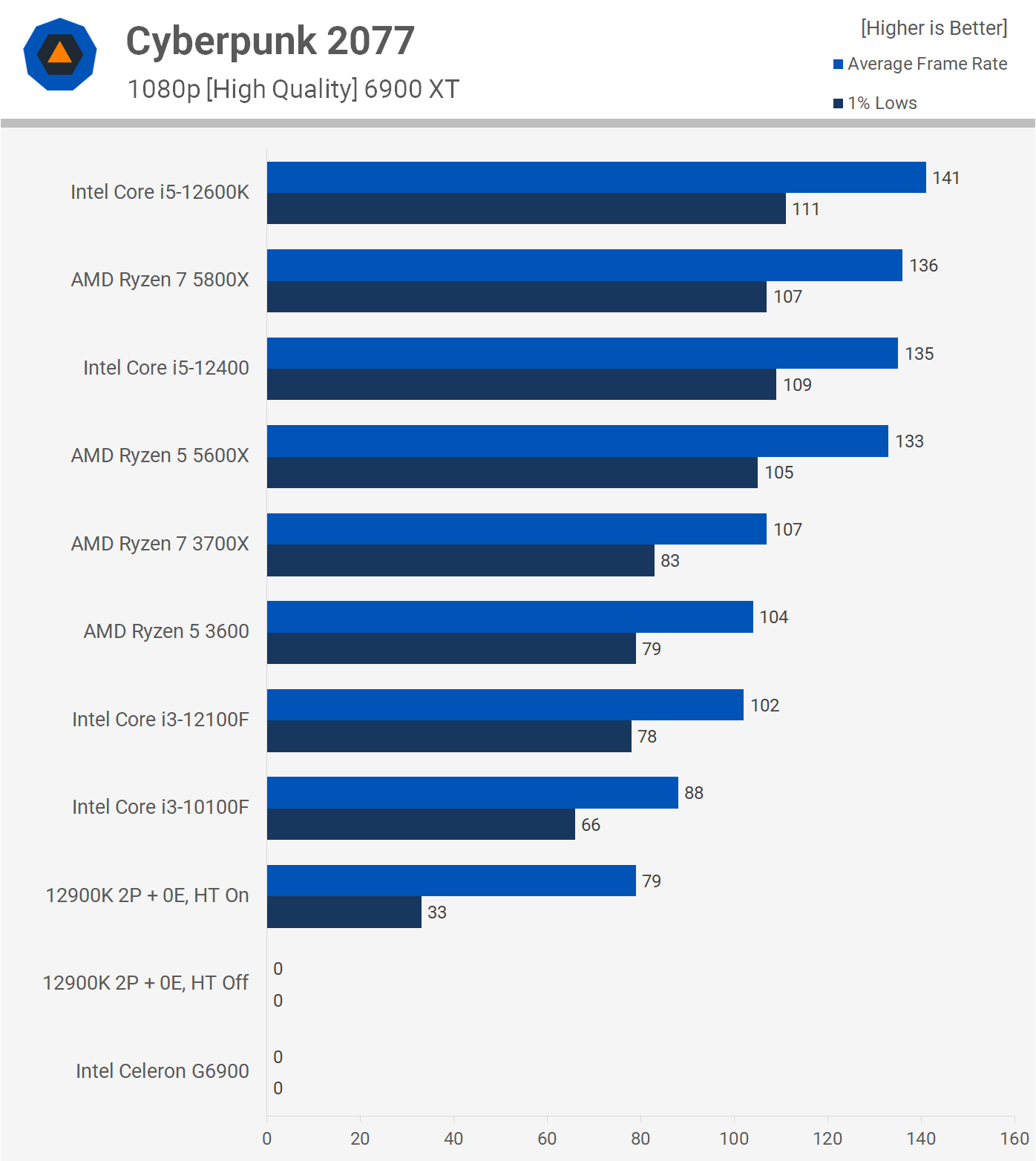

Cyberpunk 2077 is also broken with just 2 cores, and like Horizon Zero Dawn, you can't load or save games. Enabling hyper-threading on the dual-core 12900K solved that issue and the game was now playable. Of course, frame pacing wasn't amazing, but the game was playable and not as bad as I was expecting.

There's not much of a point looking at the 10-game average considering the Celeron G6900 failed to post a result in some of the games, while performance in most was unplayable. Needless to say, unless you're playing 5 year old games and in some instances even older titles, the Celeron G6900 is not going to cut it.

What We Learned

As far as gaming CPUs go, the Celeron G6900 is a no-go because as we noted earlier, the Core i3-10100F costs just $10 more. Moreover, the Core i3-12100F should be priced around $120, though that part appears to be harder to get at the moment.

Frankly, the Celeron G6900 doesn't make sense at any price. For gamers, it would be a tough sell even at $20, because you know, it can't play any modern games.

Even if you paired it with the cheapest H610 board around, for $50 more you could land instead a Core i3-10100F + H510 combo, which is ~3x faster and allows for playable performance in all modern games.

The Celeron G6900 is just too slow, the L3 cache is too small, the clock speeds are too low, and crucially the lack of hyper-threading means many new games won't even work. If you're on a tight budget, just get the Core i3-10100, which is about the same price when factoring in board costs, and if you want to go cheaper than that, we suggest you try your luck on the second-hand market.

Ideally, the Core i3-12100F is as low as gamers will want to go today, with the Core i5-12400F being our recommended sweet spot. Gamers aside, we still don't see who the G6900 makes sense for. Again, for roughly the same money, you'd just get the much more powerful Core i3-10100F.

Intel also plans to offer the Pentium G7400, which should arrive next month. The Pentium is a slightly higher clocked G6900 with 50% more L3 cache and hyper-threading support. It won't be as fast as the 2-core/4-thread 12900K configuration shown in this review, so the Core i3-10100F will still be a better buy, with the 12100F being a far better choice. Put simply, the 12100F is without question the cheapest Alder Lake CPU you're going to want to bother with.

Bottom line, the Celeron G6900 has been a failed experiment for us. This dual-core simply isn't powerful enough for gaming, and while it did work well enough for general usage, there are much better alternatives for essentially the same price.