A hot potato: Police departments are using a new tool that allows them to write reports using generative AI. The software vendor claims its approach has solved some of the more vexing problems associated with gen AI, such as hallucinations, but the technology has yet to face the scrutiny of the courts, which means the debate over its use is far from over. Discussions are expected to center on issues of privacy, civil rights, and justice.

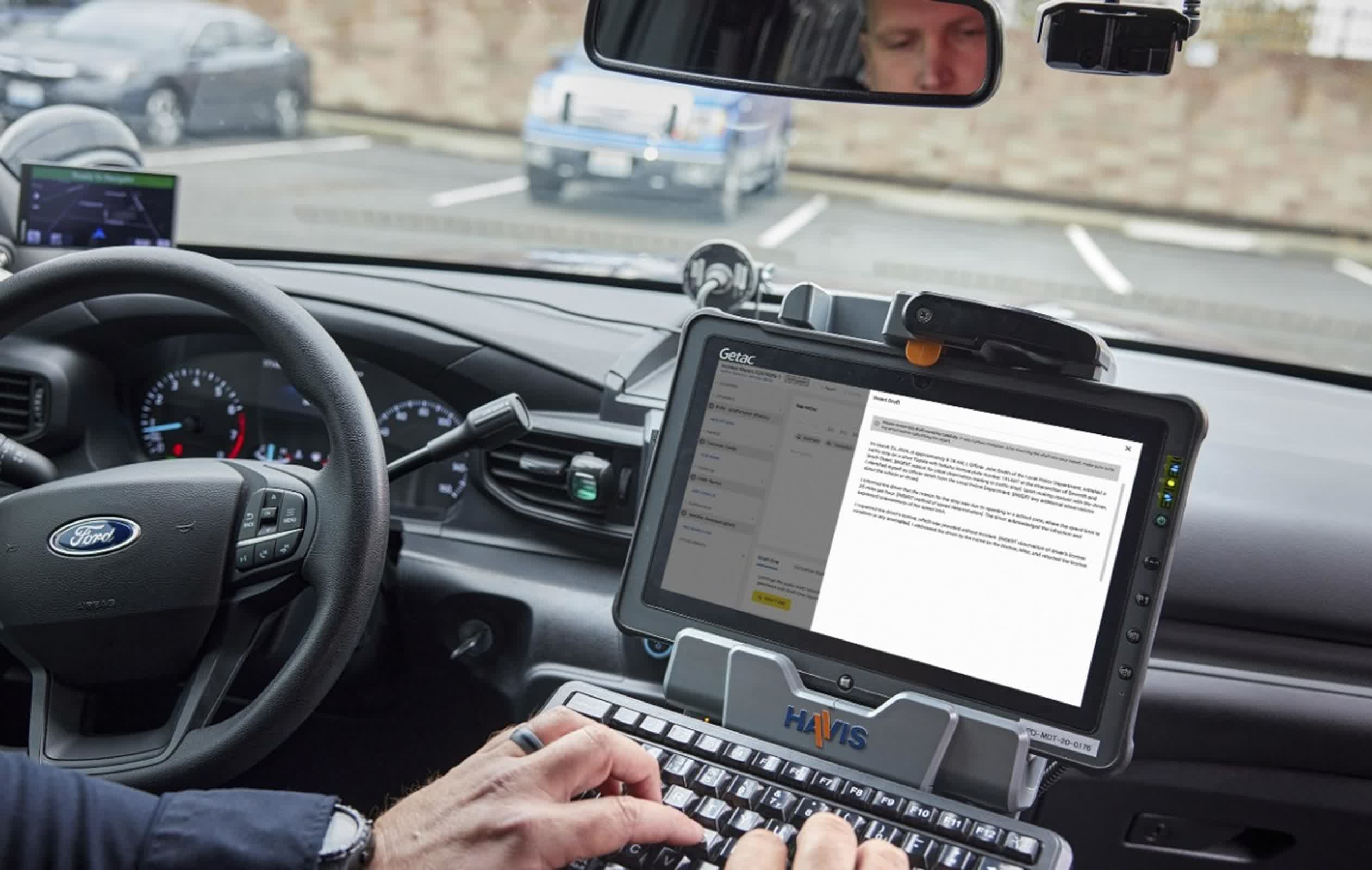

Police departments, long accustomed to using technology in their operations, have recently started integrating generative AI into their report-writing processes. This shift follows the introduction of Draft One, a new tool created by police equipment vendor Axon earlier this year.

Draft One uses Microsoft's Azure OpenAI platform to transcribe audio from police body cameras and then generate draft narratives. According to Axon, the reports are based strictly on the audio transcripts to keep them accurate and objective, avoiding any form of speculation or embellishment.

Officers are required to verify and approve the reports, which are flagged to indicate AI involvement in their creation. Although Draft One relies on the same underlying technology as ChatGPT, which is known for occasionally producing misleading information, Axon asserts that it has tuned the AI to prioritize factual accuracy and minimize hallucinations.

"We use the same underlying technology as ChatGPT, but we have access to more knobs and dials than an actual ChatGPT user would have," Noah Spitzer-Williams, who manages Axon's AI products, told The AP. Turning down the "creativity dial" helps the model stick to facts so that it "doesn't embellish or hallucinate in the same ways that you would find if you were just using ChatGPT on its own," he said.
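Axon has not said exactly what its "creativity dial" controls. In language models generally, a setting of this kind is usually called temperature: the model's raw scores for each candidate next word are divided by the temperature before being converted to probabilities, so a lower value concentrates probability on the top-scoring word. The sketch below illustrates that general idea only, assuming the dial works like temperature; the function name and the scores are hypothetical and are not taken from Draft One.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature.

    Hypothetical helper for illustration; not Axon's or OpenAI's code.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

for temperature in (1.0, 0.2):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At the lower setting, nearly all of the probability lands on the highest-scoring candidate, which makes the output more predictable. That reduces randomness, but it does not by itself guarantee that the most likely word is factually correct.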

The selling points of Draft One are clear. It is marketed as a tool designed to significantly reduce the time officers spend on paperwork, potentially cutting down report-writing time by 30-45 minutes per report. This efficiency, Axon says, allows officers to dedicate more time to community engagement and decision-making, which could enhance de-escalation outcomes.

To be fair, officers who have used Draft One report that these AI-generated documents are not only time-efficient but also accurate and well-structured. Moreover, the AI sometimes captures details that officers might overlook.

Police departments across the country have been using Draft One in different ways. In Oklahoma City, AI-generated reports are currently limited to minor incidents that do not involve arrests or violent crimes, following recommendations from local prosecutors to proceed cautiously.

Conversely, in cities like Lafayette and Fort Collins, AI usage is more extensive, even encompassing major cases, although challenges remain, such as handling noisy environments.

Axon has not disclosed how many police departments currently use its technology. The company is not alone in this market, as startups such as Policereports.ai and Truleo offer comparable products. Still, given Axon's established relationships with the law enforcement agencies that buy its Tasers and body cameras, industry experts and police officials expect AI-generated reports to become increasingly widespread in the near future.

Despite its potential, the use of AI in report writing has sparked concerns among legal scholars, prosecutors, and community activists. They worry about AI's tendency to "hallucinate," or produce false information, and about the possibility of AI altering critical documents within the criminal justice system. Other concerns include societal biases being embedded in reports and the risk of officers becoming overly reliant on AI, potentially leading to less meticulous report writing.

"I am concerned that automation and the ease of the technology would cause police officers to be sort of less careful with their writing," said Andrew Ferguson, a law professor at American University working on what appears to be the first law review article on the emerging technology.